Housing is a serious issue across the country, and here in Minneapolis there has been a big discussion about new zoning policies that could be a model for cities everywhere.

In true midwestern fashion, the favored way to fight this out on the ground is the passive-aggressive yard sign. Homeowners kicked it off, followed by a pro-development crowd seeking more affordable housing.

Regardless of where you stand on the issue, both groups draw grassroots support from local residents who live in Minneapolis and have a stake in how it might change. Just recently, though, someone else jumped on the bandwagon. A new set of shiny yard signs started popping up all over my neighborhood. Someone had coordinated an overnight drop, putting out three or more signs every block with this slogan:

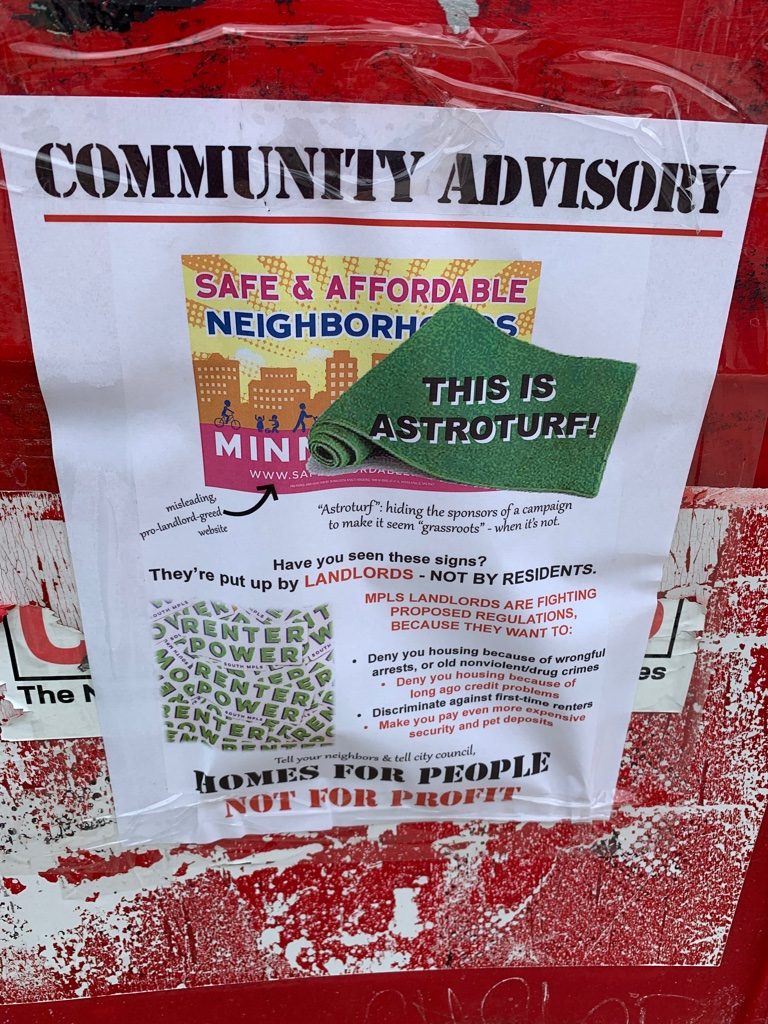

Many of the signs were outside apartment buildings, and it turns out that they came from a group of landlords organizing against protections for renters. I came home to my apartment one day to find three signs posted in the front yard of the building. Nobody told us these signs were going up, and many of them were removed the following week.

This is a classic example of what social scientists call “astroturfing,” a practice where business leaders copy grassroots activism strategies to advocate for their political interests. According to sociologist Edward Walker, full-on astroturfing, where a business relies on deception to suggest grassroots support, is pretty rare. It is a risky practice that can backfire if the business gets caught. Instead, businesses are getting much more savvy by adopting other kinds of grassroots organizing tactics to drive attention to their interests.

These signs show the power of astroturfing, because we usually assume a lawn sign is a pretty direct statement, one that represents the person who lives behind it. Sure, landlords can lobby just like everyone else, but do they have a right to do it in front of where their tenants live, especially if those tenants might disagree? A counter-mobilization effort is already underway in the neighborhood.

Evan Stewart is an assistant professor of sociology at the University of Massachusetts Boston. You can follow his work at his website, on Twitter, or on BlueSky.

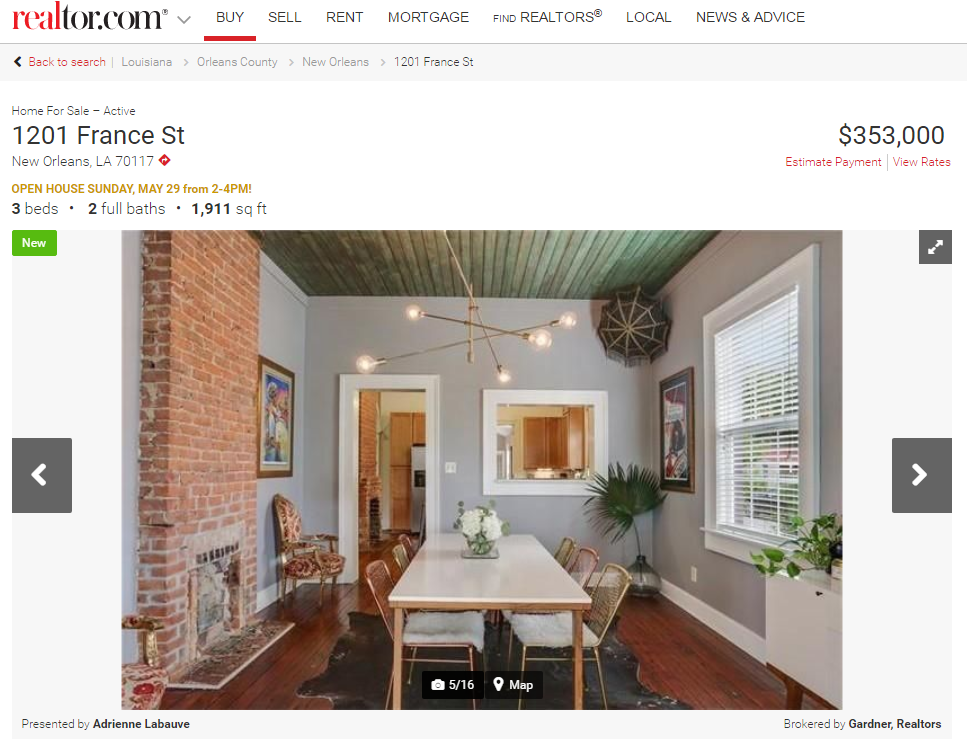

The dining rooms are coming. It’s how I know my neighborhood is becoming aspirationally middle class.

To make a long, well-put, and worth-reading argument short: eviction isn’t as rare as many policymakers and sociologists might assume; it is actually a horrifyingly common phenomenon. Urban sociologists have missed the magnitude of eviction because they have traditionally used neighborhoods as the unit of analysis, studying issues such as segregation and gentrification. Because eviction is rarely studied, we don’t have good data on it. Building an eviction dataset is not a simple data-collection task, given that there are many forms of informal eviction. The consequences of eviction are devastating and have a profound, negative, and lifelong impact on subsequent trajectories: worse housing, more eviction, and homelessness, all disproportionately affecting women of color with children (“a female equivalent of mass incarceration,” Desmond argued at a
