Flashback Friday.

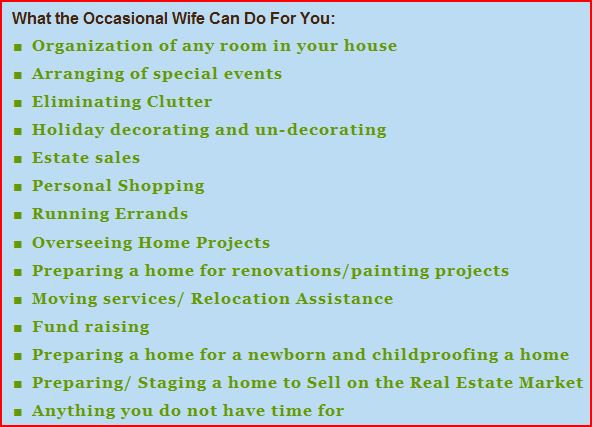

Heather L. sent us a link to a business called The Occasional Wife. Its slogan: “The Modern Solution To Your Busy Life.” The store sells products that help you organize your home and office, and it provides all kinds of helpful services to support your personal goals.

There are two things worth noting here:

First, the business relies on and reproduces the very idea of “wife.” As the website makes clear, wives are people who (a) make your life more pleasurable by taking care of details and daily life-maintenance (such as running errands), (b) organize special events in your life (such as holidays), and (c) deal with work-intensive home-related burdens (such as moving), all while perfectly coiffed and in high heels.

But the business only makes sense in a world where “real” wives are obsolete. Prior to industrialization, most men and women worked together on home farms. With industrialization, all but the wealthiest families relied on (at least) two breadwinners. In the 1950s, the era to which this business implicitly harkens back, Americans were bombarded with ideological propaganda praising stay-at-home wives and mothers (in part to pressure women out of jobs that “belonged” to men after the war). Since then, women have increasingly participated in wage labor. Today, two-parent, single-earner families make up only a minority of families.

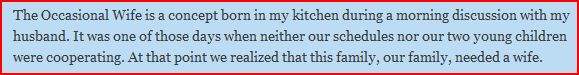

So, in our “modern” world, even when there is a wife in the picture, there’s rarely a “wife.” But as the founder explains, it’d sure be nice to have one:

See, she was his wife, but not a wife.

Of course, this is nothing new. Tasks performed by wives have been increasingly commodified (that is, turned into services for which people pay): for example, house cleaning, cooking, and child care. This business simply makes that shift explicit by invoking the ideology. The fact that the term “wife” works this way (i.e., brings to mind the 1950s stereotype) in the face of a reality that looks very different shows just how powerful ideology can be.

Originally posted in 2009; the business has grown from one location to four.

Lisa Wade, PhD is an Associate Professor at Tulane University. She is the author of American Hookup, a book about college sexual culture; a textbook about gender; and a forthcoming introductory text: Terrible Magnificent Sociology. You can follow her on Twitter and Instagram.