I’m posting to get some feedback on my initial thoughts in preparation for my chapter in a forthcoming gamification reader. I’d appreciate your thoughts and comments here or @pjrey.

My former prof Patricia Hill Collins taught me to begin inquiry into any new phenomenon with a simple question: Who benefits? This, I suggest, is the approach we must take to the Silicon Valley buzzword du jour: “gamification.” Why does this idea now command so much attention that we feel compelled to write a book on it? Does a typical person really find aspects of his or her life becoming more gamelike? And who is promoting all this talk of gamification, anyway?

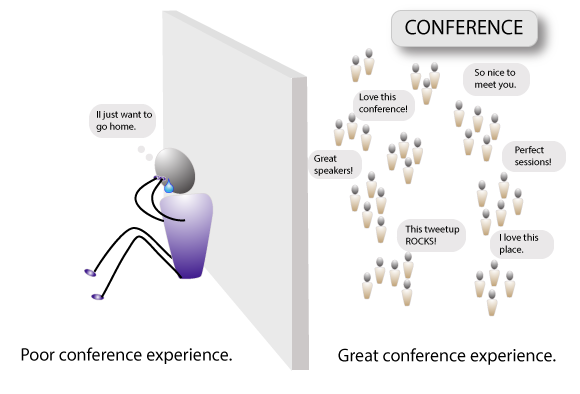

It’s telling that conferences like “For the Win: Serious Gamification” or “The Gamification of Everything – convergence conversation” are taking place in business (and not, say, sociology) departments or being run by CEOs and investment consultants. The Gamification Summit invites attendees to “tap into the latest and hottest business trend.” Searching Forbes turns up far more articles (156) discussing gamification than the New York Times (34) or even Wired (45). All this makes TIME contributor Gary Belsky seem a bit behind the times when he predicts “gamification will soon rule the business world.” In short, gamification is promoted and championed not by game designers, people interested in game studies, sociologists of labor and play, or even human-computer interaction researchers, but by business folks. And given that the market for videogames is already worth more than $25 billion, it shouldn’t come as a surprise that business folks are looking for new growth areas in gaming.