When the team here at Cyborgology first started working on The Quantified Mind, a collaboratively authored post about the increasing metrification of academic life, production, and “success”, I immediately reached out to Zach Kaiser, a close friend and collaborator. Last year, Zach produced Our Program, a short film narrated by a professor from a large research institution at which a newly implemented set of performance indicators has the full attention of the faculty.

For my post this week, then, I’d like to consider Zach an Artist in Residence at Cyborgology: someone who produces and disseminates works that embody the types of cultural phenomena or theories covered on the blog (as it turns out, this is not Zach’s first film featured on Cyborgology). I suppose it’s up to him if he’d like to include the position on his CV. In the following, I would like to present some of my reactions to the film and let Zach respond, hopefully raising questions that can be asked in dialogue with the ones presented at the end of The Quantified Mind. In full disclosure, I am very familiar with Zach’s scholarship and art (I’m listed as a co-author or co-artist on much of it, though not Our Program in particular), so I hope I don’t lead the witness too much here.

But first, the film:

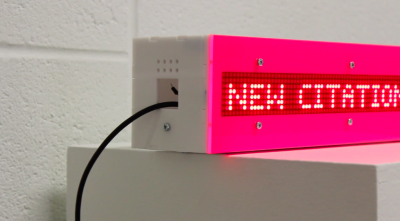

Zach is a classically trained designer teaching in the art department of a Research I school, and his perspective is valuable here for a number of reasons: his day-to-day is obviously shaped by the metrification trend in academia (especially considering his pre-tenure status), and he has also worked in the commercial realm with companies and organizations enamored with the exact sort of technologically enabled quantification tools and systems (read: big data, et al.) driving the platforms through which academics’ metrics are being tallied. My first reaction to the film, then, is about the use of an object as the main visual here. After all, the film is not about a device. It’s not called Our Ticker or Our Shiny White Box With Seductive Red LEDs, it’s called Our Program; it’s about a cultural phenomenon with, for all intents and purposes, no real consumer-facing physical manifestation (beyond, perhaps, online dashboards or the like).

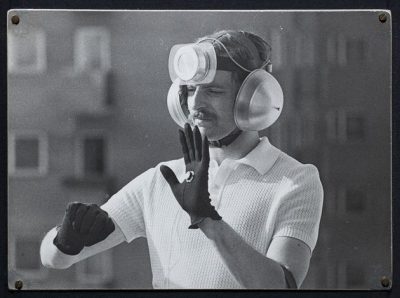

I’m reminded, however, of a book I recently read, Elizabeth Wilson’s Affect and Artificial Intelligence, a brief but fascinating argument for a reconceptualization of AI away from the stereotypical “cool”, emotionless field for mathematicians and computer scientists and into a significantly warmer, more emotional place. Alongside this main pitch, she also suggests that the proliferation and improvement of AI technologies will increase when all parties involved agree on the aforementioned reframing, that is, when AI is understood to be less Skynet, more PARO. One striking piece of research that stands out to me now in the context of Our Program comes in the chapter discussing ELIZA and PARRY, two early conversational programs from the 1960s and 1970s, the first simulating a psychotherapist and the second a paranoid patient. Wilson references Sherry Turkle (frequently written about on this blog) and her work on humans’ relationships to technology, but ultimately dismisses this research in favor of that of Byron Reeves and Clifford Nass, who argue that we as a species are drawn to befriend our technological devices, summed up by Wilson as our “direct affiliative inclinations for artificial objects” (95).

ZK: Considering the metrification of academia in the context of affect is something I didn’t originally conceive as part of the work, but I’m reminded here of various efforts (within the humanities) to produce better metrics that are specific to humanities disciplines as opposed to inheriting metrics systems from the “hard” sciences. This strikes me as curious when situated in relationship to PARO or Siri. Ironically, through producing more “humane” metrics, we may end up furthering the idea that humans are fundamentally computational in nature.

A drive to produce more nuanced or, in the case of the humanities, humane metrics, or to make AI more relatable is not about the metrics or the AI themselves but is, I would suggest, about what we think about ourselves as people—whether we are, or are not, at some basic level, computational. The apotheosis of such a belief is a kind of pan-computationalism, where microbes and microchips operate in glorious harmony, not unlike the proclamations made in the poem “All Watched Over by Machines of Loving Grace,” by Richard Brautigan. Such a belief also caters to a neoliberalization of all life underpinned by models of self-interested human behavior that reach back to the early days of game theory.

To me, it’s not necessarily about asking whether we want to have affinities with artificial intelligence or computational objects in general but to what degree our affinities with those things become absorbed into our own ontological space, rendering us just as computational as those objects. In this way, I see a strong connection between efforts to make scholarly metrics more nuanced, sophisticated, contextual, etc., and an affinity for a PARO over a Skynet.

GS: If you’ve read my recent posts on the value of using obvious fiction in art and design versus trying to seem “real”, then you won’t be surprised that I hope Zach will discuss how he frames his narrative. This is not a piece of marketing. He does not leave his name off of the film. Nor does he call the piece a “product tour” or “brand video” on his website. That said, it is obviously influenced by his real-life experiences in academia, experiences that we recognize as very much not unique in The Quantified Mind. Why fiction, then?

ZK: I was recently asked if the “parody” can keep up with “reality.” Career benchmarking in higher education in Europe (like Reappointment, Promotion, and Tenure here in the States) is increasingly metrics-focused. A European colleague once told me about his dissertation committee, which required him to prove his impact via citations before he could graduate. Universities in the UK are using platforms like Simitive Academic Solutions to address “goal setting and alignment” to produce stronger accountability and incentive systems for faculty members.

I feel as though the fiction is a way of grappling with reality. The object, this “ticker” on which the film centers, is somewhat absurd in both its form and purpose. The intent was to make more explicit the link between the kind of (dare I say) neoliberal, market-based nature of faculty metrics and the physical faculty and university themselves: a “stock-ticker” that illustrates whether or not we as faculty members should continue to receive investment from our institutions. This kind of marketization of faculty data is already happening, and is not necessarily “new,” but the kind of control it wields is shifting. The more sophisticated, contextual, and nuanced the metrics become, covering not just citations or number of publications but everything related to faculty output (e.g., fitness data via partnerships with Fitbit and smart furniture manufacturers to determine whether more fit faculty produce more “impact”, other biometric and psychometric indicators to help faculty identify causes of stress that decrease productivity, weighting of metrics based on location, discipline, type of institution), the more administrators will rely on metrics to shape decision-making processes. As long as we develop ways to demonstrate our fundamentally computational nature, the influence of metrics on academia will be a positive feedback loop, with new metrics being developed, new decisions being based on those metrics, and new metrics being developed in response to the consequences of those decisions.

Gabi Schaffzin is pursuing his PhD in Art History, Theory, and Criticism, with a concentration in art practice, at UC San Diego.

Zach Kaiser is Assistant Professor of Graphic Design and Experience Architecture in the Department of Art, Art History, and Design at Michigan State University.

The two, along with other collaborators, have been working on the Culture Industry [dot] Club, a dynamic assemblage of artist-researchers engaged with emergent media practices and deep historical and theoretical research.