A few weeks ago, the Dow Jones fell 20% from its high. To many, this signals great uncertainty about the future of the market and could indicate a coming recession. And this was even before the COVID-19 crisis. In these uncertain economic times, we look back at how the Great Recession of 2008 affected families’ decision-making, including whether to have children, where to live, and how much to rely on family members for financial support. Perhaps this recent history can help us imagine what might lie ahead.

Demographers and other social scientists are interested in the fertility rate, or the average number of live births a woman has over her lifetime. After the Great Recession, the fertility rate fell below the replacement rate (roughly 2 children per woman) for the first time since 1987. Scholars attribute this change in fertility to increased economic uncertainty; people do not feel confident about having a child if they are not sure what will come next. In fact, the fertility rate fell the most in states with the most uncertainty: those hit hardest by the recession and “red states” concerned about the economic future under Obama.

- Schneider, Daniel. 2015. “The Great Recession, Fertility, and Uncertainty: Evidence From the United States.” Journal of Marriage and Family 77(5):1144–56.

- Guzzo, Karen Benjamin, and Sarah R. Hayford. 2020. “Pathways to Parenthood in Social and Family Contexts: Decade in Review, 2020.” Journal of Marriage and Family 82(1):117–44.

- Sobotka, Tomáš, Vegard Skirbekk, and Dimiter Philipov. 2011. “Economic Recession and Fertility in the Developed World.” Population and Development Review 37(2):267–306.

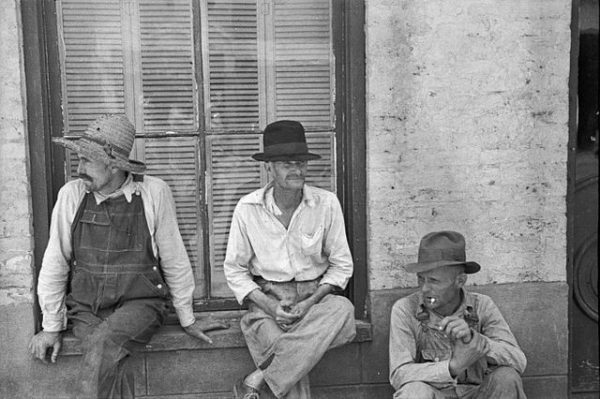

During the Great Recession, there was also an increase in the number of young adults, both single and married, living with their parents. Rates of young married adults living with their parents increased in 2006 to reach 1900 levels, which is surprising considering that the century between 1900 and 2000 is considered the “age of independence,” when many more young people moved out and established households of their own. This effect was particularly strong for young adults with less education and for those who had fewer resources to weather the storm of the recession on their own.

- Cherlin, Andrew, Erin Cumberworth, S. Philip Morgan, and Christopher Wimer. 2013. “The Effects of the Great Recession on Family Structure and Fertility.” The ANNALS of the American Academy of Political and Social Science 650(1):214–31.

The economic challenges of the late 2000s may also have led to an increase in interpersonal conflict within families. In part, this may stem from the pressure family members feel to serve as a safety net for relatives who are struggling financially. For instance, Jennifer Sherman found that some individuals who were experiencing financial hardship withdrew from their extended family during the Great Recession rather than asking for support. This, along with findings that giving money to family members during the recession increased an individual’s chance of experiencing financial stress of their own, raises questions about whether family networks can offer support in times of economic turmoil.

- Sherman, Jennifer. 2013. “Surviving the Great Recession: Growing Need and the Stigmatized Safety Net.” Social Problems 60(4):409–32.

- Pilkauskas, Natasha V., Colin Campbell, and Christopher Wimer. 2017. “Giving Unto Others: Private Financial Transfers and Hardship Among Families With Children.” Journal of Marriage and Family 79(3):705–22.