Cross-posted at Montclair SocioBlog.

The Wall Street Journal had an op-ed this week by Donald Boudreaux and Mark Perry claiming that things are great for the middle class. Here’s why:

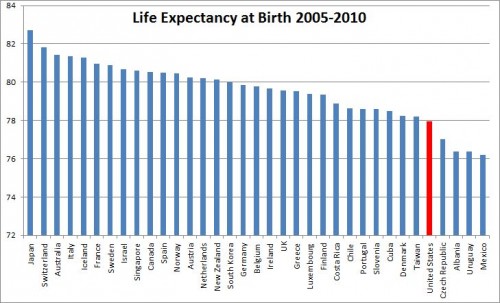

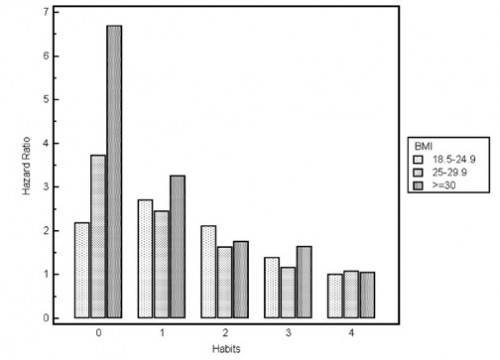

> No single measure of well-being is more informative or important than life expectancy. Happily, an American born today can expect to live approximately 79 years — a full five years longer than in 1980 and more than a decade longer than in 1950.

Yes, but. If life expectancy is the all-important measure of well-being, then we Americans are less well off than are people in many other countries, including Cuba.

The authors also claim that we’re better off because things are cheaper:

> …spending by households on many of modern life’s “basics” — food at home, automobiles, clothing and footwear, household furnishings and equipment, and housing and utilities — fell from 53% of disposable income in 1950 to 44% in 1970 to 32% today.

Globalization probably has much to do with these lower costs. But when I reread the list of “basics,” I noticed that a couple of items were missing, items less likely to be imported or outsourced, like housing and health care. So we’re spending less on food and clothes, but more on health care and houses. Take housing. Between 1984 and 2001 alone, the median home value for childless couples rose by 26% (inflation-adjusted); for married couples with children, who are competing to get into good school districts, the median home value ballooned by 78% (source).

The authors also argue that technology narrows the consumption gap between the rich and the middle class: there’s not much difference between the iPhone that I can buy and the one that Mitt Romney has. True, but that says only that products filter down through the economic strata, just as they always have. The first ballpoint pens cost as much as dinner for two in a fine restaurant. But if we look forward rather than back, we know that tomorrow the wealthy will be playing with some new toy most of us cannot afford. Then, in a few years, prices will come down, everyone will have one, and by that time the wealthy will have moved on to something else for us to envy.

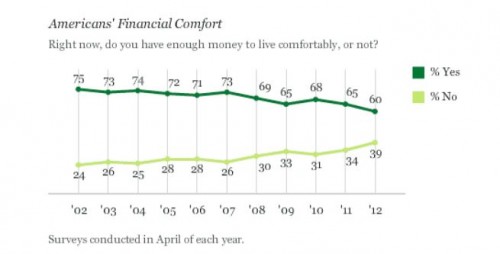

The readers and editors of the Wall Street Journal may find comfort in Boudreaux and Perry’s good news about the middle class. Middle-class people themselves, however, may be a bit skeptical when told that they’ve never had it so good (source).

Some of the people in the Gallup sample are not middle class, and they may contribute disproportionately to the pessimistic side. Then again, Boudreaux and Perry do not specify whom they count as middle class. In any case, it’s the trend in the lines that matters. Despite the iPhones, airline tickets, laptops, and other consumer goods the authors mention, fewer people feel that they have enough money to live comfortably.

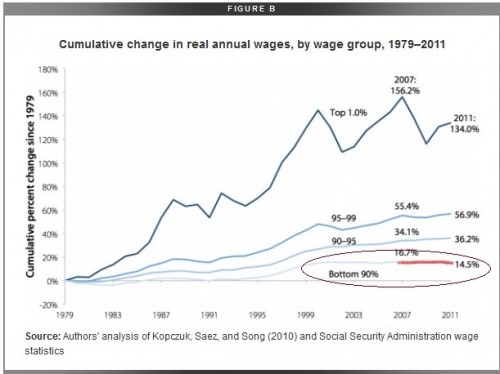

Boudreaux and Perry insist that middle-class stagnation is a myth, though they also say that

> The average hourly wage in real dollars has remained largely unchanged from at least 1964—when the Bureau of Labor Statistics (BLS) started reporting it.

Apparently “largely unchanged” is completely different from “stagnation.” But, as even the mainstream media have reported, some incomes have changed quite a bit (source).

The top 10%, and especially the top 1%, have done well in this century. The other 90%, not so much. You don’t have to be much of a Marxist to think that maybe the Wall Street Journal crowd has some ulterior motive in telling the middle class that all is well and getting better all the time.

---

Jay Livingston is the chair of the Sociology Department at Montclair State University. You can follow him at Montclair SocioBlog or on Twitter.