Dmitriy T.M. sent in a link to a 13-minute video in which Van Jones discusses the problem with patting ourselves on the back too much every time we put a plastic bottle in the recycling bin instead of the trash, and the need to recognize the link between environmental concerns and other social issues:

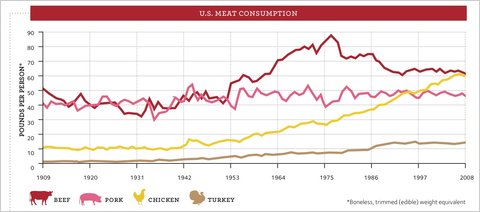

Also see our posts on the race between energy efficiency and consumption, exposure to environmental toxins and social class, race and exposure to toxic-release facilities, reframing the environmental movement, tracking garbage in the ocean, mountains of waste waiting to be recycled, framing anti-immigration as pro-environment, and conspicuous environmentalism.

Full transcript after the jump, thanks to thewhatifgirl.