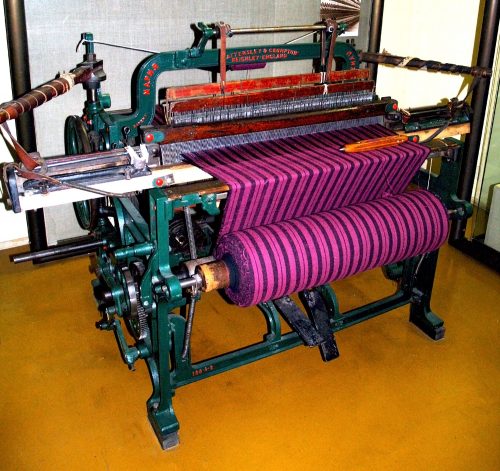

One of Amazon’s many revenue streams is a virtual labor marketplace called MTurk. It’s a platform for businesses to hire inexpensive, on-demand labor for simple ‘microtasks’ that resist automation for one reason or another. If a company needs data double-checked, images labeled, or surveys filled out, they can use the marketplace to offer per-task work to anyone willing to accept it. MTurk is short for Mechanical Turk, a reference to a famous hoax: an automaton which played chess but concealed a human making the moves.

One of Amazon’s many revenue streams is a virtual labor marketplace called MTurk. It’s a platform for businesses to hire inexpensive, on-demand labor for simple ‘microtasks’ that resist automation for one reason or another. If a company needs data double-checked, images labeled, or surveys filled out, they can use the marketplace to offer per-task work to anyone willing to accept it. MTurk is short for Mechanical Turk, a reference to a famous hoax: an automaton which played chess but concealed a human making the moves.

The name is thus tongue-in-cheek, and in a telling way; MTurk is a much-celebrated innovation that relies on human work taking place out of sight and out of mind. Businesses taking advantage of its extremely low costs are perhaps encouraged to forget or ignore the fact that humans are doing these rote tasks, often for pennies.

Jeff Bezos has described the microtasks of MTurk workers as “artificial artificial intelligence”; the norm being imitated is therefore that of machinery: efficient, cheap, standing in reserve, silent and obedient. MTurk’s name for its job offerings, “Human Intelligence Tasks,” further signals that simple, repetitive tasks requiring human intelligence are treated as anomalies in today’s workflows. The suggestion is that machines should be able to do these things, and that it is only a matter of time until they can. In some cases, MTurk workers are in fact labeling data for machine learning, and thus enabling the automation of their own work.