This is the complete version of a three-part essay that I posted in May, June, and July of this year:

Part I: Distributed Agency and the Myth of Autonomy

Part II: Disclosure (Damned If You Do, Damned If You Don’t)

Part III: Documentary Consciousness

Part I: Distributed Agency and the Myth of Autonomy

Last spring at TtW2012, a panel titled “Logging Off and Disconnection” considered how and why some people choose to restrict (or even terminate) their participation in digital social life, and in doing so raised the question: is it truly possible to log off? Taken together, the four talks by Jenny Davis (@Jup83), Jessica Roberts (@jessyrob), Laura Portwood-Stacer (@lportwoodstacer), and Jessica Vitak (@jvitak) suggested that, while most people express some degree of ambivalence about social media and other digital social technologies, the majority of digital social technology users find the burdens and anxieties of participating in digital social life to be vastly preferable to the burdens and anxieties that accompany not participating. The implied answer is therefore NO: though whether to use social media and digital social technologies remains a choice (in theory), the choice not to use these technologies is no longer a practicable option for a number of people.

In this essay, I first extend the “logging off” argument by considering that it may be technically impossible for anyone, even social media rejecters and abstainers, to disconnect completely from social media and other digital social technologies (to which I will refer throughout simply as ‘digital social technologies’). Consequently, decisions about our presence and participation in digital social life are made not only by us, but also by an expanding network of others. I then examine two prevailing privacy discourses—one championed by journalists and bloggers, the other championed by digital technology companies—to show that, although our connections to digital social technology are out of our hands, we still conceptualize privacy as a matter of individual choice and control. Clinging to the myth of individual autonomy, however, leads us to think about privacy in ways that mask both structural inequality and larger issues of power. Finally, I argue that the reality of inescapable connection and the impossible demands of prevailing privacy discourses have together resulted in what I term documentary consciousness, or the abstracted and internalized reproduction of others’ documentary vision. Documentary consciousness demands impossible disciplinary projects, and as such brings with it a gnawing disquietude; it is not uniformly distributed, but rests most heavily on those for whom (in the words of Foucault) “visibility is a trap.” I close by calling for new ways of thinking about both privacy and autonomy that more accurately reflect the ways power and identity intersect in augmented societies.

For the skeptical reader in particular, I want to begin by highlighting that the effects of neither participation nor non-participation in digital sociality are uniform or determined, and that both are likely to vary considerably across different social positions and contexts. An illustrative (if somewhat extreme) example is the elderly: Alexandra Samuels caricatures the absurdity of fretting over seniors who refuse online engagement, and my own socially active but offline-only grandmother makes a great case study in successful living without digital social technology. Though 84 years old, my grandmother is healthy and can get around independently; she lives in a seniors-only community of a few thousand adults (nearly all of whom are offline-only as well), and a number of her neighbors have become her good friends. She has several children, grandchildren, and great-grandchildren who live less than an hour away, and who call and visit regularly. As a financially stable retiree, she can say with confidence that there will be no job-hunting in her future; her surviving siblings still send letters, and her adult children print out digital family photos to show her. For these reasons and others, it would be hard to make the case that either she or any one of her similarly situated friends suffers from digital deprivation.

In contrast, the “Logging Off and Disconnection” panel highlights how the picture of offline-only living shifts if some of the other factors I list above change. Whereas my grandmother has a number of friends with whom she spends time (and who, like her, do not use digital social technologies), Davis describes the isolation that digital abstainers experience when many of the friends with whom they spend time do use digital social technologies. Much to their dismay, non-participating friends of social media enthusiasts in particular can find themselves excluded from both offline and online interaction within their own social groups. Similarly, Roberts finds that even 24 hours of “logging off” can be impossible for students if their close friends, family members, or significant others expect them to be constantly (digitally) available. In these contexts, it becomes difficult to refuse digital engagement without seeming also to refuse obligations of care.

Nor is what I will call abstention-related atrophy limited to relationships with friends and family members; professional relationships and even career trajectories can suffer as well. Vitak points out that, for job-seekers, the much-maligned proliferation of ‘weak ties’ that social media has been accused of fostering is a greater asset for gaining employment than is a smaller assortment of ‘strong ties.’ Modern life has become sufficiently saturated with social media to support use of what Portwood-Stacer calls its “conspicuous non-consumption” as a status marker: in the United States, where 96.7 percent of households have at least one television, “I’m not on Facebook” is the new “I don’t even own a TV.” That even a few people read the purposeful rejection of social media as a privilege signifier implicitly demonstrates the high cost of abstaining from social media.

Conversations about logging off or disconnecting have continued in the weeks since TtW2012. Most recently, PJ Rey (@pjrey) makes the case that social media is a non-optional system; because societies and technologies are always informing and affecting each other, “we can’t escape social media any more than we can escape society itself.” This means that the extent to which we can opt out is limited; we can choose not to use Facebook, for example, but we can no longer choose to live in a world in which no one else uses Facebook (whether for planning parties or organizing protests). As does Davis, Rey argues that “conscientious objectors of the digital age” therefore risk losing social capital in a number of ways.

I would like to suggest, however, that even those who are “secure enough” to quit social media and other digital social technologies cannot separate from them fully, nor can so-called “Facebook virgins” remain pure abstainers. Rejecters and abstainers continue to live within the same socio-technical system as adopters and everyone else, and therefore continue to affect and to be affected by digital social technology indirectly; they also continue to leave digital traces through the actions of other people. As I elaborate below, not connecting and not being connected are two very different things; we are always connected to digital social technologies, whether we are connecting to them or not. A number of digital social technology companies capitalize on this fact, and in so doing amplify the extent to which digital agency is increasingly distributed rather than individualized.

Below, I use Facebook as a familiar example to illustrate the near-impossibility of erasing digital traces of one’s self most generally. Many of the surveillance practices that follow here are not unique to Facebook, but the difficulty of achieving a full disengagement from Facebook can serve as an indicator of how much more difficult a full disengagement from all digital social technology would be. First, consider some of the issues that face people who actually have Facebook accounts (at minimum a username and password). Facebook has tracked its users’ web behavior even when they are logged out of Facebook; the “fixed” version of the site’s cookies still track potentially identifying information after users log out, and these same cookies are deployed whenever anyone (even a non-user) views a Facebook page. Last year, a 24-year-old law student named Max Schrems discovered that Facebook retains a wide array of user profile data that users themselves have deleted; Schrems subsequently launched 22 unique complaints, started an initiative called Europe vs. Facebook, and earned Facebook’s Ireland offices an audit.

In one particular complaint, Schrems alleges that Facebook not only retains data it should have deleted, but also builds “shadow profiles” of both users and non-users. These shadow profiles contain information that the profiled individuals themselves did not choose to share with Facebook. For a Facebook user, a shadow profile could include information about any pages she has viewed that have “Like” buttons on them, whether she has ever “Liked” anything or not. User and non-user shadow profiles alike contain what I call second-hand data, or information obtained about individuals through other individuals’ interactions with an app or website. Facebook harvests second-hand data about users’ friends, acquaintances, and associates when users synchronize their phones with Facebook, import their contact lists from other email or messaging accounts, or simply search Facebook for individual names or email addresses. In each case, Facebook acquires and curates information that pertains to individuals other than those from whom the information is obtained.

Second-hand data collection on and through Facebook is not limited to the creation of shadow profiles, however. As a recent article elaborated, Facebook’s current photo tagging system enables and encourages users to disclose a wealth of information not only about themselves, but also about the people they tag in posted photos. (Though not mentioned in the piece, the “tag suggestions” provided by Facebook’s facial recognition software have made photo tagging nearly effortless for users who post photos, while removing tags now involves a cumbersome five-click process for each tag that a pictured user wants removed.) Recall, too, that other companies collect second-hand data through Facebook each time a Facebook user authorizes a third-party app; by default, the third-party app can ‘see’ everything the user who authorized it can see, on each of that user’s friends’ profiles (the same holds true for games and for websites that allow users to log in with their Facebook accounts).

Those users who dig through Facebook’s privacy settings can prevent apps from accessing some of their information by repeating the tedious, time-consuming process required to block a specific app for each and every app that any one of their Facebook ‘friends’ might have authorized (though the irritation-price of doing so clearly aims to guide users away from this sort of behavior). Certain pieces of information, however—a user’s name, profile picture, gender, network memberships, username, user id, and ‘friends’ list—remain accessible to Facebook apps, no matter what; Facebook states that this makes one’s friends’ experiences on the site (if not one’s own) “better and more social.” Users do have the ‘nuclear option’ of turning off all apps, though this action means they cannot use apps themselves; their information also still remains available for collection through their friends’ other Facebook-related activities.

Facebook representatives have denied any wrongdoing, denied the existence of shadow profiles per se (though a former Facebook employee recently confirmed that the company builds “dark profiles” of non-users), and maintained that there is nothing non-standard about the company’s data collection practices. Nonetheless, even the possibility of shadow profiles raises a complicated question about where to draw the line between information that individuals ‘choose willingly’ to share (and are therefore responsible for assuming it will end up on the Internet), and “information that accumulates simply by existing.” The difficulty of making this determination reflects not only the tensions between prevailing privacy discourses, but also the growing ruptures between the ways in which we conceptualize privacy and the increasingly augmented world in which we live.

As a headline in The Atlantic put it recently, “On Facebook, Your Privacy is Your Friends’ Privacy”—but what does that mean? How should we weigh which of our friends’ desires against which of our own? How are we to anticipate the choices our friends might make, and on whom does the responsibility fall to choose correctly? The problem is that we tend to think of privacy as a matter of individual control and concern, even though privacy—however we define it—is now (and has always been) both enhanced and eroded by networks of others. In a society that places so much emphasis on radical individualism, we are ill-prepared to grapple with the rippling and often unintended consequences that our actions can have for others; we are similarly disinclined to look beyond the level of individual actions in asking why such consequences play out in the ways that they do.

‘Simply existing’ does generate more information than it did two generations ago, in part because so many different corporations and institutions are attempting to capitalize on the potentials for targeted data collection afforded by a growing number of digital technologies. At the same time, surveillance of individual behavior for commercial purposes is nothing new, and Facebook is hardly the only company building data profiles to which the profiled individuals themselves have incomplete access (if any access at all). What is comparatively new about Facebook-style surveillance in social media is the degree to which disclosure of our personal information has become a product not only of choices we make (knowingly or unknowingly), but also of choices made by our family members, friends, acquaintances, or professional contacts.

Put less eloquently: if Facebook were an STI, it would be one that you contract whenever any of your old classmates have unprotected sex. Even one’s own abstinence is no longer effective protection against catching another so-called ‘data double’ or “data self,” yet we still think about privacy and disclosure as matters of individual choice and responsibility. If your desire is to disconnect completely, the onus is on you to keep any and all information about yourself—even your name—from anyone who uses Facebook, or who might use anything like Facebook in the future.

If we dispense with digital dualism—the idea that the ‘virtual,’ ‘digital,’ or ‘online’ world is somehow separate and distinct from the ‘real,’ ‘physical,’ or ‘face to face’ world—it becomes apparent that not connecting to digital social technologies and not being connected to digital social technologies are two different things. Whether as a show of conspicuous non-consumption, an act of atonement and catharsis (as portrayed in Kelsey Brannan’s [@KelsBran] film Over & Out), or for other reasons entirely, we can choose to accept the social risks of deleting our social media profiles, dispensing with our gadgetry, and no longer connecting to others through digital means.

Yet whether we feel liberated, isolated, or smugly self-satisfied in doing so, we have not exited the ‘virtual world’; we remain situated within the same augmented reality, connected to each other and to the only world available through flows that are both physical and digital. I email photographs, my mother prints them out, and my grandmother hangs them in frames on her wall; a social media refuser meets her own searchable reflection in traces of book reviews, grant awards, department listings, and RateMyProfessors.com; a nearby friend sees you check in early at your office, and drops by to surprise you with much-needed coffee. A news story is broken and researched via Twitter, circulated in a newspaper, amplified by a TV documentary, and referenced in a book that someone writes about on a blog. Whether the interface at which we connect is screen, skin, or something else, the digital and physical spaces in which we live are always already enmeshed. Ceasing to connect at one particular type of interface does not change this.

In stating that connection is inescapable, I do not mean to suggest that all patterns of connection are equitable or equivalent in form, function, or impact. Connection does not operate independently of variables such as race, class, gender, ability, or sexual orientation; digital augmentation is not a panacea for oppression, and it has not eliminated, nor will it magically eliminate, social and structural inequality to birth a technoutopian future. My intent here in focusing on broader themes is not to diminish the importance of these differences, but to highlight three key points about digital social technology in an augmented world:

1.) First, our individual choices to use or reject particular digital social technologies are structured not only by cultural, economic, and technological factors, but also by our social, emotional, and professional ties to other people;

2.) Second, regardless of how much or how little we choose to use digital social technology, there are more digital traces of us than we are able to access or to remove;

3.) Third, even if we choose not to participate in digital social life ourselves, the participation of people we know still leaves digital traces of us. We are always connected to digital social technologies, whether we are connecting through them or not.

Part II: Disclosure (Damned If You Do, Damned If You Don’t)

So far I’ve argued that whether we leave digital traces is not a decision we can make autonomously, because our friends, acquaintances, and contacts also make these decisions for us. These traces are largely unavoidable, as we cannot escape being connected to digital social technologies any more than we can escape society itself. But do our current notions of privacy reflect this fact? I now consider two different privacy discourses to show that 1) although our connections to digital social technology are out of our hands, we still conceptualize privacy as a matter of individual choice and control, and 2) clinging to the myth of individual autonomy leads us to think about privacy in ways that mask both structural inequality and larger issues of power.

Inescapable connection notwithstanding, we still largely conceptualize disclosure as an individual choice, and privacy as a personal responsibility. This is particularly unsurprising in the United States, where an obsession with self-determination is foundational not only to the radical individualism that increasingly characterizes American culture, but also to much of our national mythology (to let go of the ‘autonomous individual’ would be to relinquish the “bootstrap” narrative, the mirage of meritocracy, and the shaky belief that bad things don’t happen to good people, among other things).

Though the intersection of digital interaction and personal information is hardly localized to the United States, major digital social technology companies such as Facebook and Google are headquartered in the U.S.; perhaps relatedly, the two primary discourses of privacy within that intersection share a good deal of underlying ideology with U.S. national mythology. The first of these discourses centers on a paradigm that I’ll call Shame On You, and spotlights issues of privacy and agency; the second centers on a paradigm that I’ll call Look At Me, and spotlights issues of privacy and identity.

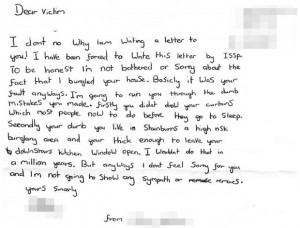

“You shouldn’t have put it on the Internet, stupid!” Within the Shame On You paradigm, the control of personal information and the protection of personal privacy are not just individual responsibilities, but also moral obligations. Choosing to disclose is at best a risk and a liability; at worst, it is the moment we bring upon ourselves any unwanted social, emotional, or economic impacts that will stem (at any point, and in any way) from either an intended or an unintended audience’s access to something we have made digitally available. Disclosure is framed as an individual choice, though we need not choose intentionally or even knowingly; it can be the choice to disclose information, the choice to make incorrect assumptions about to whom information is (or will be) accessible, or the choice to remain ignorant of what, when, by whom, how, and to what end that information can be made accessible.

A privacy violation is therefore ultimately a failure of vigilance, a failure of prescience; it redefines as disclosure the instant in which we should have known better, regardless of what it is we should have known. Accordingly, the greatest shame in compromised privacy is not what is exposed, but the fact of exposure itself. We judge people less for showing women their genitals, and more for being reckless enough to get caught doing so on Twitter.

Shame On You was showcased most recently in the commentary surrounding the controversial iPhone app “Girls Around Me,” which used a combination of public Google Maps data, public Foursquare check-ins, and ‘publicly available’[i] Facebook information to create a display of nearby women. The creators of Girls Around Me claimed their so-called “creepy” app was being targeted as a scapegoat, and insisted that the app could just as well be used to locate men instead of women. Nonetheless, the creators’ use of the diminutive term “girls” rather than the more accurate term “women” exemplifies the sexism and the objectification of women on which the app was designed to capitalize. (If the app’s graphic design somehow failed to make this clear, see also one developer’s comments about using Girls Around Me to “[avoid] ugly women on a night out.”)

The telling use of “girls” seemed to pass uncommented upon, however, and most accounts of the controversy (with few exceptions) omitted gender and power dynamics from the discussion—as well as “society, norms, politics, values and everything else confusing about the analogue world.” The result was a powerful but unexamined synergy between Shame On You and the politics of sex and visibility, one that cast as transgressors women who had dared not only to go out in public, but to publicly declare where they had gone.

Women and men alike uncritically reproduced the app’s demeaning language by referring to “girls” throughout their commentaries; many argued that the app was not the problem, and insisted furthermore that its developers had done nothing wrong. The blogger who broke the Girls Around Me story explicitly states that “the real problem” is not the app (which was made by “guys” who are “super nice,” and which was “meant to be all in good fun”), but rather people who, “out of ignorance, apathy or laziness,” do not opt out of allowing strangers to view their information. He and a number of others hope Girls Around Me will serve as “a wake up call” to “girls who have failed to lock down their info” and to “those who publicly overshare,” since “we all have a responsibility to protect our own privacy” instead of leaving “all our information out there waving in the wind.”

Another blogger admonishes that, “the only way to really stay off the grid is to never sign up for these services in the first place. Failing that, you really should take your online privacy seriously. After all, Facebook isn’t going to help you, as the more you share, the more valuable you are to its real customers, the advertisers. You really need to take responsibility for yourself.” Still another argues that conventional standards of morality and behavior no longer apply to digitally mediated actions, because “publishing anything publicly online means you have, in fact, opted in”—to any and every conceivable (or inconceivable) use of whatever one might have made available. The pervasive tone of moral superiority, both in these articles and in others like them, proclaims loudly and clearly: Shame On You—for being foolish, ignorant, careless, naïve, or (worst of all) deliberately choosing to put yourself on digital display.

Accounts such as these serve not only to deflect attention away from problems like sexism and objectification, but to normalize and naturalize a veritable litany of questionable phenomena: pervasive surveillance, predatory data collection, targeted ads, deliberately obtuse privacy policies, onerous opt-out procedures, neoliberal self-interest, the expanding power of social media companies, the repackaging of users as products, and the simultaneous monetization and commoditization of information, to name just a few. These accounts perpetuate the myth that we are all autonomous individuals, isolated and distinct, endowed with indomitable agency and afforded infinite arrays of choices.

With other variables obfuscated or reduced to the background noise of normalcy, the only things left to blame for unanticipated or unwanted outcomes–or for our disquietude at observing such outcomes–are those individuals who choose to expose themselves in the first place. Of course corporations try to coerce us into “putting out” information, and of course they will take anything they can get if we are not careful; this is just their nature. It is up to us to be good users, to keep telling them no, to remain vigilant and distrustful (even if we like them), and never to let them go all the way. We are to keep the aspirin between our knees, and our data to ourselves.

Shame On You extends beyond disclosure to corporations, and—for all its implicit digital dualism—beyond digitally mediated disclosure as well. Case in point: during the course of writing this essay, I received a mass-emailed “Community Alert Bulletin” in which the Chief of Police at my university campus warned of “suspicious activity.” On several occasions, it seems, two men have been seen “roaming the library;” one of them typically “acts as a look out,” while the other approaches a woman who is sitting or studying by herself. What does one man do while the other keeps watch? He “engages” the woman, and “asks for personal information.” The “exact intent or motives of the subjects” is unknown, but the ‘Safety Reminders’ at the end of the message instruct, “Never provide personal information to anyone you do not know or trust.”

If ill-advised disclosure were always this simple—suspicious people asking us outright to reveal information about ourselves—perhaps the moral mandate of Shame On You would seem slightly less ridiculous. As it stands, holding individuals primarily responsible for violations of their own privacy expands the operative definition of “disclosure” to absurd extremes. If we post potentially discrediting photos of ourselves, we are guilty of disclosure through posting; if friends (or former lovers) post discrediting photos of us, we are guilty of disclosure through allowing ourselves to be photographed; if we did not know that we were being photographed, we are guilty of disclosure through our failure to assume that we would be photographed and to alter our actions accordingly. If we do not want Facebook to have our names or our phone numbers, we should terminate our friendships with Facebook users, forcibly erase our contact information from those users’ phones, and thereafter give false information to any suspected Facebook users we might encounter. This is untenable, to say the least. The inescapable connection of life in an augmented world means that exclusive control of our personal information, as well as full protection of our personal privacy, is quite simply out of our personal hands.

* * * * *

The second paradigm, Look At Me, at first seems to represent a competing discourse. Its most vocal proponents are executives at social- and other digital media companies, along with assorted technologists and other Silicon Valley ‘digerati’. This paradigm looks at you askance not for putting information on the Internet, but for forgetting that “information wants to be free”—because within this paradigm, disclosure is the new moral imperative. Disclosure is no longer an action that disrupts the guarded default state, but the default state itself; it is not something one chooses or does, but something one is, something one has always-already done. Privacy, on the other hand, is a homespun relic of a bygone era, as droll as notions of a flat earth; it is particularly impractical in the 21st century. After all, who really owns a friendship? “Who owns your face if you go out in public?”

Called both “openness” and “radical transparency,” disclosure-by-default is touted as a social and political panacea; it will promote kindness and tolerance toward others, fuel progress and innovation, create accountability, and bring everyone closer to a better world. Alternatively, clinging to privacy will merely harbor evil people and condemn us to “generic relationships.” The enlightened “don’t believe that privacy is a real issue,” and anyone who maintains otherwise is suspect; as even WikiLeaks activist Jacob Appelbaum (@ioerror) has lamented, privacy is cast “as something that only criminals would want.” Our greatest failings are no longer what we do or what we choose, but who we are; our greatest shame is not in exposure, but in having or being something to hide.

Look At Me has benefitted, to some degree, from a synergy of its own: “openness,” broadly conceived, has gained traction as more ‘open’ movements and initiatives attract attention and build popular support. In 1998, the Open Source Initiative was the first to claim ‘open’ as a moniker; it is now joined by what one writer has called an “open science revolt,” by not one, but two Open Science movements, by President Obama’s Open Government Initiative, by the somewhat meta Open Movements movement, and by a number of other initiatives aimed at reforming academic research and publishing.

“Transparency,” too, has been popularized into a buzzword (try web-searching “greater transparency,” with quotes). In an age of what Jurgenson and Rey (@nathanjurgenson and @pjrey) have called liquid politics, people demand transparency from institutions in the hope that exposure will encourage the powerful to be honest; in return, institutions offer cryptic policy documents, unreadable reports, and “rabbit hole[s] of links” as transparency simulacra. Yet we continue to push for transparency from corporations and governments alike, which suggests that, to some degree, we do believe in transparency as a means to progressive ends. Perhaps it is not such a stretch, then, for social media companies (and others) to ask that we accept radical transparency for ourselves as well?

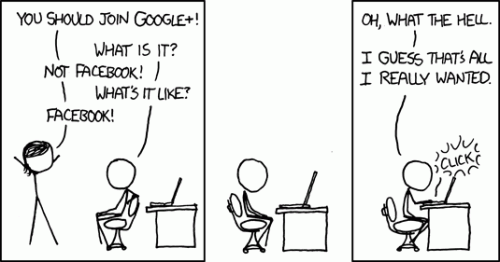

The 2011 launch of social network-cum-“identity service” Google+ served to test this theory, but the protracted “#nymwars” controversy that followed seemed not to be the result that Google had anticipated. Although G+ had been positioned as ‘the anti-Facebook’ in advance of its highly anticipated beta launch, within four weeks of going live Google decided not only to enforce a strict ‘real names only’ policy, but to do so through a “massive deletion spree” that quickly grew into a public relations debacle. The mantra in Silicon Valley is, “Google doesn’t get social,” and this time Google managed to (as one blogger put it) “out-zuck the Zuck.” Though sparked by G+, #nymwars did not remain confined to G+ in its scope; nor were older battle lines around privacy and anonymity redrawn for this particular occasion.

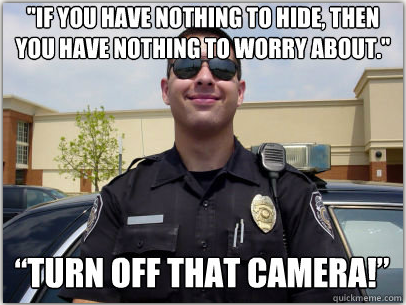

On one side of the conflagration, rival data giants Google and Facebook both pushed their versions of ‘personal radical transparency’; they were more-or-less supported by a loose assortment of individuals who either had “nothing to hide,” or who preached the fallacy that, ‘if you have nothing to hide, you have nothing to fear.’ The ‘nothing to hide’ argument in particular has been a perennial favorite for Google; well before the advent of G+ and #nymwars, CEO Eric Schmidt rebuked, “If you have something that you don’t want anyone to know, maybe you shouldn’t be doing it in the first place.” Unsurprisingly, Schmidt’s dismissive response to G+ users with privacy concerns was a smug, “G+ is completely optional. No one is forcing you [to] use it.” If G+ is supposed to be an ‘identity service,’ it seems only some identities are worthy of being served.

On the other side was a ‘vocal minority’ who, despite their purportedly fewer numbers, still seemed to generate most of the substantive digital content about #nymwars[ii]. The pro-pseudonym-and/or-privacy camp included the Electronic Frontier Foundation (@EFF), a new website called My Name Is Me, researcher danah boyd (@zephoria), pseudonymity advocate and former Google employee Skud (@Skud, ‘Kirrily Robert’ on her passport at the time and ‘Alex Skud Bayley’ on it now), security technologist Bruce Schneier (@schneierblog, who had argued previously against Schmidt’s view of privacy), the hacker group Anonymous (unsurprisingly), and a substantive minority of Google employees (or “Googlers”). There was a long list of pseudonymous and ‘real named’ users who had been kicked off the site, an irate individual banned because of his “unusual” real name, and an individual with an unremarkable name who couldn’t seem to get banned no matter how hard he tried.

Digital dualists took issue, too; one declared the ‘real names’ policy to be “a radical departure from the way identity and speech interact in the real world,” while another came to the conclusion that, “The real world is often messy, chaotic and anonymous. The Internet is mostly better that way, too.” And in what may be one of my favorite #nymwars posts, a sixteen-year-old blogger took on the absurdity of equating not only ‘real name’ with ‘known as,’ but also ‘network’ with ‘community,’ and then went on to argue that Shakespeare’s Juliet had it right in asking, “What’s in a name?” whereas Google’s Eric Schmidt sounded “shockingly similar” to the head of “the evil, Voldemort-controlled Ministry of Magic” in J. K. Rowling’s final Harry Potter book.

Issues of power are conspicuously absent from Look At Me, and Google makes use of this fact to gloss the profound difference between individuals pressuring a company to be transparent and a company pressuring (or forcing) individuals to be transparent. Google is transparent, for example, in its ad targeting, but allowing users either to opt-out of targeted advertising or to edit the information used in targeting them does not change the fact that Google users are being tracked, and that information about them is being collected. This kind of ‘transparency’ offers users not empowerment, but what Davis (@Jup83) calls “selective visibility”: users can reduce their awareness of being tracked by Google, but can do little (short of complete Google abstention) to stop the tracking itself.

Such ‘transparency’ therefore has little effect on Google in practice; with plenty more fish in the sea (and hundreds of millions of userfish already in its nets), Google has little incentive to care whether any particular user does or does not continue to use Google products. Individual users, on the other hand, can find quitting Google to be a right pain, and this imbalance in the Google/user relationship effectively gives carte blanche to Google policymakers. This is especially problematic with respect to transparency, which has a far greater impact on individual Google users than it does on Google itself.

Google may have ‘corporate personhood’, but persons have identities; in the present moment, many identities still serve to mark people for discrimination, oppression, and persecution, whether they are “evil” or not. Look At Me claims personal radical transparency will solve these problems, yet social media spaces are neither digital utopias nor separate worlds; all regular “*-isms” still apply, and real names don’t stop bullies or trolls in digital spaces any more than they do in conventional spaces. At the height of irony, even ‘real-named’ G+ users who supported Google’s policy still trolled other users who supported pseudonyms.

boyd offers a different assessment of ‘real names’ policies, one that stands in stark contrast to the doctrine of radical transparency. She argues that, far from being empowering, ‘real name’ policies are “an authoritarian assertion of power over vulnerable people.” Geek Feminism hosts an exhaustive list of the many groups and individuals who can be vulnerable in this way, and Audrey Watters (@audreywatters) explains that many people in positions of power do not hesitate to translate their disapproval of even ordinary activities into adverse hiring and firing decisions. Though tech CEOs call for radical transparency, a senior faculty member (for example) condemns would-be junior faculty whose annoying “quirks” turn up online instead of remaining “stifle[d]” and “hidden” during the interview process. As employers begin not only to Google-search job applicants, but also to demand Facebook passwords, belonging to what Erving Goffman calls a “discreditable group” carries not just social but economic consequences.

Rey points out that there are big differences between cyber-libertarianism and cyber-anarchism; if “information” does, in fact, “want to be free,” it does not always “want” to be free for the same reasons. ‘Openness’ does not neutralize preexisting inequality (as the Open Source movement itself demonstrates), whereas forced transparency can be a form of outing with dubious efficacy for encouraging either tolerance or accountability. As boyd, Watters, and Geek Feminism demonstrate, those who call most loudly for radical transparency are neither those who will supposedly receive the greatest benefit from it, nor those who will pay its greatest price. Though some (economically secure, middle-aged, heterosexual, able-bodied) white men acknowledged their privilege and tried to educate others like them about why pseudonyms are important, the loudest calls for ‘real names’ were ultimately the “most privileged and powerful” calling for the increased exposure of marginalized others. Maybe the full realization of an egalitarian utopia would lead more people to choose ‘openness’ or ‘transparency’, or maybe it wouldn’t. But strong-arming more people into ‘openness’ or ‘transparency’ certainly will not lead to an egalitarian utopia; it will only exacerbate existing oppressions.

The #nymwars arguments about ‘personal radical transparency’ reveal that Shame On You and Look At Me are at least as complementary as they are competing. Both preach in the same superior, moralizing tone, even as one lectures about reckless disclosure and the other levels accusations of suspicious concealment. Both would agree to place all blame squarely on the shoulders of individual users, if only they could agree on what is blameworthy. Both serve to naturalize and to normalize the same set of problematic practices, from pervasive surveillance to the commoditization of information.

Most importantly, both turn a blind eye to issues of inequality, identity, and power, and in so doing gloss distinctions that are of critical importance. If these two discourses are as much in cahoots as they are in conflict, what is the composite picture of how we think about privacy, choice, and disclosure? What is the impact of most social media users embracing one paradigm, and most social media designers embracing the other?

Part III: Documentary Consciousness

I now consider one of the many impacts that follow from being inescapably connected in a society that still masks issues of power and inequality through conceptualizations of ‘privacy’ as an individual choice. I argue that the reality of inescapable connection and the impossible demands of prevailing privacy discourses have together resulted in what I term documentary consciousness, or the abstracted and internalized reproduction of others’ documentary vision. Documentary consciousness demands impossible disciplinary projects, and as such brings with it a gnawing disquietude; it is not uniformly distributed, but rests most heavily on those for whom (in the words of Foucault) “visibility is a trap.” I close by calling for new ways of thinking about both privacy and autonomy that more accurately reflect the ways power and identity intersect in augmented societies.

Just before the turn of the 19th century, Jeremy Bentham designed a prison he called the Panopticon. The idea behind the prison’s circular structure was simple: a guard in a central tower could see into any of the prisoners’ cells at any given time, but no prisoner could ever see into the tower. The prisoners would therefore be subordinated by this asymmetrical gaze: because they would always know that they could be watched, but would never know if they were being watched, the prisoners would be forced to act at all times as if they were being watched, whether they were being watched or not. In contemporary parlance, Bentham’s Panopticon basically sought to crowd-source the labor of monitoring prisoners to the prisoners themselves.

Though Bentham’s Panopticon itself was never built, Michel Foucault used Bentham’s design to build his own concept of panopticism. For Foucault, the Panopticon represents not power wielded by particular individuals through brute force, but power abstracted to the subtle and ideal form of a power relation. The Panopticon itself is a mechanism not just of power, but of disciplinary power; this kind of power works in prisons, in other types of institutions, and in modern societies generally because citizens and prisoners alike, aware at all times that they could be under surveillance, internalize the panoptic gaze and reproduce the watcher/watched relation within themselves by closely monitoring and controlling their own conduct. Discipline (and other technologies of power) therefore produce docile bodies, as individuals self-regulate by acting on their own minds, bodies, and conduct through technologies of the self.

Foucault famously held that “visibility is a trap”; were he alive today, it is unlikely Foucault would be on board with radical transparency. Accordingly, it has become well-worn analytic territory to critique social media and digital social technologies by linking to Foucault’s panoptic surveillance. As early as 1990, Mark Poster argued that databases of digitalized information constituted what he termed the “Superpanopticon.” Since then, others have pointed out that “[even] minutiae can create the Superpanopticon”—and it would be difficult to argue that social media websites don’t help to circulate a lot of minutiae.

Facebook itself has been likened to a digital panopticon since at least 2007, though there are issues both with some of these critiques and with responses to them. The “Facebook=Panopticon” theme emerges anew each time the site redesigns its privacy settings—for instance, following the launch of so-called “frictionless sharing”—or, in more recent Facebook-speak, every time the site redesigns its “visibility settings.” Others express skepticism, however, as to whether the social media site is really what I jokingly like to call “the Panoptibook”; they claim that Facebook’s goal is supposedly “not to discipline us,” but to coerce us into reckless sharing (though I would argue that the latter is merely an instance of the former).

Others point to the fact that using Facebook is still “largely voluntary”; therefore, it cannot be the Panopticon. ‘Voluntary,’ however, is predicated on the notion of free choice, and as I argued in Part I, our choices here are constrained; at best, each of us can only choose whether or not to interact with Facebook (or other digital social technologies) directly. Infinitely expanding definitions of ‘disclosure’ notwithstanding, whether we leave digital traces on Facebook depends not just on the choices we make as individuals, but on the choices made by people to whom we are connected in any way. For most of us, this means that leaving traces on Facebook is largely inevitable, whether “voluntary” or not.

What may not be as readily apparent is that whether or not we interact with the site directly, Facebook and other social media sites also leave traces on us. Nathan Jurgenson (@nathanjurgenson) describes “documentary vision” as an augmented version of the photographer’s ‘camera eye,’ one through which the infinite opportunity for self-documentation afforded by social media leads us not only to view the world in terms of its documentary potential, but also to experience our own present “as always a future past.” In this way, “the logic of Facebook” affects us most profoundly not when we are using Facebook, but when we are doing nearly anything else. Away from our screens, the experiences we choose and the ways we experience them are inexorably colored not only by the ways we imagine they could be read by others in artifact form, but by the range of idealized documentary artifacts we imagine we could create from them. We see and shape our moments based on the stories we might tell about them, on the future histories they could become.

I argue here, however, that Facebook’s phenomenological impact is not limited to opportunities for self-documentation. More and more, we are attuned not only to possibilities of documenting, but also to possibilities of being documented. As I explored in Part I of this essay, living in an augmented world means that we are always connected to digital social technologies (whether we are connecting to them or not). As I elaborated in Part II, the Shame On You paradigm reminds us that virtually any moment can be a future past disclosure; we also know that social media and digital social technologies are structured by the Look At Me paradigm, which insists that “any data that can be shared, WILL be shared.” Consequently, if augmented reality has seen the emergence of a new kind of ‘camera eye’, it has seen as well the emergence of a new kind of camera shyness. Much as Bentham designed the Panopticon to crowd-source the disciplinary work of prison guards, Facebook’s design ends up crowd-sourcing the disciplinary functions of the paparazzi.

Accordingly, I extend Jurgenson’s concept of documentary vision (through which we are simultaneously documenting subjects and documented objects, perpetually aware of each moment’s documentary potential) into what I term documentary consciousness, or the perpetual awareness that, at each moment, we are potentially the documented objects of others. I want to be clear that it is not Facebook itself that is “the Panoptibook”; knowing something about nearly everyone is not nearly the same thing as seeing everything about anyone. Moreover, what Facebook ‘knows’ comes not only from what it ‘sees’ of our actions online, but also from the online and offline actions of our family members, friends, and acquaintances. Our loved ones (and our “liked” ones, and untold scores of strangers) are at least as much the guard in the Panopticon tower as is Facebook itself, if not more so. As a result, we are now subjected to a second-order asymmetrical gaze: we can never know with certainty whether we are within the field of someone else’s documentary vision, and we can never know when, where, by whom, or to what end any documentary artifacts created of us will be viewed.

As Erving Goffman elaborated more than 50 years ago, we all take on different roles in different social contexts. For Goffman, this represents not “a lack of integrity,” but the work each of us does, and is expected to do, in order to make social interaction function. In fact, it is those individuals who refuse to play appropriate roles, or whose behavior deviates from what others expect based on the situation at hand, who lose face and tarnish their credibility with others. The context collapse designed into most social media therefore complicates profoundly even the purposeful, asynchronous self-presentation that takes place on such websites, which has come to require “laborious practices of protection, maintenance, and care.”

When we internalize the abstracted and compounded documentary gaze, we are left with a Sisyphean disciplinary task: we become obligated to consider not just the situation at hand, and not just the audiences we choose for the documentary artifacts we create, but also every future audience for every documentary artifact created by anyone else. It is no longer enough to play the appropriate social role in the present moment; it is no longer enough to craft and curate images of selves we dream of becoming, selves who will have lived idealized versions of our near and distant pasts. Applying technologies of self starts to bring less pleasure and more resignation, as documentary consciousness stirs a subtle but persistent disquietude; documentary consciousness haunts us with the knowledge that we cannot be all things, at all times, to all of the others that we (or our artifacts) will ever encounter. Documentary consciousness entails the ever-present sense of a looming future failure.

As I discussed in Part II, the impacts of these inevitable failures are not evenly distributed. Those who have the most to lose are not people who are “evil” or who are “doing something they shouldn’t be doing,” but people who live ordinary lives within marginalized groups. Those who have the most to gain, on the other hand, are people who are already among the most privileged, and corporations that already wield a great deal of power. The greatest burdens of documentary consciousness itself are therefore likely to be carried by people who are already carrying other burdens of social inequality.

Recent attention to a website called WeKnowWhatYoureDoing.com showcased much of this yet again. The speaker behind the “we” is a white, 18-year-old British man named Callum Haywood (who’s economically privileged enough to own an array of computer and networking hardware), who built a site that aggregates potentially incriminating Facebook status updates and showcases them with the names and photos of the people who posted them. Because all the data used is “publicly accessible via the Graph API,” Haywood states in a disclaimer on the site that he “cannot be held responsible for any persons [sic] actions” as a result of using what he terms “this experiment”; he has further stated that his site (which is subtitled, “…and we think you should stop”) is intended to be “a learning tool” for those users who have failed to “properly [understand] their privacy options” on Facebook.

Coverage of the site’s rapid rise to popularity (or at least high visibility) was similar to coverage surrounding Girls Around Me: a lot of Shame On You, and the occasional critique that stopped at “creepy.” Tech-savvy white men thought the site was great; a young white woman starting college at Yale this fall explained that her digital cohort (“the youngest millennials, the real Facebook Generation”) has learned from the mistakes of “those who are ten years older than us.” As a result, her generation thinks Facebook’s privacy settings are easy, obvious, and normal; if your mileage has varied, “you have no one to blame but yourself.” In examining the screenshots from WeKnowWhatYoureDoing.com that these articles feature, I have yet to find one featured Facebook user who writes like a Yale-bound preparatory school graduate; unlike the articles’ authors, the majority of featured Facebook users in these screenshots are people of color. It is hard to see WeKnowWhatYoureDoing.com as doing anything other than offering self-satisfied amusement to privileged people, at the acknowledged potential expense of less privileged people’s employment.

Even overlooking the facts that Facebook’s privacy policy may be “more confusing and harder to understand than the small print coming from credit card companies,” and that the data in Facebook’s Graph API is really only “publicly accessible” in reference to a ‘public’ composed entirely of software developers, the story Haywood wants to tell with WeKnowWhatYoureDoing.com is fundamentally flawed. It is a story in which “people violate their own privacies on a regular basis,” in a world where digital surveillance, companies like Facebook, and smug, self-important software developers fade into the background of the setting’s ‘natural’ world. Haywood states, “[p]eople have lost their jobs in the past due to some of the posts they put on Facebook, so maybe this demonstrates why”; what he seems to be missing is that his site demonstrates not only “why,” but how people come to lose their jobs.

In pretending that “information wants to be free” and holding individuals responsible for violations of their own privacy, we neglect to consider the responsibility of other individuals who write code for companies like Facebook, or who use the data available through the Graph API, or who circulate Facebook data more widely, or who help Facebook generate and collect data (yes, even by tagging their friends in photographs). If we cannot control our own privacy, it is because we can so easily impact the privacy of everyone we know—and even of people we don’t know.

We urgently need to rethink ‘privacy’ in ways that expand beyond the level of individual conditions, obligations, or responsibilities, yet also take into account differing intersections of visibility, context, and identity in an unequal but intricately connected society. And we need as well to turn much of our thinking about privacy and individual autonomy on its head. Due justice to questions of who is visible, and to whom, to what end, and to what effect cannot be done so long as we continue to believe that privacy and visibility are simply neutral consequences of individual choices, or that such choices are individual moral responsibilities.

We must reconceptualize privacy as a collective condition, one that entails more than simply ‘lack of visibility’; privacy must be also a collective responsibility, one that individuals and institutions alike honor in ways that go beyond ‘opting out’ of the near-ubiquitous state and corporate surveillance we seem to take for granted. It is time to stop critiquing the visible minutiae of individual lives and choices, and to start asking critical questions about who is looking, and why, and what happens next.

Private browsing image from http://appadvice.com/appnn/2011/05/apple-tackles-set-privacy-concerns-newest-published-patent

Performance image by Neil Girling, http://www.theblight.net. Used with permission.

Modernized Rockwell image by William George Wadman, from http://fadedandblurred.com/blog/great-art-for-a-great-cause/

Shadow image by yalayama, from http://braingasmic.tumblr.com/post/22348601967/how-pcbs-promote-dendrite-growth-may-increase-autism

Kids image from http://www.eyesonbullying.org/about.html

Houdini image from http://www.thestar.com/article/914083–houdini-s-inescapable-influence

Baby bathtub image from http://www.mommyshorts.com/2011/11/caption-contest.html

Peeping bathroom image from http://gweedopig.com/index.php/2009/11/03/peeping-tom-hides-video-camera-in-christian-store-bathroom/

Dear Victim image from http://news.nationalpost.com/2011/11/24/let-me-run-through-your-dumb-mistakes-teen-burglars-apology-letter-to-victims/

Pushpin image from http://www.pinewswire.net/article/to-warrant-or-not-to-warrant-aclu-police-clash-over-cellphone-location-data/

Don’t rape sign image from http://higherunlearning.files.wordpress.com/2012/05/dsc7600r.jpg?w=580&h=510

Anti-woman image from http://www.feroniaproject.org/fired-for-using-contraception-maybe-in-arizona/

Naked wedding photo from http://www.mccullagh.org/photo/1ds-10/burning-man-cathedral-wedding

Cop image from http://www.quickmeme.com/meme/6ajf/

G+ vs. Facebook comic by Randall Munroe, from xkcd: http://xkcd.com/918/

Transparency word cluster from http://digiphile.wordpress.com/2010/03/28/transparency-camp-2010-government-transparency-open-data-and-coffee/

Transparent sea creature image from http://halfelf.org/2012/risk-vs-transparency/

Transparent grenade image from http://www.creativeapplications.net/objects/the-transparency-grenade-by-julian-oliver-design-fiction-for-leaking-data/

Transparency and toast image from http://time2morph.files.wordpress.com/2012/01/transparent-toaster.jpg?w=300&h=234

Looming storm photo by Whitney Erin Boesel. Used with permission.

Digital eye image from http://www.indypendent.org/2012/04/28/facebook-lobbies-washington-spying-users

Store surveillance image from http://mastersofmedia.hum.uva.nl/2010/10/30/facebook-open-minded-panopticon/

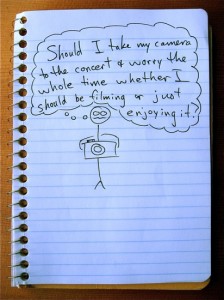

Stick figure comic by Luke Simpson, from http://www.stickworldcomics.com/comics/2009/11/

Camera shy photo by M1L4N, from http://m1l4n.com/camerashy/

Impossible task image by Hyeyoung Kim, from http://www.co-mag.net/2008/dark-word-hyeyoung-kim/

Facebook traffic sign photo from http://epicfails.net/2011/10/facebook-fail/

Facebook gunshot image from http://www.buzzingup.com/2010/09/facebook-is-infected-by-the-failwhale/

New look image by Kyle Froman from http://www.artsjournal.com/tobias/2011/10/starting_over.html

[i] Note that “publicly available” is tricky here: Girls Around Me users were ported to Facebook to view the women’s profiles, and so could also have accessed non-public information if they happened to view profiles of women with whom they had friends or networks in common.

[ii] Alternatively, my difficulty finding posts written in support of Google’s policy may simply reflect Google’s ‘personalization’ of my search results, both then and now.

Comments 24

Race, Class, App.net: The Beginning of ‘White Flight’ from Facebook & Twitter? » Cyborgology — August 9, 2012

[...] mom’s on Twitter, too), and there’s even a growing chance your grandma is on Facebook (though I admit that mine isn’t). Facebook has become so quotidian—some would even say pedestrian—that as Laura Portwood-Stacer [...]

Is App.net The Beginning Of ‘White Flight’ From Facebook And Twitter? | Test — August 10, 2012

[...] on Twitter, too), and there’s even a growing chance your grandma is on Facebook (though I admit that mine isn’t). Facebook has become so quotidian — some would even say pedestrian — that as Laura [...]

Is App.net The Beginning Of ‘White Flight’ From Facebook And Twitter? | LED World — August 11, 2012

[...] on Twitter, too), and there’s even a growing chance your grandma is on Facebook (though I admit that mine isn’t). Facebook has become so quotidian — some would even say pedestrian — that as Laura [...]

A New Privacy: Full Essay (Parts I, II, and III) » Cyborgology | Sui Generis Net | Scoop.it — August 12, 2012

[...] Last spring at TtW2012, a panel titled “Logging off and Disconnection” considered how and why some people choose to restrict (or even terminate) their participation in digital social life—and in doing so raised the question, is it truly possible to log off? Taken together, the four talks by Jenny Davis (@Jup83), Jessica Roberts (@jessyrob), Laura Portwood-Stacer (@lportwoodstacer), and Jessica Vitak (@jvitak) suggested that, while most people express some degree of ambivalence about social media and other digital social technologies, the majority of digital social technology users find the burdens and anxieties of participating in digital social life to be vastly preferable to the burdens and anxieties that accompany not participating. The implied answer is therefore NO: though whether to use social media and digital social technologies remains a choice (in theory), the choice not to use these technologies is no longer a practicable option for number of people. [...]

Is App.net The Beginning Of ‘White Flight’ From Facebook And Twitter? « gregorylnewton — August 12, 2012

[...] on Twitter, too), and there’s even a growing chance your grandma is on Facebook (though I admit that mine isn’t). Facebook has become so quotidian — some would even say pedestrian — that as Laura [...]

Refusing the Refusenicks Paradigm » Cyborgology — October 15, 2012

[...] sites is carried around with social media users almost all the time. Thus, as Whitney Erin Boesel states, it may be technically impossible for anyone, even social media rejecters and abstainers, to [...]

What Would Facebook Be Like Without Quantification? » Cyborgology — November 25, 2012

[...] He further posits that “the site’s relentless focus on quantity leads us to continually measure the value of our social connections within metric terms, and this metricated viewpoint may have consequences on how we act within the system.” I’d take this one step further, and argue that if Facebook’s ‘quantification fetish’ influences how we act within the context of Facebook (and it probably does), then it also influences how we act outside the context of Facebook. (Recall that social media has an affect on our experiences of being in the world even when we’re not using it, and even if we ourselves don’t use it at all.) [...]

Let Sleeping Memories Lie: High School and the Facebookless Past » Cyborgology — November 29, 2012

[...] can delete and untag and re-edit all I want, but anyone with access (remember, access to my profile is not entirely within my control) can download images, and can take screen shots, and can save these things, and can launch them [...]

Origins of the Augmented Subject » Cyborgology — January 15, 2013

[...] Accordingly, mirrors are not inert. As the tale of Narcissus and Echo makes plain, a mirror can shape the behavior of the subject who stands before it. A mirror has certain affordances, and these affordances change depending on where the mirror is placed, or on who comes to stand in front of it. Insofar as “the mirror” serves as a metaphor for social media in particular, it may constitute a subject who has both what Jurgenson calls “documentary vision” (or a “Facebook eye”) and what Whitney Erin Boesel calls “documentary consciousness.” [...]

A Big Fat Fakebook Wedding » Cyborgology — January 24, 2013

[...] it. This highlights some of the reasons we need to rethink our conceptualizations of privacy, as I’ve argued before. (danah boyd’s [@zephoria] recent article “Networked Privacy” [pdf] provides a name for the [...]

Difference Without Dualism (Part One) » Cyborgology — March 20, 2013

[...] and inextricably enmeshed, which makes augmented reality a non-optional system, so no, you simply cannot “log off” or “disconnect.” The impossibility of escaping the influence of digitally-mediated interaction means that there is [...]

Sextual Healing » Cyborgology — September 4, 2013

[...] fact that some people choose to circulate photo-sexts that were intended to remain private. Forwarding a sext is a failure to understand respect, trust, and boundaries, while the shame and [...]

The Spotification Diaries » Cyborgology — September 21, 2013

[...] but also of my professional development. This is not, of course, to say that I’ve jumped on the ‘Look At Me’ paradigm of Mandatory Visibility for All bandwagon, because nothing could be further from the truth; it’s [...]

Rape Culture, Consent, and “Grabbing 100+ Boobs at Burning Man 2013” » Cyborgology — September 26, 2013

[...] reactions are merely the latest examples of what Whitney Erin Boesel calls the Shame on You paradigm of victim-blaming in cases of privacy violation. She [...]

Google Glass Doesn’t Erode Privacy. Human Behavior Does. - TIME — May 19, 2014

[…] I’ve written elsewhere, we are currently caught between two conflicting privacy paradigms—but neither paradigm takes […]

GOOGLE GLASS DOESN’T HAVE A PRIVACY PROBLEM. YOU DO. | sreaves32 — May 19, 2014

[…] I’ve written elsewhere, we are caught between two conflicting privacy paradigms—but neither paradigm takes into account […]

Indecent Exposure: Breasts as Data, Data as Breasts » Cyborgology — June 19, 2014

[…] care about these things a lot, and I’ve been writing about them on Cyborgology for two years now. But to everyone who comments on these issues, whether in text or in image or in any other […]
