Stories of data breaches and privacy violations dot the news landscape on a near-daily basis. This week, security vendor Carbon Black published their Australian Threat Report based on 250 interviews with tech executives across multiple business sectors. Of those interviewed, 89% reported some form of data breach in their companies. That’s almost everyone. These breaches represent both a business problem and a social problem. Privacy violations threaten institutional and organizational trust and also expose individuals to surveillance and potential harm.

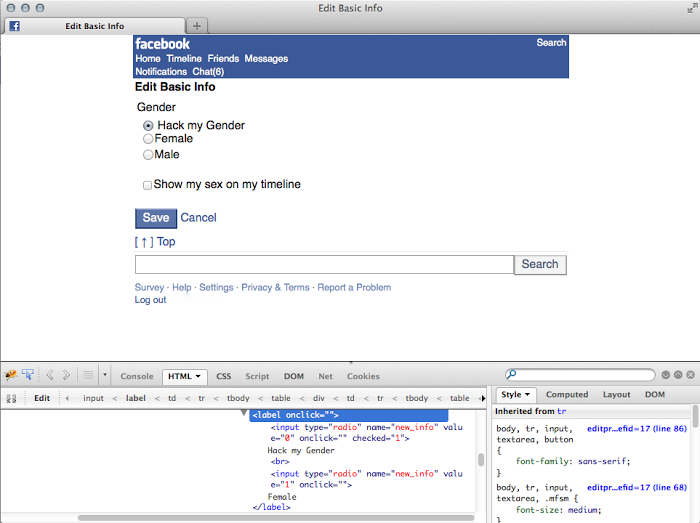

But “breaches” are not the only way that data exposure and privacy violations take shape. Often, widespread surveillance and exposure are integral to technological design. In such cases, exposure isn’t leveled at powerful organizations, but enacted by them. Legacy services like Facebook and Google trade in data. They provide information and social connection, and users provide copious information about themselves. These services are not common goods, but businesses that operate through a data extraction economy.

I’ve been thinking a lot about the cost-benefit dynamics of data economies and, in particular, how to grapple with the fact that for most individuals, including myself, the data exchange feels relatively inconsequential or even mildly beneficial. Yet at a societal level, the breadth and depth of normative surveillance is devastating. Resolving this tension isn’t just an intellectual exercise, but a way of answering the persistent and nagging question: “Why should I care if Facebook knows where I ate brunch?” This is often wrapped in a broader “nothing to hide” narrative, in which data exposure is a problem only for deviant actors.