The Facebook newsfeed is the subject of a lot of criticism, and rightly so. Not only does it impose an echo chamber on your digitally mediated existence, but the company also constantly tries to convince users that it is user behavior, not its secret algorithm, that creates our personalized spin zones. But then there are moments when, for one reason or another, someone shows up in your newsfeed saying something super racist or misogynistic and you have to decide whether to respond. If you do, and maybe get into a little back-and-forth, Facebook does a weird thing: that person starts showing up in your newsfeed a lot more.

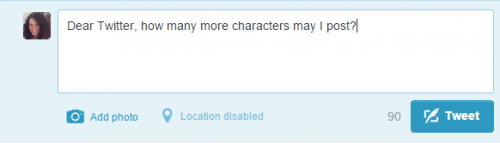

This happened to me recently, and it has me thinking about the role of the Facebook newsfeed in interpersonal instantiations of systematic oppression. Facebook’s newsfeed, specially formulated to increase engagement by presenting users with content that they have engaged with in the past, at once encourages white allyship against oppression and inflicts a kind of violence on women and people of color. The same algorithmic action can produce both consequences depending on the user.