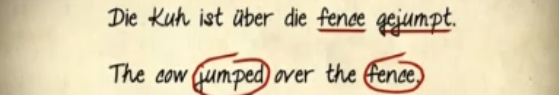

This four-minute BBC video documents a population of ethnic German-Americans. They are the descendants of Germans who immigrated to Texas 150 years ago. Over the generations, their German evolved into a unique dialect. Linguist Hans Boas is now trying to document the dialect before it dies out. Although it persisted for a very long time, World War II and the ensuing stigma against anything German brought an end to its transmission. Today’s speakers are all 60 or older and will soon be gone.

Lisa Wade, PhD, is an Associate Professor at Tulane University. She is the author of American Hookup, a book about college sexual culture; a textbook about gender; and a forthcoming introductory text, Terrible Magnificent Sociology. You can follow her on Twitter and Instagram.