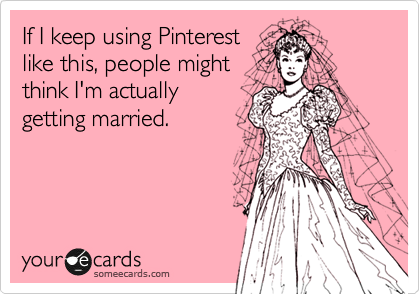

Whether they’ve joined me on Twitter, sneakily coerced me into spending more time on Facebook, or just like to go on at length about how social networking sites are “stupid and a waste of time,” it seems my friends never tire of talking to me about social media. Given my line of work, this is pretty great: it means a never-ending stream of food for thought (or “networked field research,” if you will). This post’s analysis-cum-cautionary tale comes to you through my friend Otto (we’ll refer to him by his nom de plume), who got himself into some pseudonuptial trouble last week.

It started when Otto was invited to a “wedding party”…