Last month in Part I (Distributed Agency and the Myth of Autonomy), I used the TtW2012 “Logging Off and Disconnection” panel as a starting point to consider whether it is possible to abstain completely from digital social technologies, and came to the conclusion that the answer is “no.” Rejecting digital social technologies can mean significant losses in social capital; depending on the expectations of the people closest to us, rejecting digital social technologies can mean seeming to reject our loved ones (or “liked ones”) as well. Even if we choose to take those risks, digital social technologies are non-optional systems; we can choose not to use them, but we cannot choose to live in a world where we are not affected by other people’s choices to use digital social technologies.

I used Facebook as an example to show that we are always connected to digital social technologies, whether we are connecting through them or not. Facebook (and other companies) collect what I call second-hand data, or data about people other than those from whom the data is collected. This means that whether we leave digital traces is not a decision we can make autonomously, as our friends, acquaintances, and contacts also make these decisions for us. We cannot escape being connected to digital social technologies any more than we can escape society itself.

This week, I examine two prevailing privacy discourses—one championed by journalists and bloggers, the other championed by digital technology companies—to show that, although our connections to digital social technology are out of our hands, we still conceptualize privacy as a matter of individual choice and control, as something individuals can ‘own’. Clinging to the myth of individual autonomy, however, leads us to think about privacy in ways that mask both structural inequality and larger issues of power.

Disclosure: Damned If You Do, and Damned If You Don’t

Inescapable connection notwithstanding, we still largely conceptualize disclosure as an individual choice, and privacy as a personal responsibility. This is particularly unsurprising in the United States, where an obsession with self-determination is foundational not only to the radical individualism that increasingly characterizes American culture, but also to much of our national mythology (to let go of the ‘autonomous individual’ would be to relinquish the “bootstrap” narrative, the mirage of meritocracy, and the shaky belief that bad things don’t happen to good people, among other things).

Though the intersection of digital interaction and personal information is hardly localized to the United States, major digital social technology companies such as Facebook and Google are headquartered in the U.S.; perhaps relatedly, the two primary discourses of privacy within that intersection share a good deal of underlying ideology with U.S. national mythology. The first of these discourses centers on a paradigm that I’ll call Shame On You, and spotlights issues of privacy and agency; the second centers on a paradigm that I’ll call Look At Me, and spotlights issues of privacy and identity.

“You shouldn’t have put it on the Internet, stupid!” Within the Shame On You paradigm, the control of personal information and the protection of personal privacy are not just individual responsibilities, but also moral obligations. Choosing to disclose is at best a risk and a liability; at worst, it is the moment we bring upon ourselves any unwanted social, emotional, or economic impacts that will stem (at any point, and in any way) from either an intended or an unintended audience’s access to something we have made digitally available. Disclosure is framed as an individual choice, though we need not choose intentionally or even knowingly; it can be the choice to disclose information, the choice to make incorrect assumptions about to whom information is (or will be) accessible, or the choice to remain ignorant of what, when, by whom, how, and to what end that information can be made accessible.

A privacy violation is therefore ultimately a failure of vigilance, a failure of prescience; it redefines as disclosure the instant in which we should have known better, regardless of what it is we should have known. Accordingly, the greatest shame in compromised privacy is not what is exposed, but the fact of exposure itself. We judge people less for showing women their genitals, and more for being reckless enough to get caught doing so on Twitter.

Shame On You was showcased most recently in the commentary surrounding the controversial iPhone app “Girls Around Me,” which used a combination of public Google Maps data, public Foursquare check-ins, and ‘publicly available’[i] Facebook information to create a display of nearby women. The creators of Girls Around Me claimed their so-called “creepy” app was being targeted as a scapegoat, and insisted that the app could just as well be used to locate men instead of women. Nonetheless, the creators’ use of the diminutive term “girls” rather than the more accurate term “women” exemplifies the sexism and the objectification of women on which the app was designed to capitalize. (If the app’s graphic design somehow failed to make this clear, see also one developer’s comments about using Girls Around Me to “[avoid] ugly women on a night out”).

The telling use of “girls” seemed to pass uncommented upon, however, and most accounts of the controversy (with few exceptions) omitted gender and power dynamics from the discussion—as well as “society, norms, politics, values and everything else confusing about the analogue world.” The result was a powerful but unexamined synergy between Shame On You and the politics of sex and visibility, one that cast as transgressors women who had dared not only to go out in public, but to publicly declare where they had gone.

Women and men alike uncritically reproduced the app’s demeaning language by referring to “girls” throughout their commentaries; many argued that the app was not the problem, and insisted furthermore that its developers had done nothing wrong. The blogger who broke the Girls Around Me story explicitly states that “the real problem” is not the app (which was made by “guys” who are “super nice,” and which was “meant to be all in good fun”), but rather people who, “out of ignorance, apathy or laziness,” do not opt out of allowing strangers to view their information. He and a number of others hope Girls Around Me will serve as “a wake up call” to “girls who have failed to lock down their info” and to “those who publicly overshare,” since “we all have a responsibility to protect our own privacy” instead of leaving “all our information out there waving in the wind.”

Another blogger admonishes that, “the only way to really stay off the grid is to never sign up for these services in the first place. Failing that, you really should take your online privacy seriously. After all, Facebook isn’t going to help you, as the more you share, the more valuable you are to its real customers, the advertisers. You really need to take responsibility for yourself.” Still another argues that conventional standards of morality and behavior no longer apply to digitally mediated actions, because “publishing anything publicly online means you have, in fact, opted in”—to any and every conceivable (or inconceivable) use of whatever one might have made available. The pervasive tone of moral superiority, both in these articles and in others like them, proclaims loudly and clearly: Shame On You—for being foolish, ignorant, careless, naïve, or (worst of all) deliberately choosing to put yourself on digital display.

Accounts such as these serve not only to deflect attention away from problems like sexism and objectification, but to normalize and naturalize a veritable litany of questionable phenomena: pervasive surveillance, predatory data collection, targeted ads, deliberately obtuse privacy policies, onerous opt-out procedures, neoliberal self-interest, the expanding power of social media companies, the repackaging of users as products, and the simultaneous monetization and commoditization of information, to name just a few. These accounts perpetuate the myth that we are all autonomous individuals, isolated and distinct, endowed with indomitable agency and afforded infinite arrays of choices.

With other variables obfuscated or reduced to the background noise of normalcy, the only things left to blame for unanticipated or unwanted outcomes (or for our disquietude at observing such outcomes) are those individuals who choose to expose themselves in the first place. Of course corporations try to coerce us into “putting out” information, and of course they will take anything they can get if we are not careful; this is just their nature. It is up to us to be good users, to keep telling them no, to remain vigilant and distrustful (even if we like them), and never to let them go all the way. We are to keep the aspirin between our knees, and our data to ourselves.

Shame On You extends beyond disclosure to corporations, and—for all its implicit digital dualism—beyond digitally mediated disclosure as well. Case in point: during the course of writing this essay, I received a mass-emailed “Community Alert Bulletin” in which the Chief of Police at my university campus warned of “suspicious activity.” On several occasions, it seems, two men have been seen “roaming the library;” one of them typically “acts as a look out,” while the other approaches a woman who is sitting or studying by herself. What does one man do while the other keeps watch? He “engages” the woman, and “asks for personal information.” The “exact intent or motives of the subjects” is unknown, but the ‘Safety Reminders’ at the end of the message instruct, “Never provide personal information to anyone you do not know or trust.”

If ill-advised disclosure were always this simple—suspicious people asking us outright to reveal information about ourselves—perhaps the moral mandate of Shame On You would seem slightly less ridiculous. As it stands, holding individuals primarily responsible for violations of their own privacy expands the operative definition of “disclosure” to absurd extremes. If we post potentially discrediting photos of ourselves, we are guilty of disclosure through posting; if friends (or former lovers) post discrediting photos of us, we are guilty of disclosure through allowing ourselves to be photographed; if we did not know that we were being photographed, we are guilty of disclosure through our failure to assume that we would be photographed and to alter our actions accordingly. If we do not want Facebook to have our names or our phone numbers, we should terminate our friendships with Facebook users, forcibly erase our contact information from those users’ phones, and thereafter give false information to any suspected Facebook users we might encounter. This is untenable, to say the least. The inescapable connection of life in an augmented world means that exclusive control of our personal information, as well as full protection of our personal privacy, is quite simply out of our personal hands.

* * * * *

The second paradigm, Look At Me, at first seems to represent a competing discourse. Its most vocal proponents are executives at social- and other digital media companies, along with assorted technologists and other Silicon Valley ‘digerati’. This paradigm looks at you askance not for putting information on the Internet, but for forgetting that “information wants to be free”—because within this paradigm, disclosure is the new moral imperative. Disclosure is no longer an action that disrupts the guarded default state, but the default state itself; it is not something one chooses or does, but something one is, something one has always-already done. Privacy, on the other hand, is a homespun relic of a bygone era, as droll as notions of a flat earth; it is particularly impractical in the 21st century. After all, who really owns a friendship? “Who owns your face if you go out in public?”

Called both “openness” and “radical transparency,” disclosure-by-default is touted as a social and political panacea; it will promote kindness and tolerance toward others, fuel progress and innovation, create accountability, and bring everyone closer to a better world. Alternatively, clinging to privacy will merely harbor evil people and condemn us to “generic relationships.” The enlightened “don’t believe that privacy is a real issue,” and anyone who maintains otherwise is suspect; as even WikiLeaks activist Jacob Appelbaum (@ioerror) has lamented, privacy is cast “as something that only criminals would want.” Our greatest failings are no longer what we do or what we choose, but who we are; our greatest shame is not in exposure, but in having or being something to hide.

Look At Me has benefitted, to some degree, from a synergy of its own: “openness,” broadly conceived, has gained traction as more ‘open’ movements and initiatives attract attention and build popular support. In 1998, the Open Source Initiative was the first to claim ‘open’ as a moniker; it is now joined by what one writer has called an “open science revolt,” by not one, but two Open Science movements, by President Obama’s Open Government Initiative, by the somewhat meta Open Movements movement, and by a number of other initiatives aimed at reforming academic research and publishing.

“Transparency,” too, has been popularized into a buzzword (try web-searching “greater transparency,” with quotes). In an age of what Jurgenson and Rey (@nathanjurgenson and @pjrey) have called liquid politics, people demand transparency from institutions in the hope that exposure will encourage the powerful to be honest; in return, institutions offer cryptic policy documents, unreadable reports, and “rabbit hole[s] of links” as transparency simulacra. Yet we continue to push for transparency from corporations and governments alike, which suggests that, to some degree, we do believe in transparency as a means to progressive ends. Perhaps it is not such a stretch, then, for social media companies (and others) to ask that we accept radical transparency for ourselves as well?

The 2011 launch of social network-cum-“identity service” Google+ served to test this theory, but the protracted “#nymwars” controversy that followed seemed not to be the result that Google had anticipated. Although G+ had been positioned as ‘the anti-Facebook’ in advance of its highly anticipated beta launch, within four weeks of going live Google decided not only to enforce a strict ‘real names only’ policy, but to do so through a “massive deletion spree” that quickly grew into a public relations debacle. The mantra in Silicon Valley is, “Google doesn’t get social,” and this time Google managed to (as one blogger put it) “out-zuck the Zuck.” Though sparked by G+, #nymwars did not remain confined to G+ in its scope; nor were older battle lines around privacy and anonymity redrawn for this particular occasion.

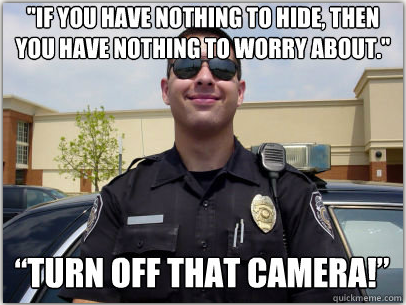

On one side of the conflagration, rival data giants Google and Facebook both pushed their versions of ‘personal radical transparency’; they were more-or-less supported by a loose assortment of individuals who either had “nothing to hide,” or who preached the fallacy that, ‘if you have nothing to hide, you have nothing to fear.’ The ‘nothing to hide’ argument in particular has been a perennial favorite for Google; well before the advent of G+ and #nymwars, CEO Eric Schmidt warned, “If you have something that you don’t want anyone to know, maybe you shouldn’t be doing it in the first place.” Unsurprisingly, Schmidt’s dismissive response to G+ users with privacy concerns was a smug, “G+ is completely optional. No one is forcing you to use it.” If G+ is supposed to be an ‘identity service,’ it seems only some identities are worthy of being served.

On the other side was a ‘vocal minority’ who, despite their purportedly fewer numbers, still seemed to generate most of the substantive digital content about #nymwars[ii]. The pro-pseudonym-and/or-privacy camp included the Electronic Frontier Foundation (@EFF), a new website called My Name Is Me, researcher danah boyd (@zephoria), pseudonymity advocate and former Google employee Skud (@Skud, ‘Kirrily Robert’ on her passport at the time and ‘Alex Skud Bayley’ on it now), security technologist Bruce Schneier (@schneierblog, who had argued previously against Schmidt’s view of privacy), the hacker group Anonymous (unsurprisingly), and a substantive minority of Google employees (or “Googlers”). There was a long list of pseudonymous and ‘real named’ users who had been kicked off the site, an irate individual banned because of his “unusual” real name, and an individual with an unremarkable name who couldn’t seem to get banned no matter how hard he tried.

Digital dualists took issue, too; one declared the ‘real names’ policy to be “a radical departure from the way identity and speech interact in the real world,” while another came to the conclusion that, “The real world is often messy, chaotic and anonymous. The Internet is mostly better that way, too.” And in what may be one of my favorite #nymwars posts, a sixteen-year-old blogger took on the absurdity of equating not only ‘real name’ with ‘known as,’ but also ‘network’ with ‘community,’ and then went on to argue that Shakespeare’s Juliet had it right in asking, “What’s in a name?” whereas Google’s Eric Schmidt sounded “shockingly similar” to the head of “the evil, Voldemort-controlled Ministry of Magic” in J. K. Rowling’s Harry Potter books.

Issues of power are conspicuously absent from Look At Me, and Google makes use of this fact to gloss the profound difference between individuals pressuring a company to be transparent and a company pressuring (or forcing) individuals to be transparent. Google is transparent, for example, in its ad targeting, but allowing users either to opt-out of targeted advertising or to edit the information used in targeting them does not change the fact that Google users are being tracked, and that information about them is being collected. This kind of ‘transparency’ offers users not empowerment, but what Davis (@Jup83) calls “selective visibility”: users can reduce their awareness of being tracked by Google, but can do little (short of complete Google abstention) to stop the tracking itself.

Such ‘transparency’ therefore has little effect on Google in practice; with plenty more fish in the sea (and hundreds of millions of userfish already in its nets), Google has little incentive to care whether any particular user does or does not continue to use Google products. Individual users, on the other hand, can find quitting Google to be a right pain, and this imbalance in the Google/user relationship effectively gives carte blanche to Google policymakers. This is especially problematic with respect to transparency, which has a far greater impact on individual Google users than it does on Google itself.

Google may have ‘corporate personhood’, but persons have identities; in the present moment, many identities still serve to mark people for discrimination, oppression, and persecution, whether they are “evil” or not. Look At Me claims personal radical transparency will solve these problems, yet social media spaces are neither digital utopias nor separate worlds; all regular “*-isms” still apply, and real names don’t stop bullies or trolls in digital spaces any more than they do in conventional spaces. At the height of irony, even ‘real-named’ G+ users who supported Google’s policy still trolled other users who supported pseudonyms.

boyd offers a different assessment of ‘real names’ policies, one that stands in stark contrast to the doctrine of radical transparency. She argues that, far from being empowering, ‘real name’ policies are “an authoritarian assertion of power over vulnerable people.” Geek Feminism hosts an exhaustive list of the many groups and individuals who can be vulnerable in this way, and Audrey Watters (@audreywatters) explains that many people in positions of power do not hesitate to translate their disapproval of even ordinary activities into adverse hiring and firing decisions. Though tech CEOs call for radical transparency, a senior faculty member (for example) condemns would-be junior faculty whose annoying “quirks” turn up online instead of remaining “stifle[d]” and “hidden” during the interview process. As employers begin not only to Google-search job applicants, but also to demand Facebook passwords, belonging to what Erving Goffman calls a “discreditable group” carries not just social but economic consequences.

Rey points out that there are big differences between cyber-libertarianism and cyber-anarchism; if “information” does, in fact, “want to be free,” it does not always “want” to be free for the same reasons. ‘Openness’ does not neutralize preexisting inequality (as the Open Source movement itself demonstrates), whereas forced transparency can be a form of outing with dubious efficacy for encouraging either tolerance or accountability. As boyd, Watters, and Geek Feminism demonstrate, those who call most loudly for radical transparency are neither those who will supposedly receive the greatest benefit from it, nor those who will pay its greatest price. Though some (economically secure, middle-aged, heterosexual, able-bodied) white men acknowledged their privilege and tried to educate others like them about why pseudonyms are important, the loudest calls for ‘real names’ were ultimately the “most privileged and powerful” calling for the increased exposure of marginalized others. Maybe the full realization of an egalitarian utopia would lead more people to choose ‘openness’ or ‘transparency’, or maybe it wouldn’t. But strong-arming more people into ‘openness’ or ‘transparency’ certainly will not lead to an egalitarian utopia; it will only exacerbate existing oppressions.

The #nymwars arguments about ‘personal radical transparency’ reveal that Shame On You and Look At Me are at least as complementary as they are competing. Both preach in the same superior, moralizing tone, even as one lectures about reckless disclosure and the other levels accusations of suspicious concealment. Both would agree to place all blame squarely on the shoulders of individual users, if only they could agree on what is blameworthy. Both serve to naturalize and to normalize the same set of problematic practices, from pervasive surveillance to the commoditization of information.

Most importantly, both turn a blind eye to issues of inequality, identity, and power, and in so doing gloss distinctions that are of critical importance. If these two discourses are as much in cahoots as they are in conflict, what is the composite picture of how we think about privacy, choice, and disclosure? What is the impact of most social media users embracing one paradigm, and most social media designers embracing the other?

As I will show next week, the conception of privacy as an individual matter—whether as a right or as something to be renounced—is the linchpin of the troubling consequences that follow.

Whitney Erin Boesel (@phenatypical) is a graduate student in Sociology at the University of California, Santa Cruz.

Image Credits:

Baby bathtub image from http://www.mommyshorts.com/2011/11/caption-contest.html

Peeping bathroom image from http://gweedopig.com/index.php/2009/11/03/peeping-tom-hides-video-camera-in-christian-store-bathroom/

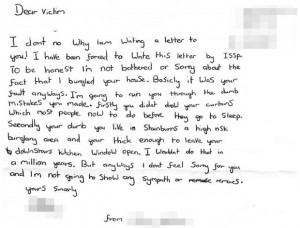

Dear Victim image from http://news.nationalpost.com/2011/11/24/let-me-run-through-your-dumb-mistakes-teen-burglars-apology-letter-to-victims/

Pushpin image from http://www.pinewswire.net/article/to-warrant-or-not-to-warrant-aclu-police-clash-over-cellphone-location-data/

Don’t rape sign image from http://higherunlearning.files.wordpress.com/2012/05/dsc7600r.jpg?w=580&h=510

Anti-woman image from http://www.feroniaproject.org/fired-for-using-contraception-maybe-in-arizona/

Naked wedding photo from http://www.mccullagh.org/photo/1ds-10/burning-man-cathedral-wedding

Cop image from http://www.quickmeme.com/meme/6ajf/

G+ vs. Facebook comic: by Randall Munroe, from xkcd: http://xkcd.com/918/

Transparency word cluster from http://digiphile.wordpress.com/2010/03/28/transparency-camp-2010-government-transparency-open-data-and-coffee/

Transparent sea creature image from http://halfelf.org/2012/risk-vs-transparency/

Transparent grenade image from http://www.creativeapplications.net/objects/the-transparency-grenade-by-julian-oliver-design-fiction-for-leaking-data/

Transparency and toast image from http://time2morph.files.wordpress.com/2012/01/transparent-toaster.jpg?w=300&h=234

[i] Note that “publicly available” is tricky here: Girls Around Me users were redirected to Facebook to view the women’s profiles, and so could also have accessed non-public information if they happened to view profiles of women with whom they had friends or networks in common.

[ii] Alternatively, my difficulty finding posts written in support of Google’s policy may simply reflect Google’s ‘personalization’ of my search results, both then and now.
