This is cross-posted from xcphilosophy.

Traditionally, social identities (race, gender, class, sexuality, etc.) use outward appearance as the basis for inferences about inner content, character, or quality. Kant and Hegel, for example, inferred races’ defining characteristics from the physical geography of their ‘native’ territories: the outward appearance of one’s homeland determines one’s laziness or industriousness. [1] Stereotypes connect outward appearance to personality and (in)capability; your bodily morphology is a key to understanding whether you’re good at math, dancing, caring, car repair, etc. Stereotypes are an example of what Linda Martin Alcoff calls “our ‘visible’ and acknowledged identity” (92). The attribution of social identity is a two-step process. First, we use visual cues about the subject’s physical features, behavior, and comportment to classify them by identity category. We then make inferences about their character, moral and intellectual capacity, tastes, and other personal attributes, based on their group classification. As Alcoff puts it, visual appearance is taken “to be the determinate constitutive factor of subjectivity, indicating personal character traits and internal constitution” (183). She continues, “visible difference, which is materially present even if its meanings are not, can be used to signify or provide purported access to a subjectivity through observable, ‘natural’ attributes, to provide a window on the interiority of the self” (192). An identity is a “social identity” when outward appearance (i.e., group membership) itself is a sufficient basis for inferring/attributing someone’s “internal constitution.” As David Halperin argues, what makes “sexuality” different from “gender inversion” and other models for understanding same-sex object choice is that “sexuality” includes this move from outward features to “interior” life.
Though we may identify people as, say, Cubs fans or students, we don’t usually use that identity as the basis for making inferences about their internal constitution. Social identities are defined by their dualist logic of interpretation or representation: the outer appearance is a signifier of otherwise imperceptible inner content.

Social identities employ a specific type of vision, which Alia Al-Saji calls “objectifying vision” (375). This method of seeing is objectifying because it is “merely a matter of re-cognition, the objectivation and categorization of the visible into clear-cut solids, into objects with definite contours and uses” (375). Objectifying vision treats each visible thing as a token instance of a type. Each type has a set of distinct visual properties, and these properties are the basis upon which tokens are classified by type. According to this method of vision, seeing is classifying. Objectifying vision, in other words, only sees (stereo)types. As Alcoff argues, “Racism makes productive use of this look, using learned visual cues to demarcate and organize human kinds. Recall the suggestion from Goldberg and West that the genealogy of race itself emerged simultaneous to the ocularcentric tendencies of the Western episteme, in which the criterion for knowledge was classifiability, which in turn required visible difference” (198). Social identities are visual technologies because a certain Enlightenment concept of vision–what Al-Saji called “objectifying vision”–is the best and most efficient means to accomplish this type of “classical episteme” (to use Foucault’s term from The Order of Things) classification. Modern society was organized according to this type of classification (fixed differences in hierarchical relation), so objectifying vision was central to modernity’s white supremacist, patriarchal, capitalist social order.

This leads Alcoff to speculate that de-centering (objectifying) vision would in turn de-center white supremacy:

Without the operation through sight, then, perhaps race would truly wither away, or mutate into less oppressive forms of social identity such as ethnicity and culture, which make references to the histories, experiences, and productions of a people, to their subjective lives, in other words, and not merely to objective and arbitrary bodily features (198).

In other words, changing how we see would change how society is organized. With the rise of big data, we have, in fact, changed how we see, and this shift coincides with the reorganization of society into post-identity MRWaSP. Just as algorithmic visualization supplements objectifying vision, what John Cheney-Lippold calls “algorithmic identities” supplement and in some cases replace social identities. These algorithmic identities sound a lot like what Alcoff describes in the preceding quote as a positive liberation from what’s oppressive about traditional social identities:

These computer algorithms have the capacity to infer categories of identity upon users based largely on their web-surfing habits…using computer code, statistics, and surveillance to construct categories within populations according to users’ surveilled internet history (164).

Identity is not assigned based on visible body features, but according to one’s history and subjective life. However, algorithmic identities, especially because they are designed to serve the interests of the state and capitalism, are not a liberation from what’s oppressive about social identities (the two often work in concert). They’re just an upgrade on white supremacist patriarchy, a technology that allows it to operate more efficiently according to new ideals and methods of social organization.

Like social identities, algorithmic identities turn on an inference from observed traits. However, instead of using visible identity as the basis of inference, algorithmic identities infer identity itself. As Cheney-Lippold argues, “categories of identity are being inferred upon individuals based on their web use…code and algorithm are the engines behind such inference” (165). So, algorithms are programmed to infer both (a) what identity category an individual user profile fits within, and (b) the parameters of that identity category itself. A gender algorithm “can name X as male, [but] it can also develop what ‘male’ may come to be defined as online” (167). “Maleness” as a category includes whatever behaviors are statistically correlated with reliably identified “male” profiles: “maleness” is whatever “males” do. This is a circular definition, and that’s a feature not a bug. Defining gender in this way, algorithms can track shifting patterns of use within a group, and/or in respect to a specific behavior. For example, they could distinguish between instances in which My Little Pony: Friendship Is Magic is an index of feminine or masculine behavior (when a fan is a young girl, and when a fan is a “Brony”). Identity is a matter of “statistical correlation” (170) within a (sub)group and between an individual profile and a group. (I’ll talk about the exact nature of this correlation a bit below.)
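To make the circularity concrete, here is a minimal sketch of steps (a) and (b). It is a toy illustration, not a reconstruction of any system Cheney-Lippold describes: the site names, the seed labels, and the frequency-based scoring are all hypothetical assumptions chosen for simplicity.

```python
from collections import Counter

# All site names and seed labels below are hypothetical illustration data.
seed_histories = {
    "male": [["sports_site", "car_forum"], ["car_forum", "tech_blog"]],
    "female": [["recipe_site", "fashion_blog"], ["fashion_blog", "parenting_forum"]],
}

def learn_profiles(labeled_histories):
    """Define each category as the relative frequency of behaviors
    among the profiles currently assigned to it."""
    profiles = {}
    for category, histories in labeled_histories.items():
        counts = Counter(site for history in histories for site in history)
        total = sum(counts.values())
        profiles[category] = {site: n / total for site, n in counts.items()}
    return profiles

def infer_category(history, profiles):
    """Assign the category whose current profile best matches the history."""
    scores = {
        category: sum(profile.get(site, 0.0) for site in history)
        for category, profile in profiles.items()
    }
    return max(scores, key=scores.get)

def assign_and_redefine(history, labeled_histories):
    """Step (a): classify the user. Step (b): fold their behavior back
    into the category, which changes what the category means."""
    category = infer_category(history, learn_profiles(labeled_histories))
    updated = {c: list(h) for c, h in labeled_histories.items()}
    updated[category].append(history)
    return category, learn_profiles(updated)

before = learn_profiles(seed_histories)
new_user = ["car_forum", "tech_blog", "pony_fandom"]
category, after = assign_and_redefine(new_user, seed_histories)
```

In this toy run the new profile is classed with the "male" seed profiles because of the overlapping sites, and the hypothetical `pony_fandom` behavior, which was absent from the category beforehand, then becomes part of what "male" statistically means. The category is defined by its members and the members by the category.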

This algorithmic practice of identity formation and assignment is just the tool that a post-identity society needs. As Cheney-Lippold argues, “algorithms allow a shift to a more flexible and functional definition of the category, one that de-essentializes gender from its corporeal and societal forms and determinations” (170). Algorithmic gender isn’t essentialist because gender categories have no necessary properties and are constantly open to reinterpretation. An identity is a feedback loop of mutual renegotiation between the category and individual instances. So, as long as an individual is sufficiently (“statistically”) masculine or feminine in their online behavior, they are that gender–regardless, for example, of their meatspace “sex.” As long as the data you generate falls into recognizably “male” or “female” patterns, then you assume that gender role. Because gender is de-essentialized, it seems like an individual “choice” and not a biologically determined fact. Anyone, as long as they act and behave in the proper ways, can access the privileges of maleness. This is part of what makes algorithmic identity “post-identity”: privileged categories aren’t de-centered, just expanded a bit and made superficially more inclusive.

Back to the point about the exact nature of the “correlation” between individual and group. Cheney-Lippold’s main argument is that identity categories are now in a mutually-adaptive relationship with (in)dividual data points and profiles. Instead of using disciplinary technologies to compel exact individual conformity to a static, categorical norm, algorithmic technologies seek to “modulate” both (in)dividual behavior and group definition so they synch up as efficiently as possible. The whole point of dataveillance and algorithmic marketing is to “tailor results according to user categorizations…based on the observed web habits of ‘typical’ women and men” (171). For example, targeted online marketing is more interested in finding the ad that most accurately captures my patterns of gendered behavior than in compelling or enforcing a single kind of gendered behavior. This is why, as a partnered, graduate-educated, white woman in her mid-30s, I get tons of ads for both baby and fertility products and services. Though statistically those products and services are relevant for women of my demographic, they’re not relevant to me (I don’t have or want kids)…and Facebook really, really wants to know that these ads aren’t relevant, and why they aren’t relevant. There are feedback boxes I can click, and have clicked, to get rid of all the baby content in my News Feed. Demanding conformity to one and only one feminine ideal is less profitable for Facebook than tailoring their ads to more accurately reflect my style of gender performance. They would much rather send me ads for the combat boots I probably will click through and buy than the diapers or pregnancy tests I won’t. Big data capital wants to get in synch with you just as much as post-identity MRWaSP wants you to synch up with it. [2] Cheney-Lippold calls this process of mutual adaptation “modulation” (168).
A type of “perpetual training” (169) of both us and the algorithms that monitor us and send us information, modulation compels us to temper ourselves by the scales set out by algorithmic capitalism, but it also re-tunes these algorithms to fall more efficiently in phase with the segments of the population it needs to control.
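The algorithm's half of this mutual adaptation can be sketched in a few lines. This is a hedged toy model under my own assumptions (the ad names, starting weights, update rule, and `rate` parameter are all hypothetical), not a description of how Facebook or any real ad system works. It also models only the re-tuning of the algorithm, not the tempering of the user.

```python
def modulate(ad_weights, shown_ad, clicked, rate=0.5):
    """Nudge one ad's weight toward 1.0 after a click and toward 0.0
    after a non-click, leaving the other weights untouched."""
    target = 1.0 if clicked else 0.0
    updated = dict(ad_weights)
    updated[shown_ad] += rate * (target - updated[shown_ad])
    return updated

# Hypothetical starting point: the demographic profile predicts diapers.
weights = {"diapers": 0.8, "combat_boots": 0.2}

# Hypothetical feedback: (ad shown, whether the user clicked through).
feedback = [("diapers", False), ("combat_boots", True), ("diapers", False)]

for shown_ad, clicked in feedback:
    weights = modulate(weights, shown_ad, clicked)

# The ad the system would now prefer to show this profile.
best_ad = max(weights, key=weights.get)
```

Nothing here punishes the profile for deviating from the demographic norm. Instead, each interaction is treated as training data, and the targeting drifts toward whatever this profile actually clicks on.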

The algorithms you synch up with determine the kinds of opportunities and resources that will be directed your way, and the number of extra hoops you will need to jump through (or not) to be able to access them. Modulation “predicts our lives as users by tethering the potential for alternative futures to our previous actions as users” (Cheney-Lippold 169). Your past patterns of behavior determine the opportunities offered you, and the resources you’re given to realize those opportunities. Think about credit scores: your past payment and employment history determines your access to credit (and thus to housing, transportation, even medical care). Credit history determines the cost at which future opportunity comes–opportunities are technically open to all, but at a higher cost to those who fall out of phase with the most healthful, profitable, privileged algorithmic identities. Such algorithmic governmentality “configures life by tailoring its conditions of possibility” (Cheney-Lippold 169): the algorithms don’t tell you what to do (or not to do), but they open specific kinds of futures for you.

Modulation is a particularly efficient way of neutralizing and domesticating the resistance that performative iterability posed to disciplinary power. As Butler famously argued, discipline compels perfect conformity to a norm, but because we constantly have to re-perform these norms (i.e., re-iterate them across time), we often fail to embody norms: some days I look really femme, other days, not so much. Because disciplinary norms are performative, they give rise to unexpected, extra-disciplinary iterations; in this light, performativity is the critical, inventive styles of embodiment that emerge due to the failure of exact disciplinary conformity over time. Where disciplinary power is concerned, time is the technical bug that, for activists, is a feature. Modulation takes time and makes it a feature for MRWaSP–it co-opts the iterative “noise” performativity made around and outside disciplinary signal. As Cheney-Lippold explains, with modulation “the implicit disorder of data collected about an individual is organized, defined, and made valuable by algorithmically assigning meaning to user behavior–and in turn limiting the potential excesses of meanings that raw data offer” (170). Performative iterations made noise because they didn’t synch up with the static disciplinary norm; algorithmic modulation, however, accommodates identity norms to capture individual iterability over time and make rational previously irrational styles of gender performance. Time is no longer a site outside perfect control; with algorithmic modulation, time itself is the medium of control (modulation can only happen over time). 
[For those of you who have been following my critique of Elizabeth Grosz, this is where the rubber meets the road: her model of ‘time’ and the politics of dynamic differentiation is really just algorithmic identity as ontology, an ontology of data mining…which, notably, Cheney-Lippold defines as “the practice of finding patterns within the chaos of raw data” (169; emphasis mine).]

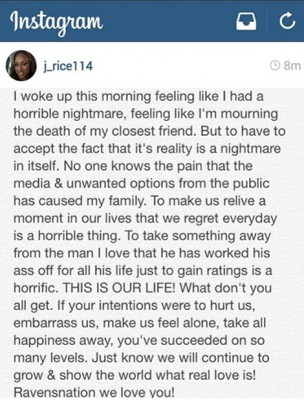

Objectifying vision and data mining are two very different technologies of identity. Social identities and algorithmic identities need to be understood in their concrete specificity. However, they also interact with one another. Algorithmic identities haven’t replaced social identities; they’ve just supplemented them. For example, your visible social identity still plays a huge role in how other users interact with you online. People who are perceived to be women get a lot more harassment than people perceived to be men, and white women and women of color experience different kinds of harassment. Similarly, some informal experimentation by women of color activists on Twitter strongly suggests that the visible social identity of the person in your avatar picture determines the way people interact with you. When @sueypark & @BlackGirlDanger switched from female to male profile pics, there was a marked reduction in trolling of their accounts. Interactions with other users create data, which then feeds into your algorithmic identity–so social and algorithmic identities are interrelated, not separate.

One final point: Both Alcoff and Al-Saji argue that vision is itself a more dynamic process than the concept of objectifying vision admits. Objectifying vision is itself a process of visualization (i.e., of the habits, comportments, and implicit knowledges that form one’s “interpretive horizon,” to use Alcoff’s term). In other words, their analyses suggest that the kind of vision at work in visual social identities is more like algorithmic visualization than modernist concepts of vision have led us to believe. This leaves me with a few questions: (1) What’s being left out of our accounts of algorithmic identity? When we say identity works in these ways (modulation, etc.), what if any parts of the process are we obscuring? Or, just as the story we told ourselves about “objectifying vision” was only part of the picture, what is missing from the story we’re telling ourselves about algorithmic identities? (2) Is this meta-account of objectifying vision and its relationship to social identity only possible in an epistemic context that also makes algorithmic visualization possible? Or, what’s the relationship between feminist critiques & revisions of visual social identities, and these new types and forms of (post)identity? (3) How can we take feminist philosophers’ recent-ish attempts to redefine “vision” and its relation to identity, and the related moves to center affect and implicit knowledge, and situate them not only as alternatives to modernist theories of social identity, but also as descriptive and/or critical accounts of post-identity?
I am concerned that many thinkers treat a shift in objects of inquiry/analysis–affect, matter, things/objects, etc.–as a sufficient break with hegemonic institutions, when in fact hegemonic institutions themselves have “modulated” in accord with the same underlying shifts that inform the institution of “theory.” But I hope I’ve shown one reason why this switch in objects of analysis isn’t itself sufficient to critique contemporary MRWaSP capitalism. How then do we “modulate” our theoretical practices to account for shifts in the politics of identity?

[1] As Kant argues, “The bulging, raised area under the eyes and the half-closed squinting eyes themselves seem to guard this same part of the face partly against the parching cold of the air and partly against the light of the snow…This part of the face seems indeed to be so well arranged that it could just as well be viewed as the natural effect of the climate” (11).

[2] “Instead of standards of maleness defining and disciplining bodies according to an ideal type of maleness, standards of maleness can be suggested to users based on one’s presumed digital identity, from which the success of identification can be measured according to ad click-through rates, page views, and other assorted feedback mechanisms” (Cheney-Lippold 171).