Sound happens when things vibrate, displacing air and creating pressure waves whose frequencies fall within the range the human ear can detect.

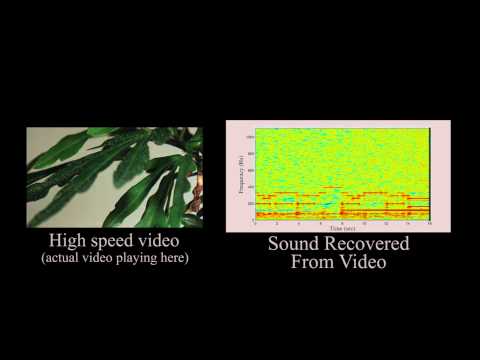

Researchers at MIT, working with Microsoft and Adobe, have developed an algorithm that reads video recordings of vibrating objects much as a microphone reads the vibrations of a diaphragm. I like to think it turns the world into a record: instead of vibrations etched in vinyl, the algorithm reads vibrations etched in pixels of light. It's a video phonograph, something that lets us hear the sounds written in the recorded motion of objects. As researcher Abe Davis explains:

> We’re recovering sounds from objects. That gives us a lot of information about the sound that’s going on around the object, but it also gives us a lot of information about the object itself, because different objects are going to respond to sound in different ways.

So this process gives us info about both the ambient audio environment and the materiality of the video-recorded objects. That's a lot of information, and it could obviously be used for all sorts of surveillance, which will likely be people’s primary concern with this practice.

But I think this is about a lot more than surveillance. This research reflects some general trends that cut across theory, pop culture, and media/tech: