During February, the United States observes Black History Month, celebrating the accomplishments of African Americans and acknowledging the racist history of the United States. Racism is not only part of the past, nor is it limited to prejudicial attitudes and overtly discriminatory practices against people of color. Social scientists demonstrate how racism is also institutional and cultural, and how these forms of racism powerfully reproduce racial inequalities, even in the absence of explicitly racist attitudes or beliefs.

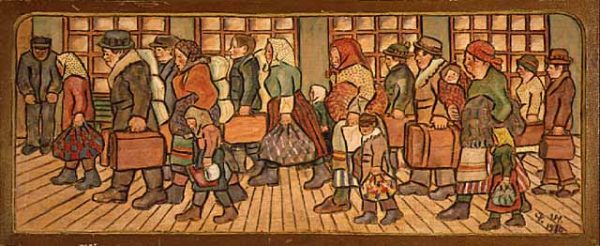

Social scientists commonly rely on two concepts to describe racism: institutional racism and symbolic or cultural racism. Institutional racism refers to how institutions and legal systems in the 21st century do not overtly consider race but still promote racial inequality. Historically rooted inequalities in institutions such as the economy, housing markets, the education system, and the criminal justice system help perpetuate racial disparities.

- Thomas Shapiro. 2017. Toxic Inequality: How America’s Wealth Gap Destroys Mobility, Deepens the Racial Divide, and Threatens Our Future. New York: Basic Books.

- Joe Soss and Vesla Weaver. 2017. “Police Are Our Government: Politics, Political Science, and the Policing of Race–Class Subjugated Communities.” Annual Review of Political Science 20:565–591.

- Barbara Reskin. 2012. “The Race Discrimination System.” Annual Review of Sociology 38(1):17–35.

- Jo C. Phelan and Bruce G. Link. 2015. “Is Racism a Fundamental Cause of Inequalities in Health?” Annual Review of Sociology 41(1):311–330.

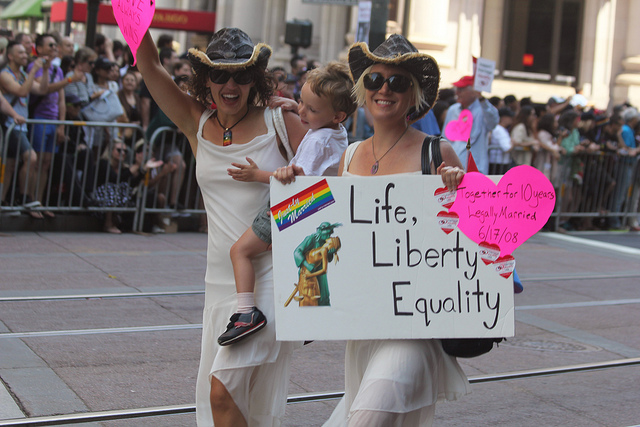

Social scientists use the concept of symbolic or cultural racism to discuss attitudes. Though explicitly prejudicial attitudes in the United States have declined, Americans today often use coded or symbolic language, especially when discussing political figures, immigration policy, or the criminal justice system. For example, instead of explicitly claiming that people of other races are biologically inferior, individuals may point to “problematic” values or attitudes of other racial groups. Such beliefs allow those who hold them to maintain that racial inequality is the fault of racial minority groups themselves.

- Lawrence Bobo, James R. Kluegel, and Ryan A. Smith. 1997. “Laissez-faire Racism: The Crystallization of a Kinder, Gentler, Antiblack Ideology.” Pp. 15–42 in Racial Attitudes in the 1990s: Continuity and Change, eds. S. Tuch and J. Martin. Westport, CT: Praeger.

- David O. Sears and P. J. Henry. 2003. “The Origins of Symbolic Racism.” Journal of Personality and Social Psychology 85(2):259.

- Justin Allen Berg. 2013. “Opposition to Pro‐Immigrant Public Policy: Symbolic Racism and Group Threat.” Sociological Inquiry 83(1):1–31.

- Christopher Sebastian Parker. 2016. “Race and Politics in the Age of Obama.” Annual Review of Sociology 42(1):217–30.