More social scientists are pointing out that the computer algorithms that run so much of our lives have our human, social biases baked in. This has serious consequences for determining who gets credit, who gets parole, and all kinds of other important life opportunities.

It also has some sillier consequences.

Last week NPR host Sam Sanders tweeted about his Spotify recommendations:

Y’all I think @Spotify is segregating the music it recommends for Me by the race of the performer, and it is so friggin’ hilarious pic.twitter.com/gA2wSWup6i

— Sam Sanders (@samsanders) March 21, 2018

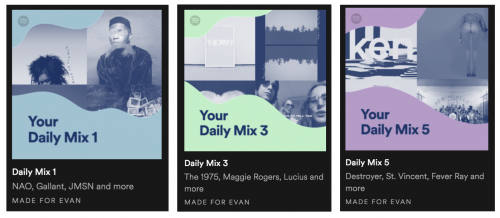

Others quickly chimed in with screenshots of their own. Here are some of my mixes:

The program has clearly learned to suggest music based on established listening patterns and genre norms. Sociologists know that musical tastes are a way we build communities and signal our identities to others, and the music industry reinforces these boundaries in its marketing, especially along racial lines.

These patterns highlight a core sociological point: social boundaries large and small emerge from our behavior even when nobody is trying to exclude anyone. Algorithms accelerate this process through the sheer number of interactions they can watch at any given time. It is important to remember the stakes of these design quirks when talking about new technology. After all, if biased results come out, the program probably learned them from watching us!

Evan Stewart is an assistant professor of sociology at University of Massachusetts Boston. You can follow his work at his website, or on BlueSky.
