More social scientists are pointing out that the computer algorithms that run so much of our lives have our human, social biases baked in. This has serious consequences for determining who gets credit, who gets parole, and all kinds of other important life opportunities.

It also has some sillier consequences.

Last week NPR host Sam Sanders tweeted about his Spotify recommendations:

Y’all I think @Spotify is segregating the music it recommends for Me by the race of the performer, and it is so friggin’ hilarious pic.twitter.com/gA2wSWup6i

— Sam Sanders (@samsanders) March 21, 2018

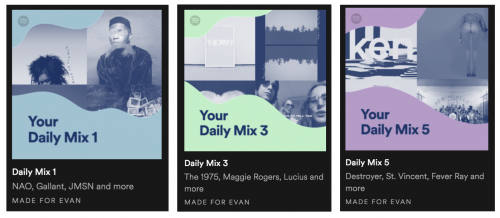

Others quickly chimed in with screenshots of their own. Here are some of my mixes:

The program has clearly learned to recommend music based on established listening patterns and genre norms. Sociologists know that music tastes are a way we build communities and signal our identities to others, and the music industry reinforces these boundaries in its marketing, especially along racial lines.

These patterns highlight a core sociological point: social boundaries, large and small, emerge from our behavior even when nobody is trying to exclude anyone. Algorithms accelerate this process through the sheer number of interactions they can observe at any given time. It is important to remember the stakes of these design quirks when we talk about new technology. After all, if biased results come out, the program probably learned them from watching us!

Evan Stewart is an assistant professor of sociology at University of Massachusetts Boston. You can follow his work at his website, on Twitter, or on BlueSky.
