Originally published March 30, 2022.

Today “help wanted” signs are commonplace; restaurants, shops, and cafes have temporarily closed or have cut back on hours due to staffing shortages. “Nobody wants to work,” the message goes. Some businesses now offer higher wages, benefits, and other incentives to draw in low-wage workers. All the same, “the great resignation” has been met with alarm across the country, from the halls of Congress to the ivory tower.

In America, where work is seen as virtuous, widespread resignations are certainly surprising. How does the current wave of people walking away from their jobs differ from what we’ve observed in the past, particularly in terms of frustrations about labor instability, declining benefits, and job insecurity? Sociological research on work, precarity, expectations, and emotions provides cultural context on the specificity and significance of “the great resignation.”

Individualism and Work

The importance of individualism in American culture is clear in the workplace. Unlike in the decades after World War II, when strong labor unions and a broad safety net ensured reliable work and ample benefits (for mostly white workers), instability and precarity are hallmarks of today’s workplace. A pro-work, individualist ethos values individuals’ flexibility, adaptability, and “hustle.” When workers are laid off due to shifting market forces and the profit motives of corporate executives, they internalize the blame. Instead of blaming executives for prioritizing stock prices over workers, or organizing to demand more job security, workers are encouraged by the cultural emphasis on individual responsibility to devote their energy to improving themselves and making themselves more attractive for the jobs that are available.

- Robert N. Bellah. 1985. “The Pursuit of Happiness” and “Culture and Character: The Historical Conversation.” Habits of the Heart: Individualism and Commitment in American Life. Berkeley: University of California Press.

- Richard Sennett. 2000. The Corrosion of Character: The Personal Consequences of Work in the New Capitalism. New York, NY: W. W. Norton & Company.

- Lester Spence. 2015. Knocking the Hustle: Against the Neoliberal Turn in Black Politics. Brooklyn, NY: Punctum Books.

Expectations and Experiences

For many, the pandemic offered a brief glimpse into a different world of work with healthier work-life balance and temporary (if meaningful) government assistance. Why and how have American workers come to expect unpredictable work conditions and meager benefits? The bipartisan, neoliberal consensus that took hold in the latter part of the twentieth century saw a reduction in government intervention in the social sphere. At the same time, a bipartisan pro-business political agenda reshaped how workers thought of themselves and their employers. Workers became individualistic actors or “companies of one” who looked out for themselves and their own interests instead of fighting for improved conditions. Today’s “precariat” – the broad class of workers facing unstable and precarious work – weathers instability by expecting little from employers or the government while demanding more of itself.

- Wendy Brown. 2017. Undoing the Demos: Neoliberalism’s Stealth Revolution. Princeton, NJ: Zone Books.

- Carrie M. Lane. 2011. A Company of One: Insecurity, Independence, and the New World of White-Collar Unemployment. Ithaca: Cornell University Press.

- Devika Narayan. 2022. “Manufacturing Managerial Compliance: How Firms Align Managers With Corporate Interest.” Work, Employment & Society, Forthcoming.

- Guy Standing. 2011. The Precariat: The New Dangerous Class. London: Bloomsbury Press.

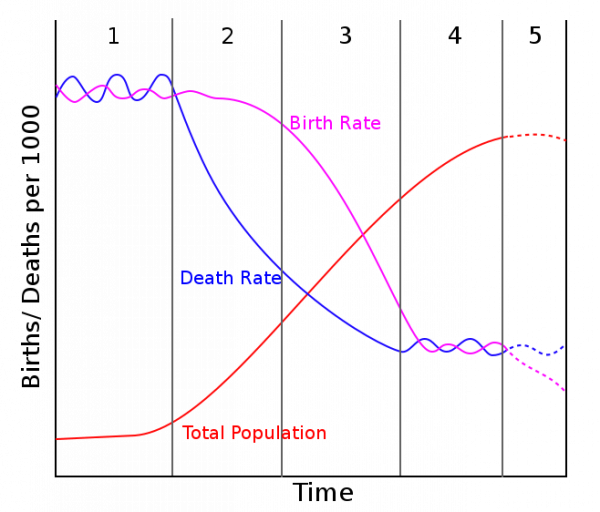

Generational Changes

Researchers have identified generational differences in expectations of work. Survey data shows that Baby Boomers experience greater difficulty with workplace instability and the emerging individualist ethos. On the other hand, younger generations – more accustomed to this precarity – manage the tumult with greater skill. These generational disparities in how insecurity is perceived have real implications for worker well-being and family dynamics.

- Sarah Burgard, Jennie Brand, James House. 2009. “Perceived Job Insecurity and Worker Health in the United States.” Social Science and Medicine 69(5): 777–785.

- Erin Kelly, Phyllis Moen, J. Michael Oakes, Wen Fan, Cassandra Okechukwu, Kelly Davis. 2014. “Changing Work and Work-Family Conflict: Evidence from the Work, Family, and Health Network.” American Sociological Review 79(3): 485–516.

- Jack Lam, Phyllis Moen, Shi-Rong Lee, and Orfeu Buxton. 2016. “Boomer and Gen X Managers and Employees at Risk: Evidence from the Work, Family, and Health Network Study.” Pp. 51-73 in Beyond the Cubicle: Job Insecurity, Intimacy, and the Flexible Self. New York: Oxford University Press.

Emotions

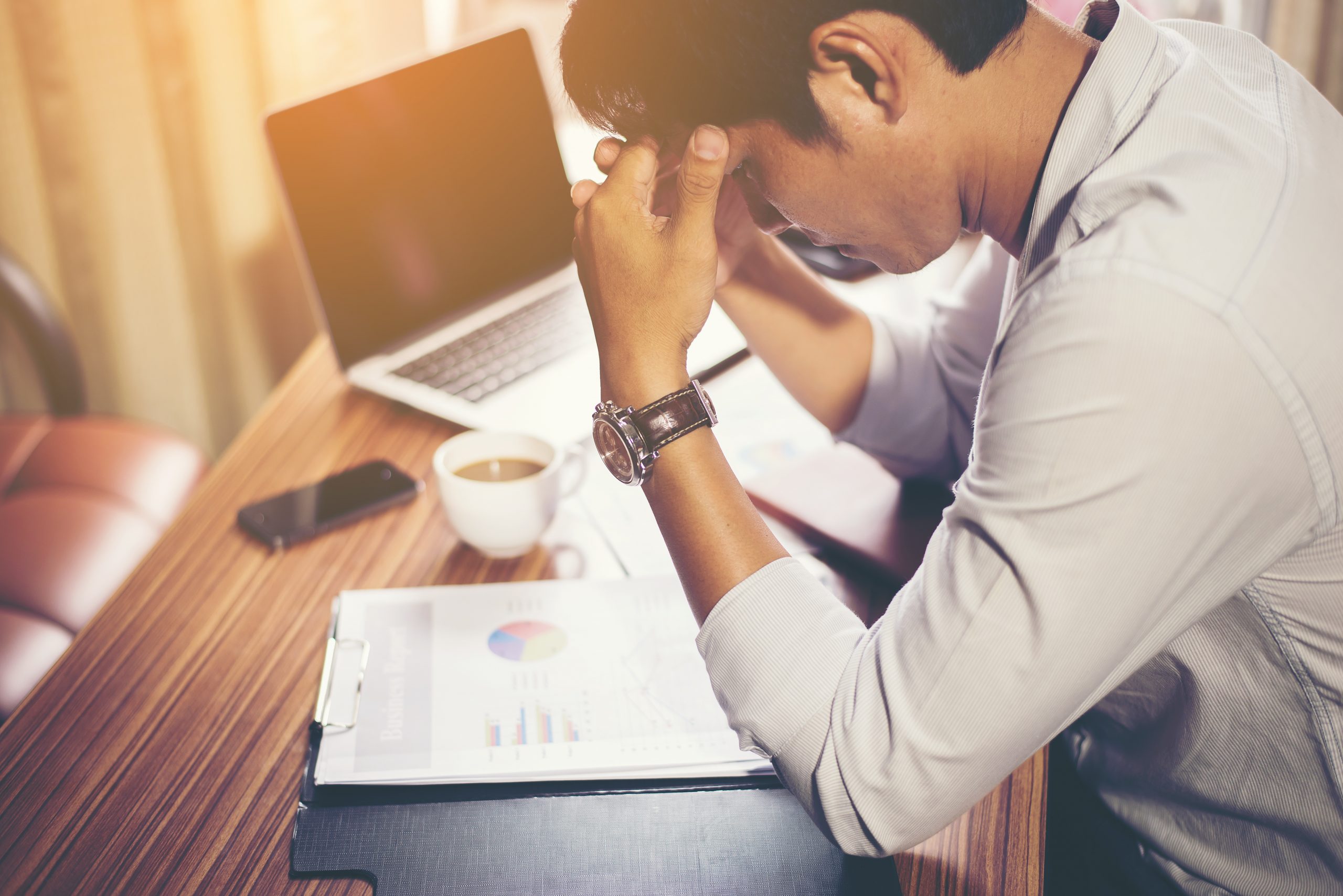

Scholars have also examined the central role emotions play in setting expectations of work and employers, as well as the broad realm of “emotional management” industries that help make uncertainty bearable for workers. Instead of improving workplace conditions, these industries provide “self-care” resources that place the burden of managing the despair and anxiety of employment uncertainty on employees themselves rather than on companies.

- Barbara Ehrenreich. 2010. Smile or Die: How Positive Thinking Fooled America and the World. London: Granta Books.

- Eva Illouz. 2007. Cold Intimacies: The Making of Emotional Capitalism. Cambridge, UK; Malden, MA: Polity.