The term “meme” first appeared in Richard Dawkins’ 1976 bestselling book The Selfish Gene. The neologism is derived from the ancient Greek mīmēma, which means “imitated thing”. Dawkins, a renowned evolutionary biologist, coined it to describe “a unit of cultural content that is transmitted by a human mind to another” through a process that can be referred to as “imitation”. For instance, anytime a philosopher conceives a new concept, their contemporaries interrogate it. If the idea is brilliant, other philosophers may eventually decide to cite it in their essays and speeches, thereby propagating it. Originally, the concept was proposed to describe an analogy between the “behaviour” of genes and that of cultural products. A gene is transmitted from one generation to another, and if selected, it can accumulate in a given population. Similarly, a meme can spread from one mind to another, and it can become popular in the cultural context of a given civilization. The term “meme” is indeed a monosyllable that resembles the word “gene”.

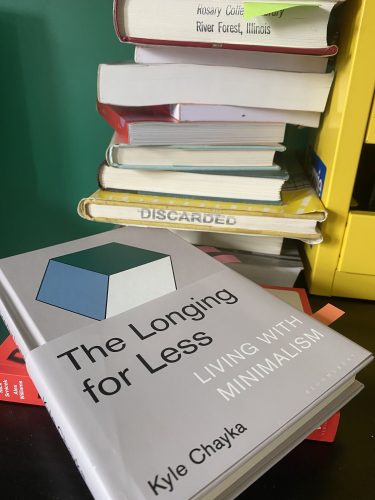

Minimalism has a way of latching on to people who want nothing to do with it. None of the artists contained in Kyle Chayka’s Longing for Less wanted to be associated with the term, and yet here they are, mostly posthumously, contained in a book subtitled Living with Minimalism. Chayka nevertheless pulls together midcentury artists like Philip Glass and Donald Judd and contemporary pop culture icons like Marie Kondo and Joshua Becker, author of the 2016 self-help-through-minimalism book The More of Less, into a single, slim volume against their will.

When it comes to sensitive political issues, one would not necessarily consider Reddit the first port of call for up-to-date and accurate information. Despite being one of the most popular digital platforms in the world, Reddit also has a reputation as a space which, amongst the memes and play, fosters conspiracy theories, bigotry, and the spread of other hateful material. It would therefore seem that Reddit is the perfect place for the development and spread of the myriad conspiracy theories and misinformation that have followed the spread of COVID-19 itself.

How is robot care for older adults envisioned in fiction? In the 2012 movie ‘Robot and Frank’ directed by Jake Schreier, the son of an older adult – Frank – with moderate dementia gives his father the choice between being placed in a care facility or accepting being taken care of by a home-care robot.

Living with a home-care robot

Robots in fiction can play a pivotal role in influencing the design of actual robots. It is therefore useful to analyze dramatic productions in which robots fulfill roles for which they are currently being designed. High-drama, action-packed robot films make for big hits at the box office. Slower-paced films, in which robots integrate into the spheres of daily domestic life, are perhaps better positioned to reveal something about where we are as a society, and about possible future scenarios. ‘Robot and Frank’ is one such film, focusing on care work outsourced to machines.

The best way I can describe the experience of summer 2019-2020 in Australia is with a single word: exhausting. We have been on fire for months. There are immediate threats in progress and new ones at the ready. Our air quality levels dip in and out of hazardous, more often in the former category than the latter. This has been challenging for everyone. For many, mere exhaustion may feel like a luxury.

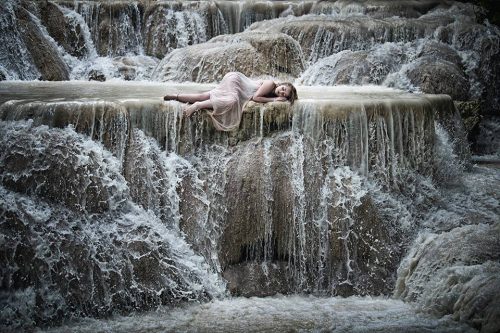

In the trenches of the ongoing fires are the Australian emergency service workers, especially the “fireys,” who have been tireless in their efforts to save homes, people, and wildlife. While the primary and most visible part of their work is the relentless job of managing fires, there is also a secondary, though critical, task of public communication: keeping people informed and providing material for anxious-refreshers looking for information about “fires near me.” In the last few days, as fires have approached the Canberra suburbs where I live, an interesting variant of public safety communication has emerged: Instagrammable photography.

Drew Harwell (@DrewHarwell) wrote a balanced article in the Washington Post about the ways universities are using wifi, bluetooth, and mobile phones to enact systematic monitoring of student populations. The article offers multiple perspectives that variously support and critique the technologies at play and their institutional implementation. I’m here to lay out in clear terms why these systems should be categorically resisted.

The article focuses on the SpotterEDU app, which advertises itself as an “automated attendance monitoring and early alerting platform.” The idea is that students download the app and then universities can easily keep track of who’s coming to class and also identify students who may be in, or on the brink of, crisis (e.g., a student only leaves her room to eat and therefore may be experiencing mental health issues). As university faculty, I would find these data useful. They are not worth the social costs.

Today, the influence of our moon Goddess foremothers is everywhere. Contemporary progressive activists dress up like witches to put hexes on Trump and Pence. The few remaining women’s bookstores in the country sell crystals and potions for practicing DIY feminist magic. There is an annual Queer Astrology conference, Tarot decks created especially for gays, and beloved figures like Chani Nicholas who have made careers out of queer-centered astrology. Almost every LGBTQ+ publication, whether mainstream or radical, features a regular horoscope column (including them.).

In this feature from last year, Sascha Cohen reflects on astrology’s recent re-ascendance and seeming ubiquity in LGBTQ+ circles, and the skepticism it is meeting among more queer-identifying people. Astrology’s pseudoscience was a nonstarter for some (mainly those from STEM fields). For others it was New Age culture’s appropriation of indigenous spirituality and, separately, the risk astrology poses as a distraction from systemic repression. A “sense of exclusion” or just being “seen as a cynic and no fun,” in one person’s words, was maybe the most common of all the complaints.

Despite these reservations, most of the queer ‘skeptics’ Cohen interviewed recognized astrology’s appeal for queer people — as a source of “meaning and purpose,” as an alternative to exclusionary religious communities, as entertainment, and one that in practice usually “centers and empowers women.” Hardly isolated from systemic anti-LGBTQ+ forces, “a recent uptick in such practices,” Cohen asserts, “may be because, [as interviewee] Chelsea argues, ‘We’re in the midst of a global existential crisis.’”

Though these responses make sense, an aspect that goes unmentioned in the piece is the part popular meme accounts and algorithmic social media appear to be playing in astrology’s current revival.

I am not an expert on Bolivian politics. I am, however, human, which is more than can be said about the Twitter accounts commenting on the Bolivian coup:

“Modelling is superficial, and anything superficial in the long run will never be good for the psyche”—this seems to be intuitively true. Although modelling might boost self-esteem, it cannot fill an inner emptiness. However, several years of participant observation, surveys and interviews in the amateur modelling scene paint a different picture. Models seem to agree that it makes them feel better. How is this possible?

Broadly speaking, there are two reasons apart from the fact that for many there is something pleasurable about it: Modelling can teach some skills relevant in everyday life and modelling can help to cope with identity.

Mark Zuckerberg testified to Congress this week. The testimony was supposed to address Facebook’s move into the currency market. Instead, they mostly talked about Facebook’s policy of not banning or fact-checking politicians on the platform. Zuckerberg roots the policy in values of free expression and democratic ideals. Here is a quick primer on why that rationale is ridiculous.