With advances in machine learning and the growing ubiquity of “smart” technologies, questions of technological agency come to the fore as matters of philosophical and practical importance. Technological agency raises deep ethical questions about autonomy, ownership, and what it means to be human(e), while engendering real concerns about safety, control, and new forms of inequality. Such questions, however, hinge on a more basic one: can technology be agentic?

To have agency, technologies need to want something. Agency entails values, desires, and goals. In turn, agency entails vulnerability, in the sense that the agentic subject—the one who wants some things and does not want others—can be deprived and/or violated should those wishes be ignored.

The presence or absence of technological agency, though an ontological conundrum, can only be assessed through empirical cases. In particular, agency can be affirmed or negated through an instance in which a technological object seems, quite clearly, to express some desire. Such a case arises in the WCry ransomware virus ravaging network systems as I write.

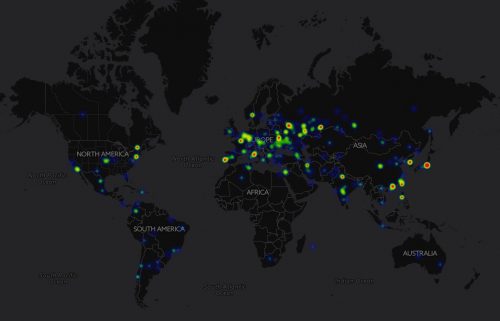

The large-scale hack has left organizations around the globe unable to access important documents and data as they struggle with a quickly spreading virus that infects networks with ransomware. The virus, variously referred to as WCry, WannaCry, or Wana Decryptor, accesses networks through an unpatched security vulnerability in the Windows operating system that persists on machines that haven’t been recently updated. On infected networks, the virus hides files and data behind a demand for substantial payment, threatening to delete important information and raise the financial ante if payments are not made. Cybersecurity experts are in a frenzy trying to contain the damage, while affected organizations are scrambling to keep functioning without the electronic systems that are otherwise integral to daily operation. Hospitals have been disproportionately affected, but telecom companies, car factories, and others have been hit as well.

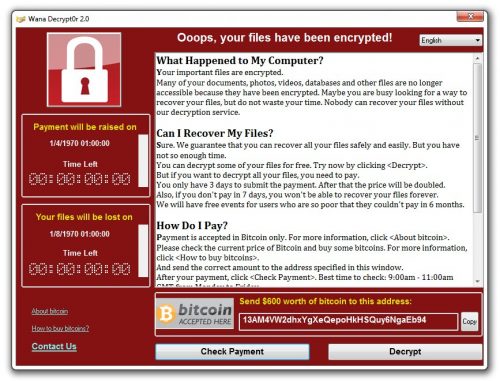

What the virus wants seems straightforward: money, in the form of bitcoin. This desire is made clear through an unambiguous pop-up window with instructional text and a ticking countdown clock that indicates how much time someone has to comply before the price goes up and files are lost forever.

I argue, however, that the virus doesn’t want anything at all, because it can’t want anything. The virus doesn’t have agency; people do. What the virus has is efficacy. Agency and efficacy with regard to technological objects are related but distinct constructs, and their place in the WCry incident presents a critical case study in how technologies work.

Ernst Schraube describes technology as materialized action. It is the material form of agentic moves on the part of designers and users, all of whom are embedded in social, structural, and institutional infrastructures. Designers imbue technologies with particular sets of values—both implicit and explicit—derived from multiple sources, including personal history and biography, cultural trends and norms, and directives from corporate and government entities. In turn, users deploy technologies for intended, unintended, and sometimes highly unexpected purposes. Technologies are built with intention, but once a technology is out there, the makers cannot maintain control.

Schraube’s notion of materialized action is a direct response to Actor Network Theory (ANT), which positions organisms and technologies in horizontal assemblages. In ANT, all parts of a socio-technical system hold equal influence, as indicated by the shared moniker of “actant.” The arrangement of chairs, desks, and a lectern, for example, creates as much as reflects power distinctions between speakers and listeners. That is, technological objects do something in their own right. Schraube begins with this technological doing posited by ANT, but diverges by prioritizing humans as a disproportionate force in the human-technology web. That is, technology is efficacious (it does something) but not agentic (it wants nothing).

While ANT would implicate the ransomware as a subject desiring cash and information, Schraube’s framework understands the WCry program as a materialization of competing agentic agendas: intelligence gathering by the U.S. government and financial exploitation by a criminal hacker element. The virus wants to collect neither knowledge nor money, but can efficaciously acquire both.

Spread through a network vulnerability identified and exploited by the National Security Agency (NSA), the virus is imbued with the goals and desires of this government institution. It is a technology of epistemology—a way of knowing—that includes distrust of U.S. and foreign citizens and an arrogant presumption of legitimate access to individual and organizational information via stored files and data.

With the NSA technology stolen and distributed by the group Shadow Brokers, WCry emerges as a money collection system and, at the same time, a powerful symbol of organizational penetrability. It demands money while flaunting the porousness of networked systems so integral to the smooth function of public life. In these ways, the program embodies multiple meanings, infused with the agencies of authoritarian forces along with hackers and social disruptors.

These agentic moves (by the NSA, Shadow Brokers, and those who deployed the tool against hospitals, states, and corporate entities) give new agency to an object that was already deeply efficacious. WCry’s capacity to do cannot be denied. What it wants, however, cannot be disentangled from the people who made, used, and co-opted the technology.

Conceptualizing technology as efficacious but not agentic centers a political orientation towards technology. The makers are agentic. The users are agentic. The objects are, to varying degrees, effective in carrying out maker-user agencies. What technologies do, then, can only reflect what a particular set of people want. Understood in this way, desirable and undesirable outcomes—or effects—can be named, located, and when needed, changed. Technologies cannot take over the world, as technologies are, always, from and of us.

Jenny is on Twitter @Jenny_L_Davis

Headline Pic Via: Source

Comments 3

Dr Stephen Jones — May 17, 2017

There is no more agency at work here than with the first computer virus.

Stephen Malagodi — May 17, 2017

What then of the autonomy of complex interconnected systems, like capitalism (think of Smith's 'invisible hand') or the corporate/nation state (think of Hobbes' Leviathan)? We naturally ascribe agency to these systems, i.e. "China wants", or "U.S. interests".

It may be helpful to make a distinction between "wants" and "desires". Water "wants" to flow downhill, but its "desire" to do so is unknowable. The problem is that once systems become sufficiently complex, the distinction becomes difficult to see. We can see, however, a tendency shared by complex biological systems and complex industrial systems: once they are able to be identified, they immediately exhibit the ~desire~ to survive as that identity.

Luis ALBEROLA — May 19, 2017

Pushing towards understanding technology as agentic is a simple way to avoid facing responsibility for lawful but arguably immoral actions by corporate officers. It is very similar, to my mind, to the push to give corporations legal personality and to consider them agentic.

In this regard, technology is as terrifying an instrument of power, and therefore of good and/or evil, as religion once was.