Nextdoor is a local social network that connects people who live in the same neighborhood. Neighbors use it to exchange information and keep up with the goings-on of a geographically bounded community. Nextdoor seems like a relatively innocuous site for block party advertisements and zoning debates, and it is. It is also a site on which racial profiling has emerged as a problem and, in response, a site on which important debates are currently playing out.

In short, people on Nextdoor have been reporting crimes in which race is the primary descriptor of the suspect, casting suspicion upon entire groups of people and instigating or exacerbating racial tensions among neighbors.

Nextdoor CEO Nirav Tolia does not want his site to be a space for racial profiling, and recently instated a policy to ameliorate the problem. The policy is simple: Do not racially profile. What is contentious, however, is how this policy is enacted.

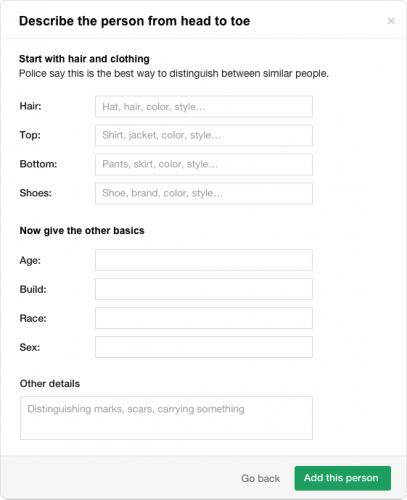

In contrast to Facebook (and, more recently, Twitter), whose terms of service warn users that they can be censured or removed for discriminatory language, Tolia instructed his employees to build anti-profiling conduct into the site's architecture. Specifically, the site provides a crime reporting form in which a report that names the suspect's race can be posted only if it also includes two additional descriptors (e.g., clothing and hairstyle). In addition, reports that mention race must be of sufficient length; otherwise, an algorithm flags them and they may be removed.
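The two rules described above can be sketched as code, which makes the point about built-in politics concrete. This is a minimal sketch under stated assumptions, not Nextdoor's implementation: the class and function names (`SuspectDescription`, `can_post`, `should_flag`) are hypothetical, and the 100-character threshold is an invented placeholder, since the actual length requirement is not given here.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical threshold: the real minimum length is not stated in the post.
MIN_DESCRIPTION_CHARS = 100


@dataclass
class SuspectDescription:
    # Hypothetical form fields, for illustration only.
    race: Optional[str] = None
    # Non-racial descriptors, e.g. {"clothing": "red jacket", "hair": "short"}
    other_descriptors: Dict[str, str] = field(default_factory=dict)


def can_post(desc: SuspectDescription) -> bool:
    """Form-level rule: race may be submitted only alongside two non-racial descriptors."""
    if desc.race is None:
        return True  # no racial designation, so no extra requirement
    # Count only descriptors that are actually filled in (ignore blank strings).
    filled = [value for value in desc.other_descriptors.values() if value.strip()]
    return len(filled) >= 2


def should_flag(report_text: str, mentions_race: bool) -> bool:
    """After-the-fact check: short reports that mention race are flagged for review."""
    return mentions_race and len(report_text.strip()) < MIN_DESCRIPTION_CHARS


if __name__ == "__main__":
    only_one = SuspectDescription(race="<as reported>", other_descriptors={"clothing": "red jacket"})
    print(can_post(only_one))  # False: only one non-racial descriptor
```

Keeping the two checks separate mirrors the distinction in the text: the form blocks a post outright, while the length rule only tags a report that has already been submitted.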

Nextdoor's tactic exemplifies the politics inherent in code and algorithms, so it is unsurprising that its anti-profiling code and algorithms have become the subject of political debate. While the CEO makes a strong case for moving away from race-based criminalization, opponents see the new requirement as an impingement upon free speech and as a potential safety threat: if race is the only identifier a witness perceives, that witness is prevented from posting about potential dangers. As quoted on NPR, one person wrote to the site administrators to complain: “Why would you engage in anything that limits people’s expression? And especially people who are trying to keep their neighborhoods safe?”

The debates over Nextdoor's anti-profiling code, and their potential outcomes, can be well explained using a gradated theory of affordance.

Broadly, an affordance is what a technological object enables and constrains, given a particular user. Historically, "affordance" has been applied to each object in a blanket way: the object either affords a particular action or it does not. A gradated theory of affordance instead recognizes that a technological object affords in degrees.

In a gradated theory of affordance, technological objects request, demand, allow, encourage, and refuse[1]. Requests and demands are how an object influences the user, while allowances, encouragements, and refusals are how an object responds to a user's desired actions. In the case of Nextdoor, the written policy, like Facebook's and Twitter's, requests that participants refrain from racial profiling. The new form, which requires multiple non-racial identifiers, demands it. In turn, the written instruction allows racial profiling, while the form refuses to let profiling persist.

Thus, the debate surrounding Nextdoor is not about whether racial profiling is acceptable, but about whether anti-profiling policy should come as a request or a demand, and whether the site's response to racist acts should be an allowance or a refusal.

The pilot program, which rolls out nationally in a few weeks, comes down on the side of demand and refusal.

The demand that users include multiple identifiers for the alleged perpetrator and provide a description of sufficient length does two important things, one practical and the other ideological. First, it makes the content itself more precise, reducing broad accusations against entire local populations and aiding law enforcement, which certainly benefits from greater detail. Second, and this is admittedly aspirational, it could teach people to perceive differently.

Communication technologies don't just inform how we write and share, but also how we frame the world and our place within it; social media doesn't just shape how we communicate, but how we are. As bloggers, we at Cyborgology craft key points into 140-character tweetable lines. Likewise, Instagrammers make their way to sunlit mountains and ask their friends to wait a moment before eating so they can capture content with a particular aesthetic, while Facebookers show up to the party, even when they feel tired, partly because they want to be included in the documentation of the event: they want to be part of the archive.

Social psychologists have found that, along with gender, race is the first thing people notice about someone else. By demanding a new practice of documenting, Nextdoor may, at the same time, usher in a new practice of looking. To be sure, and as Tolia himself notes, forms are not the solution to racism. Users can (and likely will) revert to coded language and more granular ways of signifying race. But users will be encouraged to look more holistically, and I hope, perhaps too optimistically, that they will unlink race and criminality, at least to some degree.

Jenny Davis is on Twitter @Jenny_L_Davis

[1] "Refuse" is an addition to the original formulation linked here. An extended version is under review in manuscript form.