Cory Doctorow’s recent talk on “The Coming Civil War Over General Purpose Computing” illuminates an interesting tension that, I would argue, is an emerging result of a human society that is increasingly augmented: not only are the boundaries between atoms and bits increasingly blurry and meaningless, but we are also caught in a similar process regarding categories of ownership and usership of technology.

Understanding the tension between owners and users – and the regulatory bodies, both civil and corporate, who would like to have greater degrees of control over both – is necessarily going to be a consideration of the distribution of power in augmented human experience. If the categories of user and owner are increasingly difficult to differentiate clearly, it follows that we need to examine how power moves and where it’s located as the arrangements shift. I don’t mean just the question of whether users or owners have more power, but what kind of power they have, as well as who is losing what kind and who is correspondingly making some gains.

Doctorow’s initial point – and it’s an important point from which to start – is that not only is human life increasingly augmented, but it’s augmented by a collection of technologies that are at once more and less diverse than they used to be:

We used to have separate categories of device: washing machines, VCRs, phones, cars, but now we just have computers in different cases. For example, modern cars are computers we put our bodies in and Boeing 747s are flying Solaris boxes, whereas hearing aids and pacemakers are computers we put in our body.

If we understand these devices as “general purpose”, as Doctorow does, then power within that context takes on a very specific meaning: who controls what programs can run on these devices and how that ends up affecting how the devices are used? Owners? Users? Regulatory bodies? Corporations?

Traditionally we’ve understood an owner of something to have pretty much complete control over its use, within reason; this is fundamental to a lot of how we culturally conceive of private property rights. When we buy something, when we spend money on it and consider it ours, it’s been tacitly understood that we then control how it’s used, at least within the boundaries of the law. If you buy a car, you can have it repainted, switch out the parts for other parts, enhance and augment it largely to your heart’s content. If you buy a house, you can knock down walls and build extensions. I would argue that we tend to instinctively think of technology the same way: we – or, to paraphrase William Gibson, “the street” – find our own uses for things, and those uses aren’t subject to much constraint.

But increasingly, we can’t assume that.

When it comes to general purpose computing, both corporations and corporate-esque bodies with regulatory interests are exercising ever-greater degrees of control over what programs can and can’t run on our devices – in other words, how our “owned” devices can and can’t be used. As Doctorow points out:

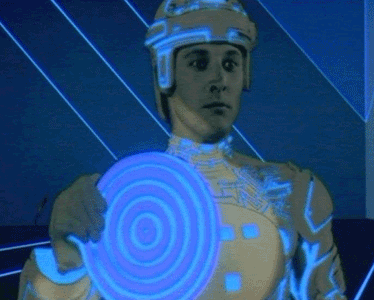

We don’t know how to make a computer that can run all the programs we can compile except for whichever one pisses off a regulator, or disrupts a business model, or abets a criminal. The closest approximation we have for such a device is a computer with spyware on it— a computer that, if you do the wrong thing, can intercede and say, “I can’t let you do that, Dave.”

Such a computer runs programs designed to be hidden from the owner of the device, and which the owner can’t override or kill. In other words: DRM. Digital Rights Management.

Things like DRM are clearly problematic because they erode our very idea of what it means to be an owner of something; we can use it, install and run programs on it, and customize it, but only to a certain degree. Other entities can stop us from doing something with our devices that they don’t like, often through coercive means both subtle and not-so-subtle. And that line between okay and not-okay is subject to change, sometimes without much notice. Owners – people whose devices would traditionally be understood as their property – increasingly resemble users in many respects: people who can use and sometimes even alter or customize a device, but who don’t actually own it and whose power vis-à-vis the use of that device is necessarily limited. And, as Doctorow goes on to note, we are increasingly users of devices that we don’t even arguably own (such as workplace computers).

PJ Rey wrote an excellent piece in this vein a while back on Apple – probably one of the more egregious offenders here. Apple, PJ notes, makes use of an aura of intuitive, attractive, user-focused design to suggest to its customers that it is empowering them – but this sense of empowerment is ultimately an illusion. Apple doesn’t want owners, it wants largely passive users – people who pay for the privilege of using the device but who will submit to the nature of that usage being severely curtailed:

[B]y burying the inner-workings of its devices in non-openable cases and non-modifiable interfaces, Apple diminishes user agency—instead, fostering naïveté and passive acceptance.

Even when a company is less overt about its desire to control the devices it’s selling, the presence of a net connection coupled with firmware updates can reveal ways in which “owners” of a device have little control over what programs actually run on that device and how it can be used. I own a PlayStation 3, and periodically I’m required to download a firmware update. I essentially have no choice in whether or not I download this update – I’m required to signal my agreement, but not doing so would deny me access to a number of features that pretty much make it possible for me to use the PS3 for the very things I bought it to do. I wouldn’t be able to access PSN (PlayStation’s online store and software update network), which would mean that many of my games would be unplayable; they require regular software updates to run at all.

But by accepting one of these system firmware updates, I removed the ability of my PS3 to run a Linux-based OS – something that many users have found preferable and more flexible than the PS3’s default OS. The device I own is now less functional; I traded a greater loss of functionality for a lesser one. Either way, I was reminded once again that I don’t necessarily “own” the device that is arguably my private property.

So power is in flux. It’s subject to a particular kind of contention here, and I’d argue that the form of that contention – or at least some of its elements – is new.

This picture is further complicated when we consider programs themselves. I’m old enough to remember a time when you bought software and it was basically yours in the traditional sense: you could install it on as many devices as you wanted and an internet connection wasn’t necessary for constant confirmation that you had actually paid for it. Where software is concerned, licensing is arguably supplanting traditional ideas of ownership – you are essentially paying for the privilege of installing it on a severely limited number of devices and you’re required to go through verification processes that frequently serve to make you feel like some kind of digital shoplifter.

Finally, Doctorow points out how this is all still further complicated by the ways in which people’s bodies are physically augmented and are likely to be so in the future (here he contrasts issues specific to owners with issues specific to users):

Most of the tech world understands why you, as the owner of your cochlear implants, should be legally allowed to choose the firmware for them. After all, when you own a device that is surgically implanted in your skull, it makes a lot of sense that you have the freedom to change software vendors. Maybe the company that made your implant has the very best signal processing algorithm right now, but if a competitor patents a superior algorithm next year, should you be doomed to inferior hearing for the rest of your life?…

[But] consider some of the following scenarios:

• You are a minor child and your deeply religious parents pay for your cochlear implants, and ask for the software that makes it impossible for you to hear blasphemy.

• You are broke, and a commercial company wants to sell you ad-supported implants that listen in on your conversations and insert “discussions about the brands you love”.

• Your government is willing to install cochlear implants, but they will archive everything you hear and review it without your knowledge or consent.

The point at which physical bodies are physically augmented by technology is a crucial crossroads here, one that Doctorow discusses but where I also think he could go further: the question of human rights vs. property rights. Doctorow is undoubtedly correct when he notes that users and owners don’t necessarily have the same interests – indeed, sometimes their interests conflict. But I think it’s also important to emphasize once again that the delineation between the two concepts isn’t always clear anymore – if it ever really was – and is likely to become less so. And along with the uncertainty about the boundaries between these two groups comes uncertainty regarding whether we can still meaningfully differentiate between property rights and human rights, when we not only own but are our technology.

On this blog we’re very used to the idea of categories collapsing. Once we accept that those categories are collapsing, we have to ask ourselves what exactly that means – or might end up meaning in the long run. What we have now are questions – about where the power is, about where it’s going, and to what degree agent-driven technology use can survive the coercive control of corporate and government regulation of those technologies – especially when human life and experience and our very physical nature are so deeply augmented.

One final theoretical element that I think is useful here – and to which Doctorow makes no direct reference, though I think there’s a lot of room for it in his talk as well as a lot of indirect links already made – is Foucault’s concept of biopower – of power exercised by state institutions by and through and within physical bodies. The idea is an old one now – but within the context of the above, I think it’s changing in some significant ways. When technology is subject to institutional control, it’s deeply meaningful when that technology is literally part of our bodies – or so deeply enmeshed with our daily lived experience and our perceptions of the world around us that it might as well be. And when the lines between government institutional control and corporate institutional control become blurry in their turn, the traditional meaning of biopolitics is additionally up for grabs.

One of the more famous phrasings of the recent spate of technology-critical writing is Jaron Lanier’s You Are Not a Gadget. But more and more, that’s exactly what we are – we are our technology and our technology is us. Given that, we now need to understand how to defend our rights – property and humanity, users and owners, digital and physical, and all the enmeshings in between.

Comments

Chris — September 8, 2012

Nicely done, thanks.

I swear a few months ago HP downloaded a firmware upgrade to my all-in-one that made it quit printing because "using stale ink could damage the printer". Scanning quit working just before that. About time to go all Office Space on it.

But as you said, what choice do we have? I set most stuff to accept updates automatically, I spend enough of my life at a computer as is.

Doug Hill — September 8, 2012

Dear Sarah:

Thanks for this piece -- a very important subject. I recently wrote a blog entry that pertains to this, quoting Ellen Ullman's point that the proprietary nature of the computerized trading programs on Wall Street makes it virtually impossible to prevent flash crashes and other trading fiascoes from occurring. The trading firms that use those programs can't control them because they don't own or understand the software and hardware they run on.

As I've mentioned to Nathan Jurgenson, I find it difficult to accept the Cyborgology-approved idea that the dividing line between human beings and their technologies has disappeared or is in the process of disappearing, and I think the distinction between "owner" and "user" being discussed here is another reason that's a questionable proposition. If it's true that "we are our technology and our technology is us," then we have to accept that who we are is increasingly defined by others, most of whom don't have our best interests at heart. The "me" part of the equation is increasingly surrendered.

Of course that's exactly why the rights questions you address here are important. I guess what I'm saying is that I have my doubts whether it's a correctable problem.

Erdogan Sima — September 9, 2012

Good that you brought up the political significance of ontological uncertainty, aka "blurry line".

If I may add, and beyond government/corporate institutional controls, that where such uncertainties abound (e.g. "blurry line" between policing & warfighting) the traditional meaning of biopolitics multiplies. As such, rather than additionally, as you say, its meaning is necessarily unstable: up for grabs. So, one first needs to believe in a stable "traditional meaning" before claiming to have it grabbed, the way people imagine a "pure offline" (big ups to Nathan) before claiming for their disconnected soul.

Biopolitics escapes the certainty of a definition, in the way it is superficially political (vs. profoundly meaningful) to remain always on the loose at some blurry line. As to us, we could be deemed biopolitical, say, in the way we negotiate the uncertainties of "owner/user" distinction, and in the face of relentless attempts to grab us -- either as owner or as user, as it were, "of technology". This duality, then, is what makes the biopolitics of technology (yet another struggle-in-flux) possible, from SOPA to the Church of Kopimism, and to your coming to terms with "your" PS3.

All uncertainties, in short, we should be thankful for them.

Empowerment without Independence » Cyborgology — September 10, 2012

[...] week Sarah Wanenchak (@dynamicsymmetry) and Whitney Erin Boesel (@phenatypical) separately broached the tensions [...]

Robert — September 11, 2012

Possibly the transition from owned technology to provisional user can be modeled as a consequence of the fear of litigation. The American mentality revolves around a rather strong sense of entitlement with responsibility as a secondary consideration. As individuals adopt increasingly complex and non-intuitive technology, the risk of damage or injury due to uninformed use also increases. The reflexive response is to sue the manufacturers rather than accept responsibility for foolish behavior (understandable in light of the long history of irresponsible "snake-oil" salesmen and similar negligence on the part of manufacturers). As a reaction to the growing risk of lawsuits, companies transform their products into "black boxes" of mysterious powers, a Pandora's box and magician's top hat in one: an object capable of miracles but woe be the fool who voids the warranty. After many iterations of litigation and obfuscation, we converge on the current state, where the "owner" is simply leasing the product. Maybe? Is this an inappropriate oversimplification?

Free as in Beer: Power and design » Cyborgology — January 4, 2013

[...] restrictions on that use. And this struggle is intimately connected to a much larger one, that of the increasingly problematic distinction between users and owners, between the freedom and associated risks assumed by someone who truly owns a thing, and the safer [...]

User-Renters in SimCity » Cyborgology — March 14, 2013

[...] should we care about this? Most simply, because it’s a continuation of an ongoing trend: The recategorization of technology owners as technology users, of the possession of private property transformed into the leasing of property owned by others, [...]

The Xbox One Reveal and Why it’s Revealing » Cyborgology — May 23, 2013

[...] In other words, owners increasingly = users. [...]

The Gamers Tribune | The Xbox One and Always-Online DRM - The Gamers Tribune — June 25, 2013

[...] And then of course you have the tricky issue of private property ownership and claims that always-online DRM is more akin to borrowing than buying, since the game will become inoperable once the publisher takes down the servers. Instead of buying ownership of digital media you are simply purchasing the rights to use digital media temporarily, the true ownership of which remains in the hands of the publishers, or so the argument goes. [...]

Keurig: Your New Coffee Overlord » Cyborgology — March 7, 2014

[…] control. For a corporation, a lease is always going to be more attractive than a sale. If they can turn owners into users, they […]

Apple’s Health App: Where’s the Power? » Cyborgology — September 30, 2014

[…] at least eventually. Apple already has a kind of control over a device that’s a bit worrying, blurring the line between owner and user and threatening to replace one with the other. The Health app is a glimpse of a kind of […]

Apple Health : qui a le pouvoir ? - Ressource Info — December 11, 2014

[…] to take certain populations into account, and the impossibility of deleting an application, which further blurs the question of who owns the device you buy, above all underscores how extremely paternalistic this kind of approach remains. In the end, nothing […]