It’s probably appropriate that amidst a torrent of harassment and abuse directed at marginalized people following the election of noted internet troll Donald Trump, Twitter would roll out a new feature that purports to allow users to protect themselves against harassment and abuse and general unwanted interaction and content. Essentially it functions as an extension of the “mute” feature, with broader and more powerful applications. It allows users to block specific keywords from appearing in their notifications, and to mute conversation threads they’re @ed in, effectively removing themselves from those conversations.

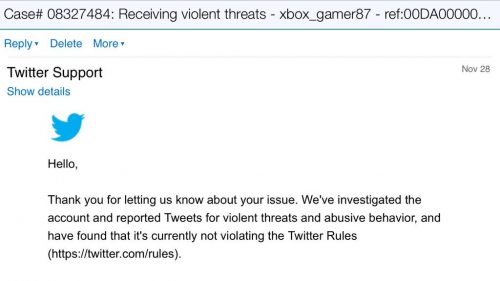

In a lot of ways, this seems like a good feature and a useful tool. Among other things, it addresses problems with Twitter’s abuse reporting system, where people reporting abusive tweets are told that the tweets in question don’t violate Twitter’s anti-abuse policy. As Del Harvey, Twitter’s head of “trust and safety”, explains it:

“We really tried to look at why – why did we not catch this? And maybe the person who did that front-line review didn’t have the cultural or historical context for why this was a threat or why this was abuse.”

In that same Bloomberg piece, it’s noted that there’s also a new option to report “hateful conduct”, and that abuse team members are being retrained in things like “cultural issues”. Also good. Especially right now, when – despite Melania Trump’s charmingly quixotic stated mission to protect everyone from her husband on Twitter – there’s likely to be a significant upswing in this kind of profound ugliness, probably for a long time.

Here’s the problem, though. And it’s more of a quibble, but it’s worth the quibbling.

The primary thrust of Twitter’s new initiative is oriented toward the target. By which I mean, what looks like putting power in the hands of a user actually has the potential to put responsibility on them for their own safety. Which a lot of people would probably think is perfectly reasonable, and I agree – to a point.

The issue is that it’s very easy to do something like this – toss something into someone’s lap for them to use – and adopt the assumption that this is the best strategy for dealing with the deeper problem. Which isn’t that abusers are able to reach their targets. It’s that the abusers are there at all.

Here’s where someone says hey, that’s the internet, what do you expect? And yeah, I know. Believe me, I know. But what I expect? Is more than putting responsibility on a user in the guise – even if it’s not entirely a guise – of giving them power. I understand that it’s very difficult to kick these people out and keep them out. I understand that it’s just about impossible. I appreciate that Twitter does seem to be doing work in that direction. But it’s not enough. What I expect is that we’ll create spaces where we don’t have to worry about muting these people because they never start talking in the first place.

There’s also the issue that when you successfully ignore something without removing it, you can actually enable its presence. Which is not to say that users shouldn’t take full advantage of this feature, but instead to say that Twitter should remember that just because you can’t hear it, that doesn’t mean it isn’t there.

And it doesn’t mean it isn’t getting worse.

There’s more work to do.

Sunny is on Twitter – @dynamicsymmetry