The concept of the Uncanny Valley is pretty well known by now – the idea, popularized by robotics professor Masahiro Mori, that as non-human objects approach a human likeness they start, for lack of a better phrase, to really creep us the hell out. It’s a fairly simple idea, and one that usually seems reasonable to us on both a visceral and an intellectual level, at least partly because most of us have experienced some version of it at one time or another. But there are parts of this concept that often go unexplored, which is what I want to deal with here.

In his essay on what he terms “anthropomorphobia”, Koert van Mensvoort notes that products are increasingly made to appear humanesque or to possess human-like qualities. Our coffeemakers talk to us, robots clean our floors, vending machines are programmed to be friendly to us as they sell us things. Van Mensvoort argues that what potentially makes us uneasy about this is not only the blurring of the human/non-human boundary, but the blurring of the people/product boundary. In other words, what makes this particular valley so uncanny is not just that it suggests that we might have to rethink what “human” actually means, but that we are forced to confront the degree to which our own bodies are increasingly managed, produced, and marketed to us as products:

> It is becoming less and less taboo to consider the body as a medium, something that must be shaped, upgraded and produced. Photoshopped models in lifestyle magazines show us how successful people are supposed to look. Performance-enhancing drugs help to make us just that little bit more alert than others. Some of our fellow human beings are even going so far in their self-cultivation that others are questioning whether they are still actually human − think, for example, of the uneasiness provoked by excessive plastic surgery.

I would actually extend this point to argue that to the degree that products – and objects/machines in general – take on increasingly human-like qualities, we’re actually pretty much okay with this, provided that the objects fail in an extremely evident fashion. I would even argue that we like objects to become slightly human-like, precisely because their failure to truly become human both solidifies our self-concept as an ideal creature and strengthens our perception of our constructed categories. We like a Good Robot that wants to be human but never truly can be. It places our essential humanness firmly out of reach; it makes it exclusive to us alone. It makes us feel unique and special.

So where we dip into the Uncanny Valley is in the threat that these objects might actually succeed. Suddenly our humanness is not so exclusive. Suddenly we’re not so special anymore.

As Jenny Davis observed in her post on Lance Armstrong, one of the things that makes us most uncomfortable about something – human or non-human – that threatens to transgress the human/non-human divide is the degree to which it calls to our attention how blurry and porous that divide actually is. We don’t really want to confront our truly cyborg nature, because our existing ideas of what makes up a “natural” human being are intensely important to our understanding of ourselves. We need boundaries and binaries; we desperately want to be one thing or the other, because that Other is so often the standard against which we measure ourselves. As long as the non-human remains an Other, we’re safe.

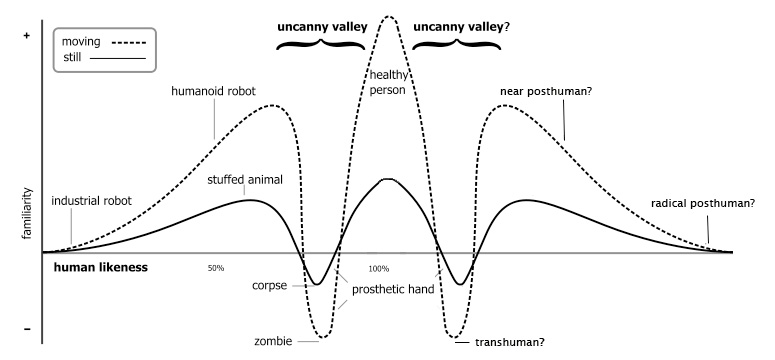

But again, as Jenny so rightly points out, there’s another side to this. One of the things that often goes unexplored when we consider the Uncanny Valley is whether there’s actually an Uncanny Valley on the other side of the category of the fully “natural” human ideal. In other words, whence transhumanism?

Jamais Cascio has an interesting theory about this, one that essentially amounts to: Not only are evident augmentations/enhancements in humans likely to provoke a negative gut-level response, but it’s actually the near-human augmentations that will provoke that response the most intensely. In other words, there is indeed another side to the typical Uncanny Valley graph, and it’s a mirror image. As human augmentations and enhancements extend further and further from our conventional ideas of what it is to be human and toward the truly posthuman, our negative response will decrease in intensity.

This may or may not be so – it’s difficult to be sure in what are arguably still early days of this particular kind of human augmentation. But again, I would take this a step further: as both Jenny and I have argued, what makes us the most uneasy right now about human augmentation is the idea that it might make people – especially people with disabilities – better than abled humans. We can usually stomach humans with close relationships to objects and machines, provided they don’t begin to transgress the boundary that not only delineates a category but defines that category as an ideal.

By the same token, there are few things that frighten us more than the idea that a machine might not only seek to be fully human – and succeed – but that it might desire to be better than human. And to replace us, in the end.

In short, we need to understand the Uncanny Valley not only as an instinctive reaction to something unexpected – often described as neurological in nature – but as a profound disruption of our constructed categories of identity. It’s the threatened removal of the line between self and Other; it threatens our definitions of who we are. This threat works in both directions; we’re not only afraid of what might become us, but of what we might become.

Sarah is creepily near-human on Twitter – @dynamicsymmetry

Comments

Thomas Wendt — December 13, 2012

I love the extension of Mori's graph. I've actually been working on a similar model that puts current weak AI products like Siri and Google Now on one side of the valley, and strong and sentient AI on the other. There is some good research that suggests "smart" products are beginning to approach a valley in which user comfort falls drastically as privacy concerns become pronounced. But on the other side, comfort rises again when the utility of these products is realized. There seems to be a distinct trust factor involved that only comes with experience: as we use these products without privacy breaches, trust rises with utility. Or it can all go to hell if the data is used in malicious ways, by either humans or machines.
