A few weeks back, I wrote a post about special pieces of technology (e.g., backpacks, glasses, a Facebook profile) that grow so integrated into our routines that they become almost invisible to us, seeming to act as extensions of our own consciousness. I explained that this relationship is what differentiates equipment from tools, which we use occasionally to complete specific tasks but which remain separate and distinct from us. I concluded that our relationship with equipment fundamentally alters who we are. And, because we all use equipment, we are all cyborgs (in the loosest sense).

In this essay, I want to continue the discussion about our relationship with the technology we use. Adapting and extending Anthony Giddens’ Consequences of Modernity, I will argue that an essential part of the cyborganic transformation we experience when we equip Modern, sophisticated technology is deeply tied to trust in expert systems. It is no longer feasible to fully comprehend the inner workings of the innumerable devices that we depend on; rather, we are forced to trust that the institutions that deliver these devices to us have designed, tested, and maintained the devices properly. This bargain—trading certainty for convenience—however, means that the Modern cyborg finds herself ever more deeply integrated into the social circuit. In fact, the cyborg’s connection to technology makes her increasingly socially dependent because the technological facets of her being require expert knowledge from others.

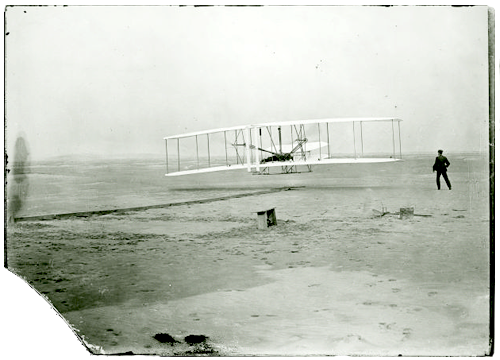

Let us begin by further exploring why Giddens claims that the complexity of the Modern world requires a high degree of trust. Consider the experience of flying on an airplane. Perhaps the typical passenger has vague notions of lift and drag, but she is certainly not privy to the myriad formulas used to calculate the precise mechanics that keep the craft airborne. Whereas the Wright Brothers could grasp every part of their famous “Flyer,” a single engineer can no longer be expected to understand all the various systems that comprise a modern aircraft. In fact, the design team for a plane is likely so segmented and specialized that it would be impossible to fit a team capable of understanding the whole craft inside the craft itself. Giddens explains that complex technologies such as airplanes are “disembedded” from the local context of our lives and our social relations; that is to say, we lack direct or even indirect experiential knowledge of modern technology. Instead, our willingness to, say, hurl ourselves 30,000 feet above the Earth in an aluminum cone derives solely from our trust in expert systems. Importantly, this trust is not in individual experts, but in the institutions that organize and regulate their knowledge as well as the fruits of that knowledge.

Modern-day cyborgs are characterized by profound trust in both technology and the expert systems that create it. That is to say, in order to make use of complex technology, we have to accept a limited understanding of it and simply assume that it was properly designed and tested. However, trust is not merely passive acceptance of a lack of understanding; it also involves a commitment. Giddens (CoM, pp. 26-27) explains:

Trust […] involves more than a calculation of the reliability of likely future events. Trust exists, Simmel [a Classical sociologist] says, when we “believe in” someone or some principle: “It expresses the feeling that there exists between our idea of a being and the being itself a definite connection and unity, a certain consistency in our conception of it, an assurance and lack of resistance in the surrender of the Ego to this conception, which may rest upon particular reasons, but is not explained by them.” Trust, in short, is a form of “faith,” in which the confidence vested in probable outcomes expresses a commitment to something rather than just a cognitive understanding.

The use of complex technology involves an element of risk (e.g., crashing back to Earth). The cyborg’s confidence in the expert systems behind technology must be strong enough to outweigh any perceived risks from using that technology. Once we have equipped a piece of technology, we become dependent on it. We make decisions that assume it will function fully, and its failure can be perilous. A rock climber, for example, places her life in the hands of her harness (and the experts who engineered it) every time she scales a rock face. She cannot know with certainty that the metal of her carabiner has been properly alloyed, but her confidence, and her life, rest on the belief that the expert system has not failed. This trust in equipment demonstrates an existential commitment to technology. Giddens (CoM, p. 28) elaborates:

Everyone knows that driving a car is a dangerous activity, entailing the risk of accident. In choosing to go out in the car, I accept that risk, but rely upon the aforesaid expertise to guarantee that it is minimised as far as possible. […] When I park the car at the airport and board a plane, I enter other expert systems, of which my own technical knowledge is at best rudimentary.

Being a cyborg is risky business; we must depend on the expertise of others to ensure that our equipment is fit for use. This radical dependency on expert systems (and on the societies that create them) makes cyborgs fundamentally social beings. In fact, it is through dependency on technology, and the subsequent loss of self-sufficiency, that we express our commitment to society. Technology has always been part and parcel of the division of labor. Think bows and shovels. In this sense, being a cyborg requires not only trust in technology producers, but trust in other technology users. There is no such thing as a lone cyborg. The birth of the cyborg marks the death of the atomistic individual (if such a thing ever existed). Donna Haraway rightly contrasts the cyborg with the Romantic goddesses channeled in small lakeside cabins. Cyborgs are cosmopolitan.

This is not to say that Modern-day cyborgs are incapable of being critical of technology or expert systems. On the contrary, the cyborg’s humility in admitting her own dependencies leads her to acknowledge the importance of struggling to enforce certain values within techno-social systems, rather than plotting a Utopian escape (the sort that had currency with Thoreau and other Romantics and that continues to be idealized by cyber-libertarians who view the Internet as a fresh start for society). My favorite Haraway quote explains:

This is not some kind of blissed-out technobunny joy in information. It is a statement that we had better get it – this is a worlding operation. Never the only worlding operation going on, but one that we had better inhabit as more than a victim. We had better get it that domination is not the only thing going on here. We had better get it that this is a zone where we had better be the movers and the shakers, or we will be just victims.

Cyborgs always see the social in the technological; the “technology is neutral” trope is a laugh line.

Nowhere are mutual trust and co-dependency more apparent than with social media. Few of us have any clue how the Internet’s infrastructure delivers our digital representations across the world in an instant. This lack of knowledge means simply that we must trust that platforms such as Facebook or Google are delivering information accurately. As the Turing test illustrates, a computer can, if the system allows, fool us into believing we are communicating with someone who is not present or who does not even exist. Moreover, on platforms such as Facebook, we also must trust the system to enforce a norm of honesty. If we cannot trust that other users are honestly representing themselves, we become unsure of how to respond. Honesty and accuracy of information are preconditions to participation. And because, as individuals, we lack the capacity to ensure either, we must place our trust in experts. We users do not understand the mechanics of Facebook; we simply accept it as reality. That is to say, Facebook is made possible through widespread suspension of disbelief. Thus, using social media is a commitment to pursuing the benefits of participation, despite the risk that we could be fooled or otherwise taken advantage of. Facebook is not social merely because it involves mutual interaction; it is social because trust in society’s expert systems is a precondition to any such interaction.

Follow PJ Rey on Twitter: @pjrey

Comments 28

Tom — November 23, 2011

"It is no longer feasible to fully comprehend the inner workings of the innumerable devices that we depend on; rather, we are forced to trust that the institutions that deliver these devices to us have designed, tested, and maintained the devices properly."

I'd say the above could be replaced with:

"It was never feasible to fully comprehend the inner workings even of the simplest devices that we depend on, including our own bodies; rather, we are forced to trust causes on the basis of their predictable effects and proceed accordingly."

That is, I don't think the 'trust' you describe or the lack of full comprehension are particular to a technologically advanced age. People did not understand water wheels, longbows, stirrups, keels, oars or other objects any more than they now understand circuits, disc taps, rubber etc.

Thomas Wendt — November 23, 2011

I wonder if this radical trust is justified on the grounds that these expert systems are always trying to make a sale. In other words, since they have a very tangible monetary investment at stake, are they worth trusting? Does their investment decrease the amount of potential hesitancy toward them?

Cyborg insects and trust « FrogHeart — November 23, 2011

[...] P. J. Rey’s Nov. 23, 2011 posting about trust and technology on Cyborgology, In this essay, I want to continue the discussion about [...]

Doug Hill — November 24, 2011

Thanks for this thoughtful essay, PJ. A couple of (long-winded) points in response:

I totally agree that trust in technologies, their makers and their users is a fundamental commitment we make in a technological society, but the plethora of evidence that our trust has been misplaced accounts, I think, for the fact that we live in a time of widespread existential anxiety. Many of the people I encounter simply don't seem very comfortable in the technological world we've created -- with their surroundings, with one another, or with themselves. That helps explain, I think, why people today seem so angry so much of the time, and the general lack of civility that's been widely discussed and decried.

One reason for this lack of trust is, as an earlier poster noted, that we realize that most of the technology available to us today is available because someone wants to make money from it. Profit, not safety or overall social benefit, is the goal. We're also uneasy because we daily witness the effects of technological power used irresponsibly, as when the car in front of us swerves from side to side while its driver texts her girlfriend.

Another major reason for our lack of trust is the insoluble problem of unexpected consequences: those who produce a given technology seldom consider its potential effects in relation to the diverse circumstances in which it will be used, and wouldn't be able to foresee those myriad effects even if they did try to consider them. We're vulnerable to technological mistakes, and on some level we know it.

The growing complexity of technological systems that you cite also helps explain the widespread existential anxiety I referred to above. The combination of dependence and helplessness is not one that promotes security, or a sense of self-possession. Talking to an automobile mechanic or a computer geek makes us feel inadequate -- we've lost trust in ourselves. This helplessness is promoted by manufacturers who realize most consumers today don't *want* to be bothered with repairing the technologies they use.

Contrary to what the earlier poster said, there was a time not so long ago when people knew how to fix a lot of the stuff in their lives, and there was a sense of rootedness that came from that ability. See the recent book "Shop Class as Soulcraft" for a lovely meditation on that theme.

Trust in many respects is a function of engagement, if not intimacy, and here, too, technology can work in the opposite direction. Despite the sorts of surface interactions that are the currency of social media, many of our technologies actively promote disengagement and isolation. Communicating through technologies increases our connectivity in some ways but undermines it in others.

Georg Simmel (and Georg is the correct spelling, btw) had already recognized this tendency at the onset of the twentieth century, when he wrote, in "The Metropolis and Modern Life" (1903),

"Every dynamic extension becomes a preparation not for a similar extension but rather for a larger one, and from every thread which is spun out of it there continue, growing as out of themselves, an endless number of others…. At this point the quantitative aspects of life are transformed qualitatively. The sphere of life of the small town is, in the main, enclosed within itself. For the metropolis it is decisive that its inner life is extended in a wave-like motion over a broader national or international arena."

While it's true that familiarity can breed contempt (as many people are reminded as they spend Thanksgiving Day with their families), and while it's also true that many people can't wait to escape the claustrophobia of small-town life, it's also true that misunderstandings can easily arise when technology enables anonymous interactions over distance. Who hasn't had the experience of having an off-hand remark made in an email misinterpreted by its recipient because the tone didn't come across as it was intended? And who can trust the companies that make many of our most common technologies and techniques when you can't get a real person who works for them on the phone?

Simmel also talked a lot about the disengagement that is endemic to modern life, and that's the problem, it seems to me, with Donna Haraway's urging that we take technology in hand and make it responsive to our needs. I don't disagree, but leave us not forget that passivity is not only the result of many of our technologies but also their purpose.

Excuse me now, there's a football game on TV I want to see.

Bon — November 24, 2011

PJ, i love this piece.

to the points above that most of our complex systems are trying to sell us something: yes, increasingly. the incursion of brand and monetization into the blogosphere and its networks means that not only does digital sociality require trust in platforms and their embedded analytics, but also in the branded performances of those we interact with. but.

Haraway's cyborg recognizes itself as the product of polluted inheritances, like capitalism, and yet contains within itself the capacity to undermine what it has sprung from. when i get weary of the culture of sales that permeates social media, i take comfort in that.

Mike — November 25, 2011

P. J.,

Thought provoking post. I really appreciate the work you and Nathan are posting on these pages.

My response shares in some of the concerns articulated by Mr. Hill in his comment above. Namely, I see the elegance of your argument, but I'm not sure how well it describes the actual existential experience of modern technology. Mostly, I wonder if there is not a distinction between implicit and explicit trust when we talk about the sociability of technology use. In other words, I'm not sure if the users of complex technologies such as the iPhone or social media platforms are consciously deploying what we might meaningfully call "trust" in their use of these technologies and platforms. We could argue that their use implies a kind of de facto trust in the equipment, but does this amount to the kind of trust that operates in truly social relationships? Trusting systems and trusting persons seem to me to be two different phenomena. And while it's possible to argue that people set up and run systems so that finally we're trusting in them, I would suggest that the system, if it is running well, practically obscures the human element. So if we've outsourced our trust, as it were, to expert systems, is it still trust that we are talking about or habituated responses to (mostly) predictable systems?

Historians of technology (and Arthur C. Clarke) have pointed to an affinity between magic and technology that can be traced back to the early modern era. I would suggest that our existential experience of using an iPad, for example, more readily approximates our experience of magic than it does trust among social relations. As I write this it occurs to me that perhaps your analysis assumes the other half of the atomized (political) individual that you criticize, the rational (economic) actor whose decision making is a fully conscious, calculated affair. In other words, I suspect most people are not consciously rationalizing their use of technologies by invoking trust in the persons who stand behind the technologies, rather they use the technology as if it were a magical tool that "just worked" -- Apple products, I think, are paradigmatic here. And if the trust in persons is not explicit, can it meaningfully be called social?

One last thought. Philosopher Albert Borgmann's "device paradigm" comes to mind here. In brief, he argues that our tools are increasingly offering ease of use, effectiveness, and efficiency but at the expense of becoming increasingly opaque to the average user -- much the same dynamic that you describe. But this opacity, in his view, has the effect of alienating us from the technology, and to some degree from the experiences mediated by those technologies. In part, I suspect, this is precisely because we are made to use that which we don't even remotely understand, and this is not really trust, but a kind of gamble. Trust, in social relations, is not quite blind faith; it is risky, but not, in most cases, a gamble. Coming back to the airplane, for many people, statistics notwithstanding, boarding an airplane feels like a gamble and involves very little trust at all.

To wrap up, I suppose I'm suggesting that we need to phenomenologically parse out "trust": reasoned, explicit trust; reasonable, implicit trust; unreasonable, implicit trust; unreasonable, explicit trust (blind faith); explicitly untrusting acquiescence; gambles; etc. Our use of technology relies on many of these, but not all of them amount to the kind of trust that might render us meaningfully social.

That felt like a ramble, but I hope it made some sense.

Cheers.

Technology and Trust « The Frailest Thing — November 25, 2011

[...] that make you think, and that was exactly the effect of PJ Rey’s smart post on Cyborgology, “Trust and Complex Technology: The Cyborg’s Modern Bargain.” After doing some of that thinking, I offered some on-site comments. Because they are related to [...]

Ninguno Real — November 28, 2011

This is one of the most disgusting goddamn things I have ever read. Lately, I've been wondering where all the cyberpunks went. By that, I mean, all the old-school, techno-shamanic anarchist chaos vectors that guided me through my misspent youth in the early 90's. And you've given me my answer; they're all busy 'depending on the expertise of others,' and are therefore incapable of realizing that our whole fucking civilization has been stolen out from under us by the sniveling little gen-Y shits that think that this is all about generating a living wage without having to have a real job. I hope to god that they all realize how fucking useless they've become and repent before our society becomes a banal cesspool without a frontier to direct it.

How Cyberpunk Warned against Apple’s Consumer Revolution » Cyborgology — December 1, 2011

[...] isn’t selling a product, it’s selling an illusion. And to enjoy it (as I described in a recent essay), we must suspend disbelief and simply trust in the “Mac Geniuses”—just as we must [...]

How Cyberpunk Warned against Apple’s Consumer Revolution « PJ Rey's Sociology Blog Feed — December 8, 2011

[...] isn’t selling a product, it’s selling an illusion. And to enjoy it (as I described in a recent essay), we must suspend disbelief and simply trust in the “Mac Geniuses”—just as we must [...]

We Have Never Been Actor Network Theorists » Cyborgology — December 15, 2011

[...] the project that Haraway started and that Latour largely ignores. This is evidenced most clearly in PJ’s piece on Trust and Complexity, the work we presented on the Cyborgology panel at #TtW2011, and Nathan’s piece on Digital [...]

Trust, Enterprise Security, and Autonomous Technology | CTOsite — January 30, 2012

[...] in nature) that the systems we use will work as planned. The philosopher Anthony Giddens writes of this, for example, when talking about cars: Everyone knows that driving a car is a dangerous activity, [...]

Come i cyberpunk ci hanno avvisato della rivoluzione dei consumatori della Apple | Centro Studi Etnografia Digitale — February 6, 2012

[...] product, it’s selling an illusion. And to enjoy it (as I described in a recent essay), one must suspend disbelief and simply place one’s trust in the “Mac [...]

Facebook is Not a Factory (But Still Exploits its Users) « Organizations, Occupations and Work — February 22, 2012

[...] Rey (@pjrey) Is co-founder and co-organizer for both the Cyborgology Blog and the Theorizing the Web conference. He is a PhD student in sociology at the University of [...]

Cyborg | Pearltrees — March 26, 2012

[...] It is no longer feasible to fully comprehend the inner workings of the innumerable devices that we depend on; rather, we are forced to trust that the institutions that deliver these devices to us have designed, tested, and maintained the devices properly. This bargain—trading certainty for convenience—however, means that the Modern cyborg finds herself ever more deeply integrated into the social circuit. In fact, the cyborg’s connection to technology makes her increasingly socially dependent because the technological facets of her being require expert knowledge from others. Trust and Complex Technology: The Cyborg’s Modern Bargain » Cyborgology [...]

Hipsters and Low-Tech » Cyborgology — September 27, 2012

[...] does this relate to technology then? As I have argued in the past, citing Anthony Giddens’ “bargain of modernity,” the complexity of modern technology and [...]

Hipsters and Low-Tech « PJ Rey's Sociology Blog Feed — October 4, 2012

[...] does this relate to technology then? As I have argued in the past, citing Anthony Giddens’ “bargain of modernity,” the complexity of modern [...]

Trust and Complex Technology: The Cyborg’s Modern Bargain » Cyborgology | Cyborgs_Transhumanism | Scoop.it — February 8, 2013

[...] In this essay, I want to continue the discussion about our relationship with the technology we use. Adapting and extending Anthony Giddens’ Consequences of Modernity, I will argue that an essential part of the cyborganic transformation we experience when we equip Modern, sophisticated technology is deeply tied to trust in expert systems. It is no longer feasible to fully comprehend the inner workings of the innumerable devices that we depend on; rather, we are forced to trust that the institutions that deliver these devices to us have designed, tested, and maintained the devices properly. This bargain—trading certainty for convenience—however, means that the Modern cyborg finds herself ever more deeply integrated into the social circuit. In fact, the cyborg’s connection to technology makes her increasingly socially dependent because the technological facets of her being require expert knowledge from others. [...]

Trust, Enterprise Security, and Autonomous Technology - CTOvision.com — December 13, 2014

[…] in nature) that the systems we use will work as planned. The philosopher Anthony Giddens writes of this, for example, when talking about […]