Making the world a better place has always been central to Mark Zuckerberg’s message. From community building to a long record of insistent authenticity, the goal of fostering a “best self” through meaningful connection underlies various iterations and evolutions of the Facebook project. In this light, the company’s recent move to deploy artificial intelligence towards suicide prevention continues the thread of altruistic objectives.

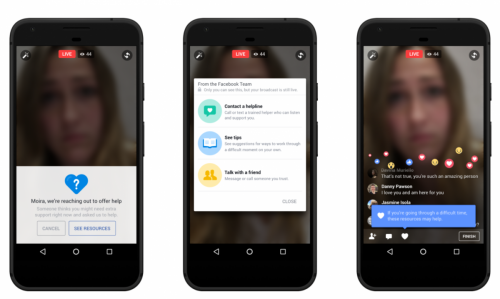

Last week, Facebook announced an automated suicide prevention system to supplement its existing user-reporting model. Whereas users could previously alert Facebook when they were worried about a friend, the new system uses algorithms to identify worrisome content. When a person is flagged, Facebook contacts that person and connects them with mental health resources.

Far from artificial, the intelligence that Facebook algorithmically constructs is meticulously designed to pick up on cultural cues of sadness and concern (e.g., friends asking ‘are you okay?’). What Facebook has done is supplement personal intelligence with systematized intelligence, all based on a combination of personal biographies and cultural repositories. If it’s not immediately clear how you should feel about this new feature, that’s for good reason. Automated suicide prevention as an integral feature of the primordial social media platform brings up dense philosophical concerns at the nexus of mental health, privacy, and corporate responsibility. Although a blog post is hardly the place to solve such tightly packed issues, I do think we can unravel them through recent advances in affordances theory. But first, let’s lay out the tensions.

It’s easy to pick apart Facebook’s new feature as shallow and, worse yet, invasive and exploitative. Such dubiousness is fortified by a quick survey of all Facebook has to gain by systematizing suicide prevention. To be sure, integrating this new feature converges with the company’s financial interests in myriad ways, including branding, legal protection, and data collection.

Facebook’s identity is that of the caring company with the caring CEO. Creating an infrastructure with which to care for troubled users thus resonates directly with the Facebook brand image. Legally, integrating suicide prevention into the platform creates a barrier against lawsuits. Even if suits are unlikely to be successful, they are nonetheless expensive, time-consuming, and of course, bad for branding. Finally, automated suicide prevention entails systematically collecting deeply personal data from users. Data is the product that Facebook sells, and the affective data mined through the suicide prevention program can be packaged as a tradeable good, all the while normalizing deeper data access and everyday surveillance. In these ways, human affect is valuable currency and human suffering is good for business.

At the same time, what if the system works? If Facebook saves just one life, the feature makes a compelling case for itself. A hard-line ideological protest about surveillance and control feels abstract and disingenuous in the face of a dead teenager. Moreover, as an integral part of daily life (especially in the U.S.), Facebook has taken on institutional status. With that kind of power also comes a degree of responsibility. As the platform through which people connect and share, Facebook could well be negligent to exclude safety measures for those whose sharing signals serious self-harm. If Facebook’s going to watch us anyway, shouldn’t we expect them to watch out for us, too?

A tension thus persists between capitalist exploitation through the most personal of means, the wellbeing of real people, and the social responsibility of a thriving corporate entity. Solving such tensions is neither desirable nor possible. These are conditions that exist together and are meaningful largely in their relation. A more productive approach entails clarifying the forces that animate these complex dynamics and laying out what is at stake. Recent conceptual work on affordances, explicating both what affordances are and how they work, offers a useful scaffold for the latter project.

In an article published in the Journal of Computer-Mediated Communication, Evans, Pearce, Vitak, and Treem distinguish between features, outcomes, and affordances. A feature is a property of an artefact (e.g., a video camera on a phone), an outcome is what happens with that feature (e.g., people capture live events), and an affordance is what mediates between the feature and the outcome (e.g., recordability).

Beginning with Evans et al.’s conceptual distinction, we can ask in the first instance: What is the feature, what does it afford, and to what outcome?

The feature here is an algorithm that detects negative affect and evocations of network concern, and that connects concerning persons with friends and professional mental health resources. The feature affords affect-based monitoring. The outcome is multifaceted. One outcome is, hopefully, suicide prevention. The latent outcomes are relinquishment of more data by users and in turn, the acquisition of more user data by Facebook; normalization of surveillance; fodder for the Facebook brand; and protection for Facebook against legal action.

The next question is how automated suicide prevention affords affect-based monitoring, and for whom. Key to Evans et al.’s formulation is the assumption that affordances are variable, which means that the features of an object afford by degrees. The assumption of variability resonates with my own ongoing work[1] in which I emphasize not just what artefacts afford, but how they afford, and for whom. Focusing on variability, I note that artefacts request, demand, encourage, allow, and refuse.

Using the affordance variability model, we can say that the move from personal reporting to automated reporting represents a shift from a system in which intervention was allowed to one in which it is required for those expressing particular patterns of negative affect. By collecting affective data and using it to identify “troubled” people, Facebook demands that users get help, and refuses affective expression without systematic evaluation. In this way, Facebook demands that users provide affective data, which the company can use for both intervention and profit building. With all of that said, these requests, demands, requirements, and allowances will operate in different ways for different users, including users who may strategically circumvent Facebook’s system. For instance, a user may turn the platform’s demand for their data into a request (a request which they rebuff) by using coded language, abstaining from affective expression, or flooding the system with discordant affective cues. What protects one user, then, may invade another; what controls me may be controlled by you.

Ultimately, we live in a capitalist system and that system is exploitative. In the age of social media, capitalist venues for interaction exploit user data and trade in user privacy. How such trades operate, and to what effect, generates complex and often contradictory circumstances of philosophical, ideological, and practical import. The dynamics of self, health, and community as they intersect with the cold logics of market economy evade clear moral categorization. The proper response, from any subject position, thus remains ambiguous and uncertain. Emergent theoretical advancements, such as those in affordances theory, become important tools for traversing ambivalence: identifying the tensions, tracing how they operate, and setting out the stakes. Such tools get us outside of “good/bad” debates and into a place in which ambivalence is compulsory rather than problematic. With regard to suicide prevention via data, affordances theory lets us hold together the material realities of deep and broad data collection, market exploitation, corporate responsibility, and the value of saving human lives.

Jenny is on Twitter @Jenny_L_Davis

Note: special thanks to H.L. Starnes for starting this conversation on Facebook

[1] In a paper under review, I work with James Chouinard to explicate and expand this model.