Over at The New Inquiry, an excellent piece by Trevor Paglen about machine-readable imagery was recently posted. In “Invisible Images (Your Pictures Are Looking at You)”, Paglen shows how the algorithmic breakdown of photo content is a phenomenon that arrives with digital images. When an image is made of machine-generated pixels rather than chemically generated gradations, machines can read those pixels, regardless of whether a human can. With film, machines could not read undeveloped exposures. With bits and bytes, machines have access to image content as soon as it is stored. The scale and speed this enables, argues Paglen, carry major implications for both markets and policing.

Overall, I really enjoyed the essay—Paglen does an excellent job of highlighting how systems that take advantage of machine-readable photographs work, as well as outlining the day-to-day implications of the widespread use of these systems. There is room, however, for some historical context surrounding both systematic photographic analysis and what that means for the unsuspecting public.

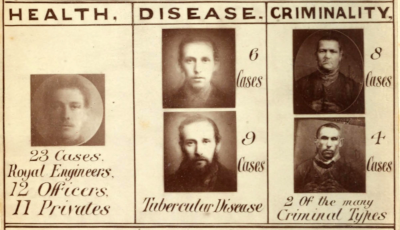

Specifically, I’d like to point to Allan Sekula’s landmark 1986 essay, “The Body and the Archive”, as a guide to the socio-political history of treating photographs as data. In it, Sekula argues that photographic archives are important centers of power. He uses Alphonse Bertillon and Francis Galton as paradigmatic examples. The former devised the anthropometric system of criminal identification, including the standardized police mug shot, that police forces relied on before fingerprinting took hold. The latter is the father of eugenics and, most relevant to Sekula, the inventor of composite portraiture.

So when Paglen notes that “all computer vision systems produce mathematical abstractions from the images they’re analyzing, and the qualities of those abstractions are guided by the kind of metadata the algorithm is trying to read,” I can’t help but think about the projects by Bertillon and Galton. These two researchers believed that mathematical abstraction would provide a truth—one from the aggregation of a mass of individual metrics, the other from a composition of the same, but in photographic form.

Paglen has almost certainly read Sekula’s piece: the New Inquiry essay often references “visual culture of the past” or “classical visual culture”, and “The Body and the Archive” played a major part in the development of visual culture studies. It’s important to note that my goal in referencing the 1986 piece is not to dismiss Paglen’s concerns as “nothing new.” Rather, I think we should consider the “not-new-ness” of the socio-political implications of these image-reading systems (see: 19th-century scientists trying to determine the “average criminal face”) alongside the increased speed and “accuracy” of the technology. That is, humans have been trying to do this for well over a century, but now it is far more widely integrated into our day-to-day lives.

At the end of his essay, Paglen offers a few calls to action:

> To mediate against the optimizations and predations of a machinic landscape, one must create deliberate inefficiencies and spheres of life removed from market and political predations–“safe houses” in the invisible digital sphere. It is in inefficiency, experimentation, self-expression, and often law-breaking that freedom and political self-representation can be found.

I really like these suggestions, though I’d offer one more: re-creation. That is, what if we asked our students to recreate the type of abstracting experiments performed by the likes of Galton and Bertillon, but using today’s technology? Better yet, what if we asked them to recreate today’s machine-reading systems using 19th-century tools? This sort of historical-fictive practice doesn’t require students’ experiments to “work”, per se. Rather, it asks them to consider the steps taken and the decisions made along the way: the whys and hows and wheres. In taking on this task, students might see more concretely the subjectivity inherent in our present-day systems by calling out the individual decisions that must be made during their development. We might even surface possible motives behind projects like Google DeepDream or Facebook’s DeepFace.

Within our new algorithmic watchmen are embedded a plethora of stakeholders, each with their own wants and needs. Paglen, unfortunately, doesn’t do a very good job of reminding us of this (he paints a picture, so to speak, of machines reading machines, but forgets that those machines must be programmed by humans at some point). And I’d be curious to know what he has in mind when he refers to “safe houses” without “market or political predations” (as a colleague recently reminded me, even the Tor project can thank the US government for its existence).

To conclude, I’d like to highlight an important project by the artist Zach Blas, Facial Weaponization Suite (2011–2014). The piece is meant as a protest against facial recognition software in consumer-level devices, corporate and governmental security systems, and research efforts. “One mask,” writes Blas, “the Fag Face Mask, generated from the biometric facial data of many queer men’s faces, is a response to scientific studies that link determining sexual orientation through rapid facial recognition techniques.” Blas uses composite 3D scans of faces to build masks that confuse facial recognition systems.

Facial Weaponization Suite by Zach Blas

This project is important here for two reasons. First, it’s an example of exactly the kind of thing Paglen says won’t work (“In the long run, developing visual strategies to defeat machine vision algorithms is a losing strategy,” he writes). But that’s only true if you see Facial Weaponization Suite as simply a means to confuse the software. If, instead, you recognize the performative nature of the work (individuals walking around in public wearing bright pink masks of amorphous blobs), you quickly understand that the piece can also confuse humans, i.e., bystanders, hopefully bringing an awareness of these machinic systems to the fore.

Wearing the masks in public, however, can violate some state penal codes, which brings me to my second point: understanding the technology alone is not enough. Rather, the technology must be studied in a way that incorporates multiple disciplines: history, of course, but also law, biomedicine, communication hierarchies and infrastructure, and so on.

To be clear, I see Paglen’s essay as an excellent starting point. It begins to bring to our attention what makes our machine-readable world particularly dangerous without tripping any apocalyptic warning sirens. Let’s see if we can’t take it a step further, however, by taking a few steps back.

Gabi Schaffzin is a PhD student in Visual Arts, Art Practice at UC San Diego. He wears his sunglasses at night.