Almost two years ago, Facebook waved the rainbow flag and metaphorically opened its doors to all of the folks who identify outside of the gender binary. Before Facebook announced this change in February of 2014, users were only able to select ‘male’ or ‘female.’ Suddenly, with this software modification, users could choose a ‘custom’ gender that offered 56 new options (including agender, gender non-conforming, genderqueer, non-binary, and transgender). Leaving aside the troubling, but predictable, transphobic reactions, many were quick to praise the company. These reactions could be summarized as: ‘Wow, Facebook, you are really in tune with the LGBTQ community and on the cutting edge of the trans rights movement. Bravo!’ Indeed, it is easy to acknowledge the progressive trajectory that this shift signifies, but we must also look beyond the optics to assess the specific programming decisions that led to this moment.

To be fair, many were also quick to point to the limitations of the custom gender solution. For example, why wasn’t a freeform text field used? Google+ also shifted to a custom solution 10 months after Facebook, but they did make use of a freeform text field, allowing users to enter any label they prefer. By February of 2015, Facebook followed suit (at least for those who select US-English).

There was also another set of responses with further critiques: more granular options for gender identification could entail increased vulnerability for groups who are already marginalized. Perfecting your company’s capacity to turn gender into data equates to a higher capacity for documentation and surveillance of your users. Yet the beneficiaries of this data are not always visible. This is concerning, particularly when we recall that marginalization is closely associated with discriminatory treatment. Transgender women suffer from disproportionate levels of hate violence from police, service providers, and members of the public, but it is murder that is increasingly the fate of people who happen to be both trans and women of color.

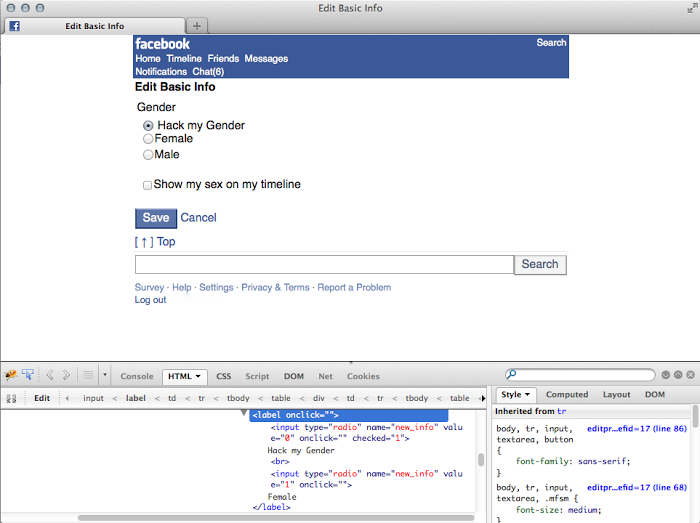

Alongside these horrific realities, there is more to the story – hidden in a deeper layer of Facebook’s software. When Facebook’s software was programmed to accept 56 gender identities beyond the binary, it was also programmed to misgender users when it translated those identities into data to be stored in the database. In my recent article in New Media & Society, ‘The gender binary will not be deprogrammed: Ten years of coding gender on Facebook,’ I expose this finding in the midst of a much broader examination of a decade’s worth of programming decisions that have been geared towards creating a binary set of users.

To make sure we are all on the same page, perhaps the first issue to clarify is that Facebook is not just the blue and white screen filled with pictures of your friends, frenemies, and their children. That blue and white screen is the graphical user interface – it is made for you to see and use. Other layers are hidden and largely inaccessible to the average user. Those proprietary algorithms you keep hearing about, the ones that filter what appears in your news feed? As a user, you can see traces of algorithms on the user interface (the outcome of decisions about what post may interest you most) but you don’t see the code that they depend on to function. The same is true of the database – the central component of any social media software. The database stores and maintains information about every user, and a host of software processes are constantly accessing the database in order to, for example, populate information on the user interface. This work goes on behind the scenes.

When Facebook was first launched back in 2004, gender was not a field that appeared on the sign-up page but it did find a home on profile pages. While it is possible that, back in 2004, Mark Zuckerberg had already dreamed that Facebook would become the financial success that it is today, what is more certain is that he did not consider gender to be a vital piece of data. This is because gender was programmed as a non-mandatory, binary field on profile pages in 2004, which meant it was possible for users to avoid selecting ‘male’ or ‘female’ by leaving the field blank, regardless of their reason for doing so. As I explain in detail in my article, this early design decision became a thorny issue for Facebook, leading to multiple attempts to remove users who had not provided a binary ID from the platform.

Yet there was always a placeholder for users who chose to exist outside of the binary deep in the software’s database. Since gender was programmed as non-mandatory, the database had to permit three values: 1 = female, 2 = male, and 0 = undefined. Over time, gender was granted space on the sign-up page as well – this time as a mandatory, binary field. In fact, despite the release of the custom gender project (the same one that offered 56 additional gender options), the sign-up page continues to be limited to a mandatory, binary field. As a result, anyone who joins Facebook as a new user must identify their gender as a binary before they can access the non-binary options on the profile page. According to Facebook’s Terms of Service, anyone who identifies outside of the binary ends up violating the terms – “You will not provide any false personal information on Facebook” – since the programmed field leaves them with no alternative if they wish to join the platform.
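To make the three-value storage described above concrete, here is a minimal sketch in Python. It is an illustration only, not Facebook's actual code: the names `StoredGender` and `encode_profile_gender` are hypothetical, and the only detail taken from the essay is the encoding itself (1 = female, 2 = male, 0 = undefined).

```python
from enum import IntEnum
from typing import Optional


class StoredGender(IntEnum):
    """Hypothetical enum mirroring the three database values named in the essay."""
    UNDEFINED = 0
    FEMALE = 1
    MALE = 2


def encode_profile_gender(selection: Optional[str]) -> StoredGender:
    """Encode a non-mandatory, binary profile field (hypothetical helper).

    A blank field has to be stored as something, which is why a third
    value exists even though the interface offers only two options.
    """
    if selection is None or selection == "":
        return StoredGender.UNDEFINED
    # Only the two binary labels are accepted; anything else raises KeyError.
    binary_options = {"female": StoredGender.FEMALE, "male": StoredGender.MALE}
    return binary_options[selection]
```

The point of the sketch is that an optional field forces the database to hold a value for "no answer", so a slot outside the binary exists in storage even when the interface does not offer one.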

Over time Facebook also began to define what makes a user ‘authentic’ and ‘real.’ In reaction to a recent open letter demanding an end to ‘culturally biased and technically flawed’ ‘authentic identity’ policies that endanger and disrespect users, the company publicly defended their ‘authentic’ strategy as the best way to make Facebook ‘safer.’ This defense conceals another motivation for embracing ‘authentic’ identities: Facebook’s lucrative advertising and marketing clients seek a data set made up of ‘real’ people and Facebook’s prospectus (released as part of their 2012 IPO) caters to this desire by highlighting ‘authentic identity’ as central to both ‘the Facebook experience’ and ‘the future of the web.’

In my article, I argue that this corporate logic was also an important motivator for Facebook to design their software in a way that misgenders users. Marketable and profitable data about gender comes in one format: binary. When I explored the implications of the February 2014 custom gender project for Facebook’s database – which involved using the Graph API Explorer tool to query the database – I discovered that the gender stored for each user is not based on the gender they selected; it is based on the pronoun they selected. To complete the selection of a ‘custom’ gender on Facebook, users are required to select a preferred pronoun (he, she, or they). Through my database queries, however, a user’s gender only registered as ‘male’ or ‘female.’ If a user selected ‘gender questioning’ and the pronoun ‘she,’ for instance, the database would store ‘female’ as that user’s gender despite their identification as ‘gender questioning.’ In the situation where the pronoun ‘they’ was selected, no information about gender appeared, as though these users have no gender at all. As a result, Facebook is able to offer advertising, marketing, and any other third party clients a data set that is regulated by a binary logic. The data set appears to be authentic, proves to be highly marketable, and yet contains inauthentic, misgendered users. This re-classification system is invisible to the trans and gender non-conforming users who now identify as ‘custom.’
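The re-classification described above can be sketched in a few lines of Python. This is a reconstruction of the observed behavior under stated assumptions, not Facebook's implementation: the function `stored_gender` and the table `PRONOUN_TO_STORED_GENDER` are hypothetical names, and the mapping for ‘he’ is inferred by symmetry with the ‘she’ example given in the text.

```python
from typing import Optional

# Hypothetical lookup table: the pronoun alone decides the stored value.
# 'they' maps to None, matching the queries that returned no gender at all.
PRONOUN_TO_STORED_GENDER = {
    "she": "female",
    "he": "male",   # assumed by symmetry with the 'she' case
    "they": None,
}


def stored_gender(custom_gender: str, pronoun: str) -> Optional[str]:
    """Return the value a query would show for this user (illustrative only).

    The custom_gender argument is accepted but never consulted, which is
    exactly the behavior the essay describes.
    """
    return PRONOUN_TO_STORED_GENDER[pronoun]
```

Under these assumptions, `stored_gender("gender questioning", "she")` yields `"female"`, and any custom gender paired with ‘they’ yields no value, so the custom label never reaches the data set offered to third parties.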

When Facebook waved the rainbow flag, there was no indication that ad targeting capabilities would include non-binary genders. And, to be clear, my analysis is not geared towards improving database programming practices in order to remedy the fraught targeting capabilities on offer to advertisers and marketers. Instead, I seek to connect the practice of actively misgendering trans and gender non-conforming users to the hate crimes I mentioned earlier. The same hegemonic regimes of gender control that perpetuate the violence and discrimination disproportionately affecting this community are reinforced by Facebook’s programming practices. In the end, my principal concern here is that software has the capacity to enact this symbolic violence invisibly by burying it deep in the software’s core.

Rena Bivens (@renabivens) is an Assistant Professor in the School of Journalism and Communication at Carleton University in Ottawa, Canada. Her research interrogates how normative design practices become embedded in media technologies, including social media software, mobile phone apps, and technologies associated with television news production. Rena is the author of Digital Currents: How Technology and the Public are Shaping TV News (University of Toronto Press 2014) and her work has appeared in New Media & Society, Feminist Media Studies, the International Journal of Communication, and Journalism Practice.

This essay is cross-posted at Culture Digitally

Headline pic from Bivens’ recent article The gender binary will not be deprogrammed: Ten years of coding gender on Facebook