
While putting together the most recent project for External Pages, I have had the pleasure of working with artist and designer Anna Tokareva in developing Baba Yaga Myco Glitch™, an online exhibition about corporate mystification techniques that boost the digital presence of biotech companies. Working on BYMG™ catalysed an exploration of the shifting critiques of interface design in the User Experience community. These discourses shape powerful standards for not just illusions of consumer choice, but corporate identity itself. However, I propose that as designers, artists and users, we are able to recognise the importance of visually identifying such deceptive websites in order to interfere with corporate control over online content circulation. Scrutinising multiple website examples to inform the aesthetic themes and initial conceptual stages of the exhibition, we specifically focused on finding the common user interfaces and content language that enhance internet marketing.

Anna’s research on political fictions that direct the necessity for a global mobilisation of big data in Нооскоп: The Nooscope as Geopolitical Myth of Planetary Scale Computation led to a detailed study of current biotech incentives as motivating forces of technological singularity. She argues that in order to achieve “planetary computation”, political myth-building and semantics are used for scientific thought to centre itself on the merging of humans and technology. Exploring Russian legends in fairytales and folklore that traverse seemingly binary oppositions of the human and non-human, Anna interprets the Baba Yaga (a Slavic fictitious female shapeshifter, villain or witch) as a representation of the ambitious motivations of biotech’s endeavour to achieve superhumanity. We used Baba Yaga as a main character to further investigate such cultural construction by experimenting with storytelling through website production.

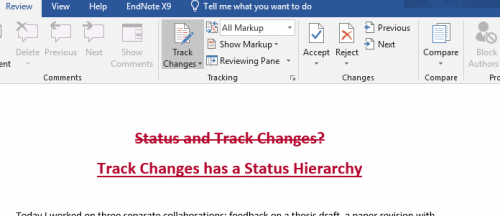

The commercial biotech websites that we looked at for inspiration were either incredibly blasé, where descriptions of the company’s purpose would be extremely vague and unoriginal (e.g., GENEWIZ), or unnervingly overwhelming with dense articles, research and testimonials (e.g., Synbio Technologies). Struck by the aesthetic and experiential banality of these websites, we wondered why they all seemed to mimic each other. Generic corporate interface features such as full-width nav bars, header slideshows, fade animations, and contact information were distributed in a determined chronology of vertically-partitioned main sections. Starting from the top and moving down, we were presented with a navigation menu, slideshow, company services, awards and partners, and a “learn more” or “order now” button, before eventually landing on an extensive footer.

This UI conformity easily permits a visual establishment of professionalism and validity; a quick seal of approval for legitimacy. It is customary throughout the UX and HCI paradigm, a phenomenon that Olia Lialina describes as “mainstream practices based on the postulate that the best interface is intuitive, transparent, or actually no interface” in Once Again, The Doorknob. Referring back to Don Norman’s Why Interfaces Don’t Work, which champions computers that serve only as devices for simplifying human lives, Lialina explains why this ethos contributes to diminishing user control, a sense of individualism and society-centred computing in general. She uses GeoCities as a counterpoint to Norman’s design attitude and an example of sites where users are expected to create their own interface. Identifying the problem with designing computers to be machines that only make life easier via such “transparent” interfaces, she argues:

“‘The question is not, “What is the answer?” The question is, “What is the question?”’ Licklider (2003) quoted french philosopher Anry Puancare [Henri Poincaré] when he wrote his programmatic Man Computer Symbiosis, meaning that computers as colleagues should be a part of formulating questions.”

Having coined the term “User Centred Design” and laid the foundations of User Experience as the first User Experience Architect at Apple in 1993, Norman advocated a transparent design that has unfortunately become a universal reality. It has advanced into a standard so impenetrable that a business’s legitimacy and success are probably at stake if it does not follow these rules. The idea that we’ve become dependent on reviewing the website rather than the company itself – leading to user choices being heavily steered by websites rather than company ethos – is nothing new. Additionally, the invisibility of transparent interface design has proceeded to fool users into an algorithmic “free” will. Jenny Davis’s work on affordances highlights that just because functions or information may be technically accessible, they are not necessarily “socially available”, and the critique of affordance extends to the critique of society. In Beyond the Self, Jack Self describes website loading animations or throbbers (moving graphics that illustrate the site’s current background actions) as synchronised “illusions of smoothness” that support neoliberal incentives of real-time efficiency.

“The throbber is thus integral to maintaining the illusion of inescapability, dissimulating the possibility of exiting the network—one that has become both spatially and temporally coextensive with the world. This is the truth of the real-time we now inhabit: a surreal simulation so perfectly smooth it is indistinguishable from, and indeed preferable to, reality.”

These homogeneous, plain-sailing interfaces reinforce a mindset of inevitability and, at the same time, can create slick operations that cheat the user. “Dark patterns” are interface elements deliberately built to trick users into completing tasks, such as signing up or purchasing, to which they may not have consented. My lengthy experience with recruitment websites illustrates the impact that sites have on the portrayal of true company intentions. Constantly reading about the struggles of obtaining a position in the tech industry, I wondered how these agencies make commission when finding employment seems so rare. I persisted and filled out countless job applications and forms, and received nagging emails and calls from recruiters asking for a profile update or elaboration, until I finally realised that I had been swindled by the consultancies for my monetised data (which I handed over via applications). Having found out that these companies profit from applicant data and not job offer commissions, I slowly withdrew from any further communication as I knew this would only lead to another dead end. As Anna and I roamed through examples of biotech companies online, it was easy to spot the UI shared between recruitment and lab websites: welcoming slideshows and all the obvious keywords like “future” and “innovation” stamped across images of professionals doing their work. It was impossible not to question the sincerity of what the websites displayed.

Along with the financial motives behind tech businesses, there are also fundamental internal and external design factors that diminish the trustworthiness of websites. Search engine optimisation is vital in controlling how websites are marketed and ranked. In order to fit into the confines of web indexing, site traffic now depends on not just handling Google Analytics but creating keywords that are either exposed in the page’s content or mostly hidden within metadata and backlinks. As the increase in backlinks correlates with the growth of SEO, corporate websites implement dense footers with links to all their pages, web directories, social media, newsletters and contact information. The more noise a website makes via its calls to external platforms, the more noise it makes on the internet in general.
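To make these cues concrete, here is a minimal sketch of how a reader might surface them from a page's markup, using only Python's standard library. It assumes the page exposes its keywords in a `<meta name="keywords">` tag and wraps its link-heavy bottom section in a `<footer>` element; real sites vary widely. The names `SeoCueParser`, `seo_cues` and `SAMPLE`, and the sample markup itself, are hypothetical and only there for illustration.

```python
from html.parser import HTMLParser


class SeoCueParser(HTMLParser):
    """Collects meta keywords/description and counts links inside <footer>."""

    def __init__(self):
        super().__init__()
        self.meta = {}         # values of <meta name="keywords|description">
        self.footer_depth = 0  # greater than 0 while inside a <footer>
        self.footer_links = 0
        self.total_links = 0

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if tag == "meta" and name in ("keywords", "description"):
            self.meta[name] = attrs.get("content") or ""
        elif tag == "footer":
            self.footer_depth += 1
        elif tag == "a" and attrs.get("href"):
            self.total_links += 1
            if self.footer_depth:
                self.footer_links += 1

    def handle_endtag(self, tag):
        if tag == "footer" and self.footer_depth:
            self.footer_depth -= 1


def seo_cues(html):
    """Return the hidden keywords and the share of links that sit in footers."""
    parser = SeoCueParser()
    parser.feed(html)
    parser.close()
    raw_keywords = parser.meta.get("keywords", "")
    keywords = [k.strip() for k in raw_keywords.split(",") if k.strip()]
    share = parser.footer_links / parser.total_links if parser.total_links else 0.0
    return {
        "keywords": keywords,
        "description": parser.meta.get("description", ""),
        "footer_links": parser.footer_links,
        "total_links": parser.total_links,
        "footer_share": share,
    }


# Hypothetical markup, loosely modelled on the sites described above.
SAMPLE = """
<html><head>
<meta name="keywords" content="future, innovation, genomics">
<meta name="description" content="Innovating the future of biotech.">
</head><body>
<a href="/services">Services</a>
<footer>
<a href="/about">About</a>
<a href="/news">News</a>
<a href="https://example.com/social">Social</a>
</footer>
</body></html>
"""

if __name__ == "__main__":
    print(seo_cues(SAMPLE))
```

A high footer share or a long list of buzzword keywords proves nothing on its own; the sketch only makes visible what the markup already says about how a page wants to be indexed.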

The online consumer’s behaviour is another factor in shaping marketing strategies. Besides brainstorming what users might search, SEO managers are inclined to find related terms by scrolling through Google’s results page and seeing what else their users have already searched for. Here, we can see how Google’s algorithms produce a tailored feedback loop of strategic content distribution that simultaneously feeds an uninterrupted rotating dependency on their search engine.

It is clear that keyword research helps companies come up with their content delivery and governance, and I worry about the line blurring between the information’s delivery strategy and its actual meaning. Alex Rosenblat observes how Uber uses multiple definitions for their drivers in court hearings in order to shift blame onto them as “consumers of its software”, subsequently enabling tech companies to switch so often between the words “users” and “workers” that they become fully entangled. In the SEO world, avoiding keyword repetition additionally helps companies avoid competing with their own content, and companies like Uber easily benefit from this specific game plan as they can freely interchange their wording when necessary. With the increase in applying a varied range of buzzwords, encouraged by using multiple words to portray one thing, it’s evident that Google’s SEO system plays a role in stimulating corporations to implement ambiguous language on their sites.

However, search engine restrictions also further the SEO manipulation of content. There has been a multitude of studies (such as Enquiro, EyeTools and Did-It or Google’s Search Quality blog and User Experience findings) that look at our eye-tracking patterns when searching for information, many of which back up the rules of the “Golden Triangle” – a triangular space in which the highest density of attention sits at the top and trickles down the left of the search engine results page (SERP). While the shape changes in relation to the SERP’s interface evolution (as explained in a Moz blog post by Rebecca Maynes), the studies reveal how Google’s search engine interface offers the illusion of choice, while also exploiting the fact that users will pick the first three results.

In a Digital Visual Cultural podcast, Padmini Ray Murray describes Mitchell Whitelaw’s project, The Generous Interface, where new forms of searching are reviewed through interface design to show the actual scope and intricacy of digital heritage collections. In order to realise generous interfaces, Whitelaw considers functions like changing results every time the page is loaded or randomly juxtaposing content. Murray underlines the importance of Whitelaw’s suggestions to completely rethink how we display collections as a way to untie us from the Golden Triangle’s logic. She claims that our reliance on such online infrastructures is a design flaw.
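The two functions mentioned here, reordering results on every load and randomly juxtaposing content, are simple enough to sketch. The following is only one possible reading of the idea in Python, not Whitelaw's own implementation; `generous_order`, `juxtapose` and the `COLLECTION` titles are hypothetical names made up for the example.

```python
import random

# Hypothetical collection items; a real interface would load these from a catalogue.
COLLECTION = [
    "Glass plate negative",
    "Embroidered shawl",
    "Field recording",
    "Botanical print",
    "Hand-drawn map",
    "Clay figurine",
]


def generous_order(items, rng=None):
    """Return the items in a fresh random order, leaving the input untouched.

    Calling this on every page load means no item permanently owns the
    top-left corner of the results.
    """
    if rng is None:
        rng = random.Random()
    shuffled = list(items)
    rng.shuffle(shuffled)
    return shuffled


def juxtapose(items, rng=None):
    """Pair up randomly ordered items so unrelated objects appear side by side.

    With an odd number of items, the last one in the shuffled order is left out.
    """
    shuffled = generous_order(items, rng)
    return list(zip(shuffled[::2], shuffled[1::2]))


if __name__ == "__main__":
    for left, right in juxtapose(COLLECTION):
        print(f"{left}  |  {right}")
```

Because the order is drawn afresh each time, there is no stable first three results for attention to settle on, which is the point of the contrast with the Golden Triangle.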

“The state of the web today – the corporate web – the fact that it’s completely taken over by four major players, is a design problem. We are working in a culture where everything we understand as a way into information is hierarchical. And that hierarchy is being decided by capital.”

Interfaces of choice are contested and monopolised, guiding and informing user experience. After we have clicked on our illusion of choice, we are given yet another illusion – through the mirage of soft and polished animations, friendly welcome page slideshows and statements of social motivation – we read about company ethos (perhaps we’re given the generic slideshow and fade animation to distract us if the information is misleading).


Murray goes on to describe a project developed by Google’s Cultural Institute called Womenwill India, which approaches institutions to digitise cultural artefacts that speak to women in India. This paved the way for scandals where institutions that could not afford, or did not have the expertise, to digitise their own collections ended up simply handing them over to Google. She goes on to examine the suspiciousness of the program through the motivations that lie beneath the concept of digitising collections and the institute’s loaded power: “it’s used for machines to get smarter, not altruism […] there is no interest in curating with any sense of sophistication, nuance or empathy”. Demonstrating the program’s dubious incentives, she points to the website’s cultivation of exoticism with the use of “– India” affixed to the product’s title. She continues to describe the website as “absolutely inexplicable”, as it flippantly throws together unrelated images labelled ‘Intellectuals’, ‘Gods and Goddesses’ and ‘Artworks’ with ‘Women Who Have Encountered Sexual Violence During The Partition’.

When capital has power over the online circulation of public relations, the distinction between website design and content begins to fade, which leads design to take on multiple roles. Since design acts as a way of presenting information, Murray believes it therefore has the potential to correct it.

“This is a metadata problem as well. Who is creating this? Who is telling us that this is what things are? The only way that we can push back against the Google machine is to start thinking about interventions in terms of metadata.”

The bottom-up approach of considering interventions as metadata could then also be applied to the algorithmic activities of web crawlers. The metadata (a word I believe Murray also uses to express the act of naming and describing information) of a website specifies “what things are”. While the algorithmic activity of web crawlers further enhances content delivery, search engine infrastructure is ruled by the unification of two very specific forces: crawler and website. As algorithms remain inherently non-neutral, developed by agents with very specific motives, the suggestion to use metadata as a vehicle for intervention (within both crawlers and websites) can make bottom-up processing a strong political tactic.

Web crawlers’ functions are unintelligible and concealed from the user’s eye. Yet they’re connected to metadata, whose information seeps through to reach public visibility via content descriptions on the results page, drawn-out footers containing extensive amounts of links, ambiguous buzzword language or any of the conforming UI features mentioned above. This allows users (as visual perceivers) to begin to identify the suspicious motives of websites through their interfaces. These aesthetic cues give us little snippets of what the “Google machine” actually wants from us. And, while they may just present the tip of the iceberg, they are a prompt not to underestimate, ignore or become numb to the general corporate visual language of dullness and disguise. The idea of making interfaces invisible has turned into an aesthetic of deception, and Norman’s transparent design manifesto has collapsed in on itself. When metadata and user interfaces work as ways of publicising the commercial logic of computation by exposing hidden algorithms, we can start to collectively see, understand and hopefully rethink these digital forms of (what used to be invisible) labour.

Ana Meisel is a web developer and curator of External Pages, starting her MSc in Human Computer Interaction and Design later this year. anameisel.com, @ananamei

Headline Photo: Source

Photo 2: Source